MLOps Zoomcamp FAQ

Table of Contents

General Course-Related Questions

# I forgot if I registered, can I still join the zoomcamp?

You don't need to register, as registration is not mandatory. It is only used for gauging interest and collecting data for analytics. You can start learning and submitting homework without registering even while a cohort is “live”. There is no check against any registered list.

# Is it going to be live? When?

The course videos are pre-recorded, and you can start watching the course right now.

The zoomcamps are spread out throughout the year. See the article Guide to Free Online Courses at DataTalks Club.

We will also occasionally have office hours — live sessions where we will answer your questions. The office hours sessions are recorded too.

You can see the office hours (playlist with year 20xx) as well as the pre-recorded course videos in the Course Channel’s Bookmarks and/or DTC’s YouTube channel.

# Course - Can I still join the course after the start date?

Yes, even if you don't register, you're still eligible to submit the homeworks as long as the form is still open and accepting submissions.

Be aware, however, that there will be deadlines for turning in the final projects. So don't leave everything to the last minute.

# Course: How do I start?

No matter if you're with a 'live' cohort or following in self-paced mode, start by:

Reading pins and bookmarks on the course channel to see what things are where.

Reading the repository (bookmarked in channel) and watching the video lessons (playlist bookmarked in channel).

If you have questions, search the channel itself first; someone may have already asked and gotten a solution.

For the most Frequently Asked Questions, refer to this document:

If you don't want to read/skimmer/search the FAQ document, tag the

@ZoomcampQABotwhen asking questions, and it will summarize answers from its knowledge base.For generic, non-zoomcamp queries, consider using tools like ChatGPT, BingCopilot, or Google Gemini, especially for error messages.

Check if you're on track by checking the deadlines in the Course Management form for homework submissions.

The main difference if you're not in a "live" cohort is that responses to your questions might be delayed because fewer active students are online. This won't be an issue if you do your own due diligence by searching for answers first and reading the documentation of the library.

- If you need to ask questions and the resources above haven't helped, follow the guidelines in the

asking-questions.mddocument (bookmarked in channel) and also check the Pins.

# Course - Can I still graduate when I didn’t complete homework for week x?

Yes

# Certificate - Can I follow the course in a self-paced mode and get a certificate?

No, you can only get a certificate if you finish the course with a “live” cohort. We don't award certificates for the self-paced mode. The reason is you need to peer-review capstone(s) after submitting a project. You can only peer-review projects at the time the course is running.

# What’s the difference between the 2023 and 2022 course?

The difference is the Orchestration and Monitoring modules. Those videos will be re-recorded to use Prefect. The rest should mostly be the same.

Also, all of the homeworks will be changed for the 2023 cohort.

# Cohort: What’s the difference between the 2024 and 2023 course?

The difference is the Orchestration and Monitoring modules. Those videos will be re-recorded to use Mage-AI. The rest should mostly be the same.

Additionally, all of the homeworks will be changed for the 2024 cohort.

# Cohort: Will there be a 2024 Cohort? When will the 2024 cohort start?

Yes, it will start in May 2024.

# Cohort: I missed the current cohort, when is the next cohort scheduled for? Will there be a 202x cohort?

Please see the summary of all zoomcamps and their respective schedule at this link.

Note that there's no guarantee the zoomcamps will be run indefinitely or that the same zoomcamps will be conducted every year.

# Homework: What if my answer is not exactly the same as the choices presented?

Please choose the closest one to your answer. Also, do not post your answer in the course Slack channel.

# Homework: where can I find the schedule and/or deadlines of each homework assignment?

You can find the deadlines for each homework assignment in the course schedule or timeline provided at https://courses.datatalks.club/mlops-zoomcamp-2024/. The time is your own local time, as it has been automatically converted.

# Homework: Is the due date for homework 20th May? How do I check the updated playlist and homework for the mlops course?

The due date differs due to participants being in different time zones. It was the midnight of May 19th/20th in Berlin, and whatever corresponded to that in your particular time zone. You can find the deadline on the homework submission page:

You can find all cohort-specific information for the 2025 cohort here:

# Homework: Why is the experiment in question 6 taking so long to run? Should we use yellow taxi data, or green taxi data?

You might need to use the green taxi data rather than yellow taxi data. The preprocessing code provided with the homework expects green taxi data (even though the question specifies yellow taxi data). Using green taxi data seems to be the correct approach, based on similar questions. Note that there is no official confirmation of this yet (as of early morning, May 27th in Berlin).

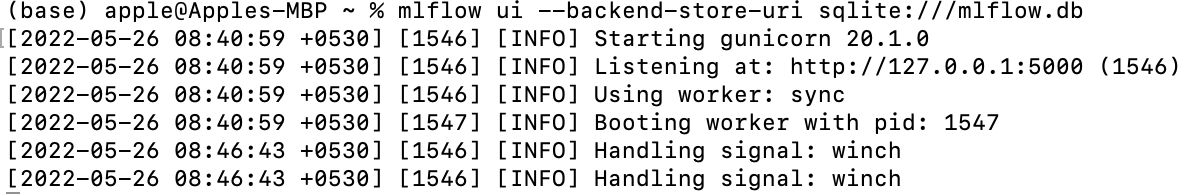

# Homework: I am getting conflicts on server ports and cannot establish a connection to the MLflow server, why?

Your port (5000) may be in use by some other process. To resolve this:

Run the following command to find out which process is using the port:

lsof -i :5000Either kill the process using that port or route to a different port. You can explicitly change the port with the following command:

mlflow ui --backend-store-uri sqlite:///mlflow.db --port 5001

# Homework 3, 2025 Cohort - Do I have to use MAGE as the orchestrator? Can I use any orchestrator I want?

You do not have to use MAGE or any specific orchestrator; it is totally up to you.

# Homework: Just found this course, can I still submit homeworks?

To clarify on late homework submissions:

- You cannot submit after the homework is scored, as the form is closed.

- Once the form is closed (i.e., scored), no further submissions are possible.

- You can check your code against the solution by reviewing the

homework.mdfile.

If the due date has passed but the form is still "Open/Submittable":

- This is considered a "late homework submission," and the form is still editable.

- Don’t forget to click the Update button to save any changes.

Please note, it's uncertain when the form will be closed as this process is currently manual.

# Hi, is it too late to start the course, I have ML experience?

It really depends on how much time and effort you can dedicate to the project over the coming weeks. Since you're late for the homeworks and they aren't required for the certificate, it might make sense to focus on the projects. Even if the first attempt is a struggle, it will be the best preparation for a second attempt, should you need or want one.

# Project: Are we free to choose our own topics for the final project?

Please pick up a problem you want to solve yourself. Potential datasets can be found on either Kaggle, Hugging Face, Google, AWS, or the UCI Machine Learning Datasets Repository. More links are documented in datasets.md. Please also read the README.md in 07-project folder.

# Project: Is the capstone an individual or team project?

It is an individual project.

# Project: For the final project, is it required to be put on the cloud?

You can get a few cloud points by using Kubernetes even if you deploy it only locally. Alternatively, you can use LocalStack to mimic AWS. Be sure you're clear on the Evaluation Criteria.

# Homework and Leaderboard: what is the system for points in the course management platform?

After you submit your homework, it will be graded based on the number of questions in that particular homework. You can see how many points you have on the homework page at the top.

In the leaderboard, you will find the sum of all points you've earned, which include:

- Points for Homeworks

- Points for FAQs

- Points for Learning in Public

If you submit something to the FAQ, you receive one point. For each Learning in Public link, you also get one point. Hover over the "?" for some explanations.

# What exactly is a learning-in-public post?

They are content that you create about what you have learned on a specific topic. Some DOs and DON’Ts are explained by Alexey in the following video:

https://www.loom.com/share/710e3297487b409d94df0e8da1c984ce

Anyone caught abusing and gaming the system will be publicly called out and have their points stripped so they don’t appear high on the Leaderboard (as of 18 June 2024).

# Leaderboard: I am not on the leaderboard / how do I know which one I am on the leaderboard?

When you set up your account, you are automatically assigned a random name such as “Lucid Elbakyan.” Click on the "Jump to your record on the leaderboard" link to find your entry.

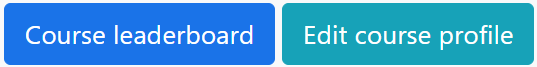

To see what your display name is, click on the Edit Course Profile button.

- The first field is your nickname/displayed name. Change it if you want to use your Slack username, GitHub username, or any other nickname to remain anonymous.

- Unless you want "Lucid Elbakyan" on your certificate, it is mandatory that you change the second field to your official name as per your identification documents (passport, national ID card, driver's license, etc.). This is the name that will appear on your Certificate!

# Error: creating Lambda Function (...): InvalidParameterValueException: The image manifest, config or layer media type for the source image ... is not supported.

This error occurs when the Docker image you are using is a manifest list (multi-platform). AWS Lambda does not support manifest lists—it only accepts single-platform images with a standard image manifest.

Quick fix: Build your Docker image using docker buildx and specify the platform explicitly.

docker buildx build --platform linux/amd64 -t your-ecr-image:latest -f Dockerfile .

This ensures the image is compatible with AWS Lambda. Also, make sure that you push your image using the --platform option.

# Criteria for getting a certificate?

Finish the Capstone project.

# Is completion of Homework necessary for a certificate?

No.

Can I submit the final project on the second attempt and still receive the certificate?

Yes, absolutely. It's your choice whether to submit one or two times; passing any one attempt is sufficient to earn the certificate.

Module 1: Introduction

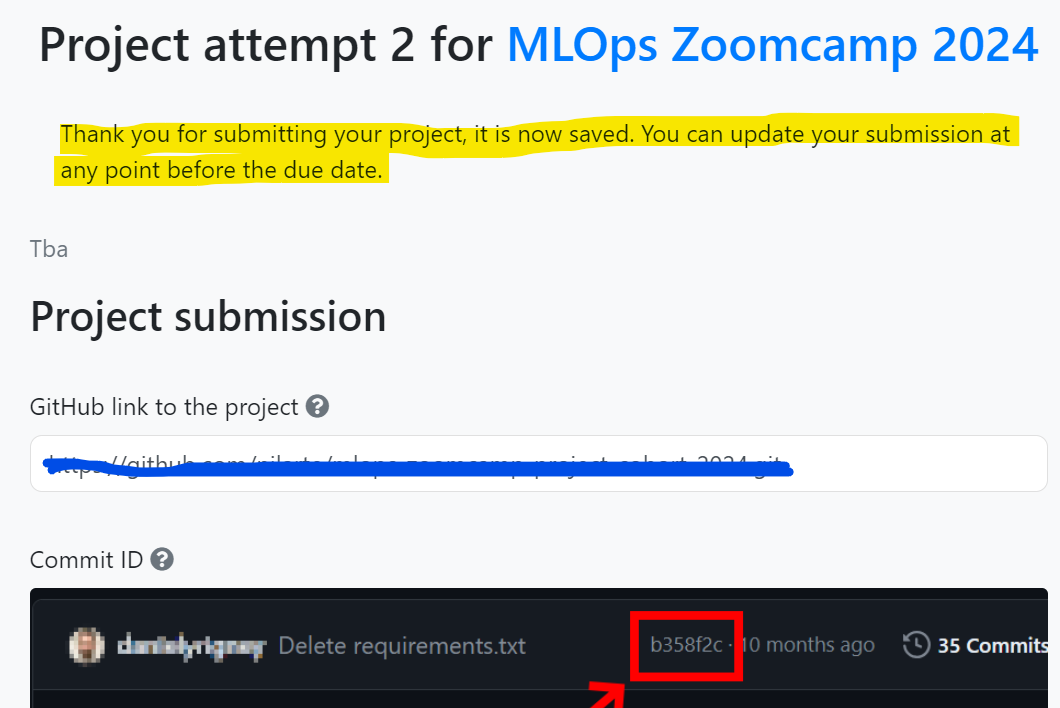

# Can I submit and update my project attempt multiple times before the final deadline?

Yes, you can submit and update your project attempts multiple times before the final deadline.

- It is advisable not to wait until the last minute. Submitting even a partially completed project early allows you to make improvements over time.

- Continue adding improvements as needed until the final date.

- Simply update the Git commit SHA to reflect changes.

# Opening Jupyter in VSCode

You can install the Jupyter extension to open notebooks in VSCode.

# Launching Jupyter notebook from codespace VM

When you are ready and have installed Anaconda, you can launch a Jupyter notebook in a new terminal with the following command:

jupyter notebook

Be careful not to make any typos. For instance, entering "jupyter-notebook" will result in an error:

Jupyter command `jupyter-notebook` not found.

# Configuring Github to work from the remote VM

In case you want to set up a GitHub repository (e.g., for homeworks) from a remote VM, you can follow these helpful tutorials:

- Setting up GitHub on AWS instance: Tutorial

- Setting up keys on AWS instance: GitHub Documentation

Once you complete these steps, you should be able to push to your repository successfully.

AWS Instance Note:

The selected AWS instance may not be covered under the free tier due to its size or other factors. Here is what the AWS free tier includes:

- Resizable compute capacity in the Cloud.

- 750 hours per month of Linux, RHEL, or SLES t2.micro or t3.micro* instance, depending on the region.

- 750 hours per month of Windows t2.micro or t3.micro* instance, depending on the region.

- 750 hours per month of public IPv4 address regardless of the instance type.

*Instances launch in Unlimited mode and may incur additional charges.

# Opening Jupyter in AWS

Faced issue while setting up Jupyter Notebook on AWS. I was unable to access it from my desktop. (I am not using Visual Studio and hence faced problem)

Run the following command:

jupyter notebook --generate-configEdit the file

/home/ubuntu/.jupyter/jupyter_notebook_config.pyto add the following line:NotebookApp.ip = '*'

# WSL: instructions

If you wish to use WSL on your Windows machine, here are the setup instructions:

Install wget:

sudo apt install wgetDownload Anaconda from the Anaconda download page using the

wgetcommand:wget <download-address>Turn on Docker Desktop WSL 2:

Clone the desired GitHub repository:

git clone <github-repository-address>Install Jupyter:

pip3 install jupyterConsider using Anaconda, which includes tools like PyCharm and Jupyter.

Alternatively, download Miniforge for a lightweight, open-source version of conda that supports mamba for improved environment solving speed. The Texas Tech University High Performance Computing Center provides a detailed guide:

For Windows, install WSL via:

wsl --installIf Python shows as version 3.10 after installing Anaconda with Python 3.9, execute:

source .bashrcIf the issue persists, add the following to your PATH:

export PATH="<anaconda-install-path>/bin:$PATH"

For using VSCode with WSL, refer to VSCode on WSL.

# Git: Created repo without .gitignore

If you created a repository without a .gitignore, follow these steps to add one:

Open Terminal.

Navigate to the location of your Git repository.

Create a .gitignore file for your repository:

touch .gitignoreLocate the .gitignore file. If you already have it, open it.

Edit the .gitignore file and add the following lines:

# Python *.pyc __pycache__/ *.py[cod] *$Save the changes to the .gitignore file.

Commit the changes.

# .gitignore: how-to

If you create a folder data and download datasets or raw files to your local repository, you might want to push all your code to a remote repository without including these files or folders. To achieve this, use a .gitignore file.

Follow these steps to create a .gitignore file:

- Create an empty

.txtfile using a text editor or command line. - Save as

.gitignore(ensure you use the dot symbol). - Add rules:

*.parquetto ignore all Parquet files.data/to ignore all files in thedatafolder.

For more patterns, read the GIT documentation.

<>{IMAGE:image_id}

# AWS: Suggestions

Ensure when stopping an EC2 instance that it fully stops. Look for the status indicator: green (running), orange (stopping), and red (stopped). Refresh the page to confirm it shows a red circle and status as stopped.

Note that stopping an EC2 instance might still incur charges, such as storage costs for uploaded data on an EBS volume.

Consider setting up billing alerts to monitor costs. However, specific instructions for setting them up are not provided here.

# IBM Cloud an alternative for AWS

You can get an invitation code from Coursera and use it in your account to verify it. IBM Cloud offers different features.

# AWS costs:

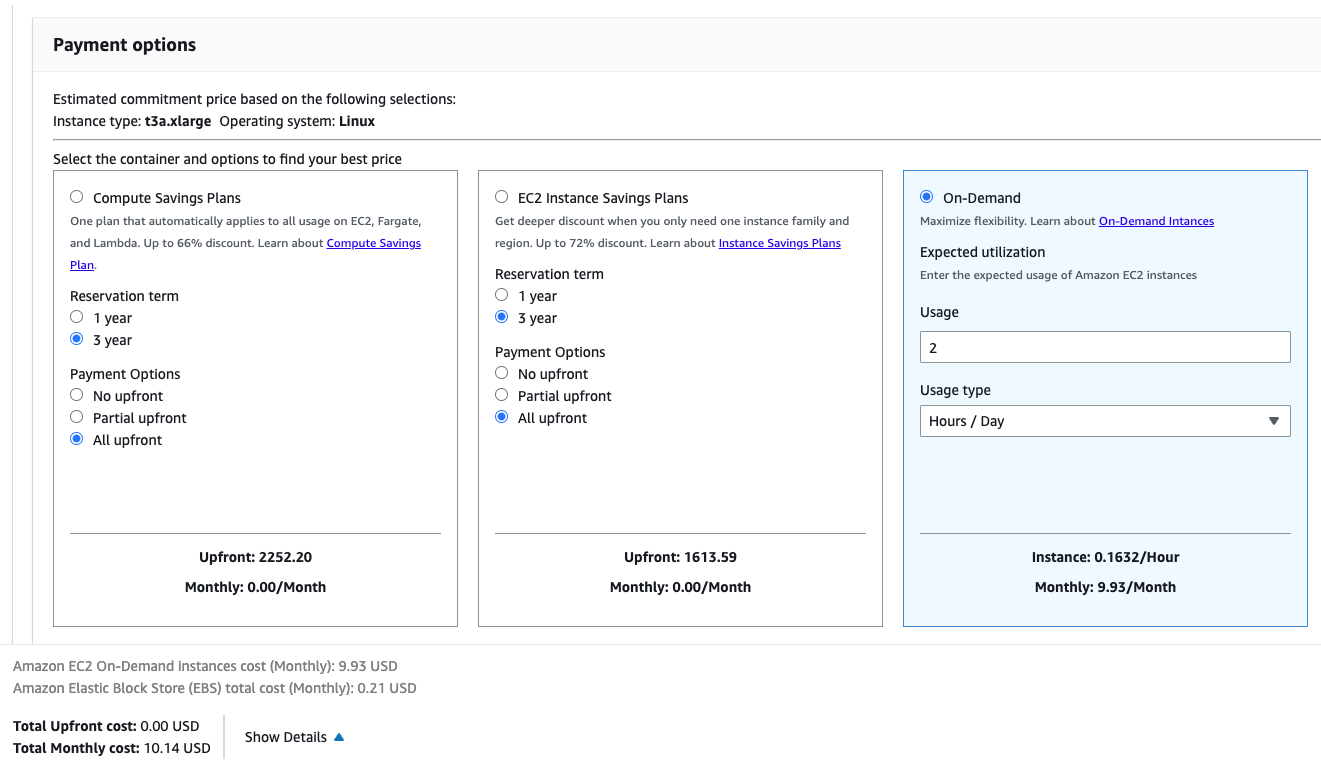

I am worried about the cost of keeping an AWS instance running during the course.

With the instance specified during working environment setup, if you remember to Stop Instance once you finish your work for the day, using that strategy, in a day with about 5 hours of work, you will pay around $0.40 USD, which will account for $12 USD per month. This seems to be an affordable amount.

You must remember that you will have a different public IP address every time you restart your instance, and you will need to edit your SSH Config file. It's worth the time though.

Additionally, AWS enables you to set up an automatic email alert if a predefined budget is exceeded.

Here is a tutorial to set this up.

Also, you can estimate the cost yourself using the AWS pricing calculator. At the time of writing (20.05.2023), a t3a.xlarge instance with 2 hr/day usage (which translates to 10 hr/week and should be enough to complete the course) and 30GB EBS monthly cost is 10.14 USD.

Here’s a link to the estimate.

# Is the AWS free tier enough for doing this course?

For many parts - yes. Some services like Kinesis are not in the AWS free tier, but you can use them locally with LocalStack.

# AWS EC2: this site can’t be reached

When I click an open IP address in an AWS EC2 instance, I get an error: "This site can’t be reached." What should I do?

This IP address is not meant to be opened in a browser. It is used to connect to the running EC2 instance via terminal. Use the following command from your local machine or a remote server:

- Assume the IP address is

11.111.11.111 - The downloaded key name is

razer.pem(ensure the key is moved to a hidden folder.ssh) - Your username is

user_name

ssh -i /Users/user_name/.ssh/razer.pem ubuntu@11.111.11.111

# Unprotected private key file!

After running the command:

ssh -i ~/.ssh/razer.pem ubuntu@XX.XX.XX.XX

I encountered the error: "unprotected private key file". To resolve this issue, ensure the file permissions are correctly set by running the following command:

chmod 400 ~/.ssh/razer.pem

For more detailed steps, see this guide.

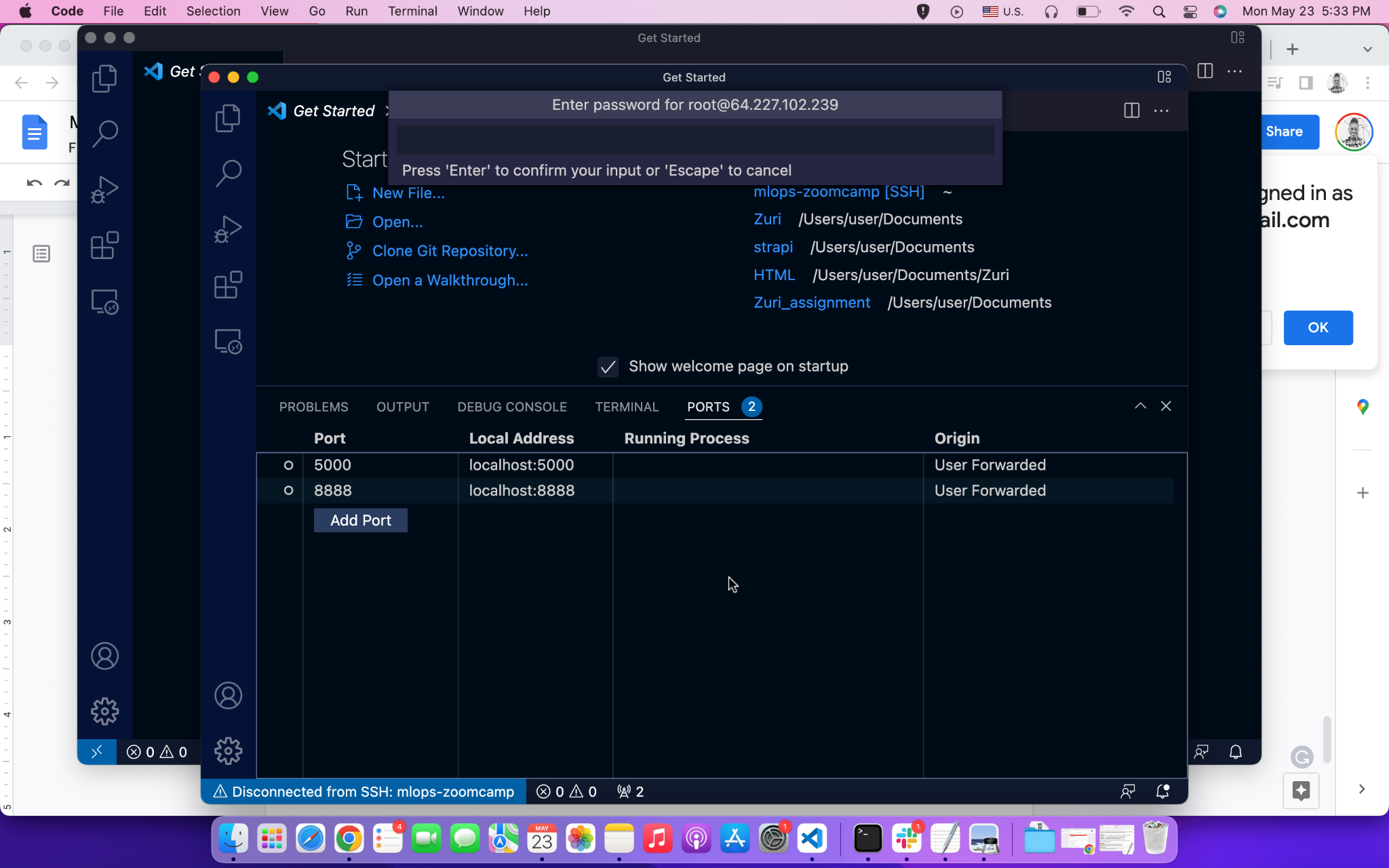

# AWS EC2 instance constantly drops SSH connection

My SSH connection to AWS cannot last more than a few minutes, whether via terminal or VS Code.

My config:

Host mlops-zoomcamp # ssh connection calling name

User ubuntu # username AWS EC2

HostName <instance-public-IPv4-addr> # Public IP, changes when instance is turned off.

IdentityFile ~/.ssh/name-of-your-private-key-file.pem # Private SSH key file path

LocalForward 8888 localhost:8888 # Connecting to internal service

StrictHostKeyChecking no

The disconnection occurs whether I SSH via WSL2 or via VS Code, often after running some code like import mlflow.

To reconnect, I need to stop and restart the instance, which assigns a new IPv4 address.

I've checked the steps at AWS's troubleshooting page: AWS SSH Connection Errors

Inbound rule should allow all IPs for SSH.

Expected Behavior:

- SSH connection should remain active while using the instance.

- Should be able to reconnect if disconnected.

Solution:

Memory Issue: Disconnections may occur if the instance runs out of memory. Use EC2's screenshot feature to troubleshoot. If it's an OS out-of-memory issue, consider:

- Using a higher compute VM with more RAM.

- Adding a swap file, which uses disk as a RAM substitute to prevent OOM errors.

- Follow Ubuntu's documentation: Ubuntu Swap FAQ.

- Alternatively, follow AWS documentation: AWS Swap File.

Timeout Issue: If connections drop due to timeouts, add the following to your local

.ssh/configfile to ping every 50 seconds:ServerAliveInterval 50

# AWS EC2: How do I handle changing IP addresses on restart?

Every time I restart my EC2 instance, I receive a different IP and need to update the config file manually.

Solution:

You can create a script to automatically update the IP address of your EC2 instance. Refer to this guide for detailed steps.

# VS Code crashes when connecting to Jupyter

Make sure to use an instance with enough compute capabilities such as a t2.xlarge. You can check the monitoring tab in the EC2 dashboard to monitor your instance.

# My connection to my GCP VM instance keeps timing out when I try to connect

If you switched off the VM instance completely in GCP, the IP address may change when it switches back on. You need to update the ssh_config file with the new external IP address. This can be done in VS Code if you have the Remote-SSH extension installed.

- Open the command palette and type

Remote-SSH: Open SSH Configuration File…. - Select the appropriate

ssh_configfile. - Edit the

HostNameto the correct IP address.

# X has 526 features, but expecting 525 features

Error:

ValueError: X has 526 features, but LinearRegression is expecting 525 features as input.

Solution:

The DictVectorizer creates an initial mapping for the features (columns). When calling the DictVectorizer again for the validation dataset, transform should be used as it will ignore features that it did not see when fit_transform was last called. For example:

X_train = dv.fit_transform(train_dict)

X_test = dv.transform(test_dict)

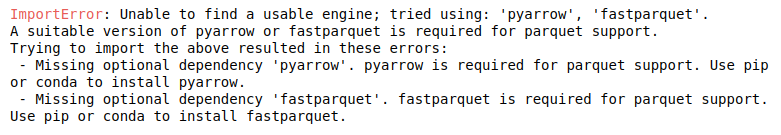

# Missing dependencies

If some dependencies are missing:

Install the following packages:

pandasmatplotlibscikit-learnfastparquetpyarrowseaborn

pip install -r requirements.txt

I have seen this error when using pandas.read_parquet(). The solution is to install pyarrow or fastparquet by running the following command in the notebook:

!pip install pyarrow

Note: If you’re using Conda instead of pip, install fastparquet rather than pyarrow, as it is much easier to install and it’s functionally identical to pyarrow for our needs.

# squared Option Not Available in mean_squared_error

The mean_squared_error function in scikit-learn no longer includes the squared parameter. To compute the Root Mean Squared Error (RMSE), use the dedicated function root_mean_squared_error from sklearn.metrics instead.

# No RMSE value in the options

The evaluation RMSE I get doesn’t figure within the options!

If you’re evaluating the model on the entire February data, try to filter outliers using the same technique you used on the train data (0 ≤ duration ≤ 60) and you’ll get an RMSE which is (approximately) in the options. Also, don’t forget to convert the columns' data types to str before using the DictVectorizer.

Another option:

- Along with filtering outliers, additionally filter on null values by replacing them with

-1. - You will get an RMSE which is (almost) the same as in the options.

- Use the

.round(2)method to round it to 2 decimal points.

Warning Deprecation

The Python interpreter warns of modules that have been deprecated and will be removed in future releases while also suggesting how to update your code. For example:

C:\ProgramData\Anaconda3\lib\site-packages\seaborn\distributions.py:2619:

FutureWarning: `distplot` is a deprecated function and will be removed in a future version. Please adapt your code to use either `displot` (a figure-level function with similar flexibility) or `histplot` (an axes-level function for histograms).

warnings.warn(msg, FutureWarning)

To suppress the warnings, you can include this code at the beginning of your notebook:

import warnings

warnings.filterwarnings("ignore")

# How to replace distplot with histplot

To replace sns.distplot with sns.histplot, you can use the following syntax:

sns.distplot(df_train["duration"])

Can be replaced with:

sns.histplot(

df_train["duration"], kde=True,

stat="density", kde_kws=dict(cut=3), bins=50,

alpha=.4, edgecolor=(1, 1, 1, 0.4),

)

This will give you an almost identical result.

# KeyError: 'PULocationID' or 'DOLocationID'

You need to replace the capital letter "L" with a small one "l".

# ImportError: Unable to find a usable engine; tried using: ‘pyarrow’, ‘fastparquet’.

To resolve this error, run the following command:

!pip install pyarrow

After successfully installing, you can delete the command.

# Reading large parquet files

When reading large parquet files, you might encounter the following error:

IndexError: index 311297 is out of bounds for axis 0 with size 131743

Here are some possible solutions:

Run as a Python Script:

- Try executing your code as a standalone Python script instead of within Jupyter Notebook.

Use PySpark Library:

- Consider using the PySpark library, which is optimized for handling large data files.

Read Parquet in Chunks:

- You can read parquet files in chunks using the pyarrow library. Reference this blog post for more details.

Using these methods may help manage and process large parquet files more efficiently.

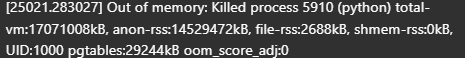

# Kernel getting killed during assignment tasks on local

If the Jupyter notebook kernel gets killed repeatedly due to out-of-memory issues when converting a Pandas DataFrame to a dictionary or other memory-intensive steps, try using Google Colab as it offers more memory.

Here's how you can proceed:

Upload the datasets to Google Drive in the folder "Colab Notebooks."

Mount the drive on Colab:

from google.colab import drive drive.mount('/content/drive')Pull the data from uploaded tables in Colab:

df_jan = pq.read_table('/content/drive/My Drive/Colab Notebooks/yellow_tripdata_2023-01.parquet').to_pandas()Complete the assignment in Colab.

Download the final assignment to your local machine and copy it into the relevant repository.

# What is the difference between label and one-hot encoding?

Two main encoding approaches are generally used to handle categorical data: label encoding and one-hot encoding.

Label Encoding: Assigns each categorical value an integer based on alphabetical order. Suitable for logical categorical data, such as a rating system or classification.

One-Hot Encoding: Creates new variables using 0s and 1s to represent original categorical data. Useful when there is no inherent order or logic to the categories.

Tools and Implementation

Sci-kit Learn:

- Dictionary Vectorizer: Handles categorical data and generates arrays based on unique instances in a DataFrame or other data structures.

OneHotEncoderclass: Specifically for applying one-hot encoding.

Pandas:

pd.get_dummies(): Similar functionality for one-hot encoding.

Note: Sometimes resetting a dataset into objects is necessary to apply one-hot encoding, especially when there is logical structuring in the data that could influence label encoding, which can be limiting for some applications.

# Distplot takes too long

First, remove the outliers (trips with unusual duration) before plotting.

# RMSE on test set too high

Problem

RMSE on the test set was too high when hot encoding the validation set using a previously fitted OneHotEncoder(handle_unknown='ignore') on the training set. In contrast, DictVectorizer yielded the correct RMSE.

Explanation

In principle, both transformers should behave identically when treating categorical features, especially in scenarios where there are no sequences of strings in each row (as in this week’s homework):

- Features are put into binary columns encoding their presence (1) or absence (0).

- Unknown categories are imputed as zeros in the hot-encoded matrix.

This discrepancy indicates that there might be a difference in how OneHotEncoder and DictVectorizer handle the data after fitting on the training set and applying to the validation set.

# ictVectorizerA: Alexey’s answer

In summary:

pd.get_dummiesor One-Hot Encoding (OHE) can produce results in different orders and handle missing data differently, potentially causing train and validation sets to have different columns.DictVectorizerwill ignore missing values (during training) and new values (during validation) in datasets.

Other sources:

<{IMAGE:image_id}>

# Why did we not use OneHotEncoder(sklearn) instead of DictVectorizer?

There are several reasons for choosing DictVectorizer over OneHotEncoder:

- Simple One-Step Process: DictVectorizer provides a straightforward method to encode both categorical and numerical features from dictionaries, outputting directly to a sparse matrix.

- Ideal for ML Pipelines: The direct output in sparse matrix format makes DictVectorizer a good fit for machine learning pipelines without needing additional preprocessing.

- Use Cases:

- Use OneHotEncoder if you need full control, are working with sklearn pipelines, or need to handle unknown categories safely.

- Use DictVectorizer when your data is in dictionary format (e.g., JSON or from APIs) and you aim for quick integration into the pipeline.

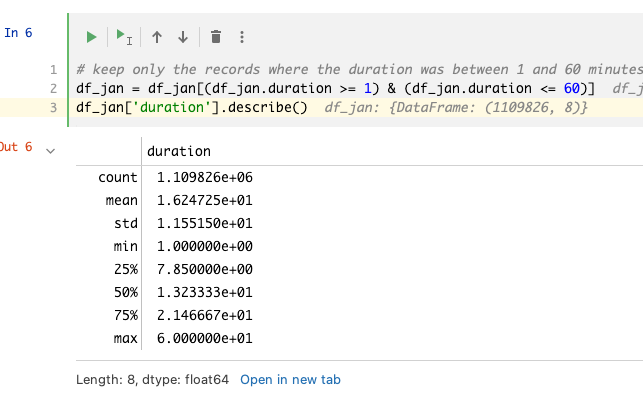

# Clipping outliers

How to check that we removed the outliers?

Use the pandas function describe() which can provide a report of the data distribution along with the statistics to describe the data. For example, after clipping the outliers using a boolean expression, the min and max can be verified using:

df['duration'].describe()

# Replacing NaNs for pickup location and drop off location with -1 for One-Hot Encoding

pd.get_dummies and DictVectorizer both create a one-hot encoding on string values. Therefore, you need to convert the values in PUlocationID and DOlocationID to string.

If you convert the values in PUlocationID and DOlocationID from numeric to string, the NaN values get converted to the string "nan". With DictVectorizer, the RMSE is the same whether you use "nan" or "-1" as the string representation for the NaN values. Therefore, the representation doesn't have to be "-1" specifically; it could also be some other string.

# Slightly different RMSE

Problem: My LinearRegression RMSE is very close to the answer but not exactly the same. Is this normal?

Answer: No, LinearRegression is a deterministic model; it should always output the same results when given the same inputs.

Check the Following:

- Ensure outliers are properly treated in both the train and validation sets.

- Verify that one-hot encoding is correctly applied by inspecting the shape of the one-hot encoded feature matrix. If it shows 2 features, there may be an issue.

- Hint: Convert drop-off and pick-up codes to the proper data format before fitting with

DictVectorizer.

- Hint: Convert drop-off and pick-up codes to the proper data format before fitting with

# Extremely low RMSE

Problem: I’m facing an extremely low RMSE score (e.g., 4.3451e-6) - what should I do?

Answer:

- Recheck your code to see if your model is inadvertently learning the target before making predictions.

- Ensure that the target variable is not included as a parameter while fitting the model. Including it can result in misleadingly low scores.

- Verify that

X_traindoes not contain any part of youry_train. This applies to the validation set as well. - Adjust your data handling to avoid data leakage between your features and the target.

# Enabling Auto-completion in Jupyter Notebook

Problem: How to enable auto-completion in Jupyter Notebook? Tab doesn’t work.

Solution:

You can enable auto-completion by running the following command:

!pip install --upgrade jedi==0.17.2

# Downloading the data from the NY Taxis datasets gives error: 403 Forbidden

Problem: While following the steps in the videos, you may encounter a 403 Forbidden error when trying to download files using wget.

Solution:

The issue occurs because the links point to files on cloudfront.net. An example of such a link is:

https://d37ci6vzurychx.cloudfront.net/trip+data/green_tripdata_2021-01.parquetInstead of downloading the dataset directly from the link, use the dataset URL in your file.

Update (27-May-2023):

- You can now download the data from the official NYC trip record page: TLC Trip Record Data.

- Go to the page, right-click, and use "copy link" to get the URL since the URL provided might change if NYC updates their system.

Example command:

wget https://d37ci6vzurychx.cloudfront.net/trip-data/green_tripdata_2021-01.parquet

# Using PyCharm & Conda env in remote development

Problem: PyCharm (remote) doesn’t see the conda execution path, preventing the use of a conda environment located on a remote server.

Solution:

On the remote server's command line, run:

conda activate envnameThen, execute:

which pythonThis will provide the Python execution path.

Use this path to add a new interpreter in PyCharm:

- Add local interpreter.

- Select system interpreter.

- Enter the path obtained from the previous step.

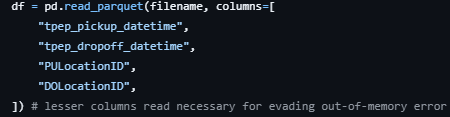

# Running out of memory

Problem: The output of DictVectorizer was consuming too much memory, causing an inability to fit the linear regression model before running out of memory on a 16 GB machine.

Solution:

- In the example for DictVectorizer on the scikit-learn website, the parameter

sparseis set asFalse. While this helps with viewing results, it greatly increases memory usage. - To address this, either set

sparse=True, or leave it at the default setting, which is alsoTrue.

By using sparse=True, memory usage will be reduced, allowing for more efficient model fitting.

# Activating Anaconda env in .bashrc

Problem: Installing Anaconda didn’t modify the .bashrc profile. This means the Anaconda environment was not activated after exiting and relaunching the Unix shell.

Solution:

For Bash:

- Initiate conda again, which will add entries for Anaconda in the

.bashrcfile.

cd YOUR_PATH_ANACONDA/bin ./conda init bash- This will automatically edit your

.bashrc.

- Initiate conda again, which will add entries for Anaconda in the

Reload:

source ~/.bashrc

# The feature size is different for training set and validation set

While working through HW1, you may notice that the feature sizes for the training and validation datasets are different. This issue often arises when using the incorrect method with a dictionary vectorizer.

Ensure you use the transform method on the premade dictionary vectorizer instead of fit_transform. Since you already have the dictionary vectorizer created, there's no need to execute the fit pipeline on the model.

# Permission denied (publickey) Error (when you remove your public key on the AWS machine)

If you encounter a "Permission denied (publickey)" error after removing your public key from an AWS machine, follow these steps:

Access your machine via Session Manager to recreate your public key. Refer to the guide for more details: Fix Permission Denied Errors.

To retrieve your old public key, use this command:

ssh-keygen -y -f /path_to_key_pair/my-key-pair.pemReplace

/path_to_key_pair/my-key-pair.pemwith the actual path to your key pair.For additional instructions on retrieving the public key, consult the AWS documentation: Retrieving the Public Key.

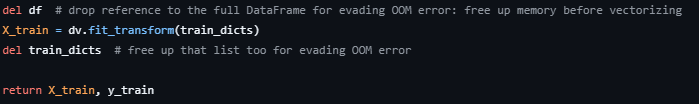

# Overfitting: Absurdly high RMSE on the validation dataset

Problem: The February dataset has been used as a validation/test dataset and stripped of the outliers in a similar manner to the train dataset (taking only the rows for the duration between 1 and 60, inclusive). The RMSE obtained afterward is in the thousands.

Solution:

- Ensure that the sparse matrix result from

DictVectorizeris not turned into anndarray. After removing that part of the code, a correct result was achieved.

<{IMAGE:image_id}>

If there are further issues, carefully review each preprocessing step to ensure consistency between training and validation datasets.

# Can’t import sklearn

If you encounter an error when trying to import sklearn, specifically:

from sklearn.feature_extraction import DictVectorizer

You can resolve it by installing scikit-learn with the following command:

!pip install scikit-learn

# Install docker in WSL2 without installing Docker Desktop

If you want to install Docker in WSL2 on Windows without Docker Desktop, follow these steps:

Install Docker

You can ignore the warnings during installation.

curl -fsSL https://get.docker.com -o get-docker.sh sudo sh get-docker.shAdd Your User to the Docker Group

sudo usermod -aG docker $USEREnable the Docker Service

sudo systemctl enable docker.serviceTest the Installation

Verify that both Docker and Docker Compose are installed successfully.

docker --version docker compose version docker run hello-worldEnsure Docker Starts Automatically

If the service does not start automatically after restarting WSL, update your

.profileor.zprofilefile with:if grep -q "microsoft" /proc/version > /dev/null 2>&1; then if service docker status 2>&1 | grep -q "is not running"; then wsl.exe --distribution "${WSL_DISTRO_NAME}" --user root \ --exec /usr/sbin/service docker start > /dev/null 2>&1 fi fi

# Zero elements in sparse matrix (AKA when dictionary vectorizer / categorical X transformation fails)

Seeing a message like:

<2855951x515 sparse matrix of type '<class 'numpy.float64'>' with 0 stored elements in Compressed Sparse Row format>

This issue might occur because your variables, intended for vectorization, were imported as floating point numbers rather than integers. This can lead to nonsensical models. To resolve this, convert your data with the following code (assuming dg is your dataframe and categorical stores the names of your variables to be vectorized):

dg[categorical] = dg[categorical].round(0).astype(int).astype(str)

# Using a docker image as development environment (Linux)

If you don’t want to install Anaconda locally and prefer not to use Codespace or a VPS, you can create and run a Docker image locally.

For this, use the following Dockerfile:

FROM docker.io/bitnami/minideb:bookworm

RUN install_packages wget ca-certificates vim less silversearcher-ag

# Uncomment the `COPY` and comment the `RUN` line if you have downloaded anaconda manually

# I did this to save bandwidth when experimenting with the image creation

RUN wget https://repo.anaconda.com/archive/Anaconda3-2022.05-Linux-x86_64.sh && bash Anaconda3-2022.05-Linux-x86_64.sh -b -p /opt/anaconda3

#COPY Anaconda3-2022.05-Linux-x86_64.sh /tmp/Anaconda3-2022.05-Linux-x86_64.sh

RUN bash /tmp/Anaconda3-2022.05-Linux-x86_64.sh -b -p /opt/anaconda3 && \

rm /tmp/Anaconda3-2022.05-Linux-x86_64.sh

ENV PATH="/opt/anaconda3/bin:$PATH" \

HOME="/app"

EXPOSE 8888

WORKDIR /app

USER 1001

ENTRYPOINT [ "jupyter", "notebook", "--ip", "0.0.0.0" ]

Build the image using:

docker build -f Dockerfile -t mlops:v0 .

Then you can run it with:

mkdir app

chmod -R 777 app

docker run --name jupyter -p 8888:8888 -v ./app:/app mlops:v0

In the logs, you will see the Jupyter URL needed to access the environment. The files you create will be stored in the app directory.

# Use uv as a package manager

There is an option to run the project without Anaconda while easily managing multiple Python versions on your machine. The new package manager, uv, is a fast and efficient one written in Rust. It's recommended for use in Python projects overall. Install guide

uv venv --python 3.9.7 # install python 3.9.7 used in the course

source .venv/bin/activate # activate the environment

python -V # should be 3.9.7

uv pip install pandas scikit-learn notebook seaborn pyarrow # install required packages

jupyter notebook # run jupyter notebook

Cleanup is straightforward. Deactivate the environment and delete the folder:

deactivate

rm -rf .venv

# I get `TypeError: got an unexpected keyword argument 'squared'` when using `mean_squared_error(..., squared=False)`. Why?

The squared parameter was added in scikit-learn 0.22. In earlier versions, it is not recognized, which causes the TypeError.

To compute RMSE in older versions:

- Use

np.sqrt(mean_squared_error(...)).

In scikit-learn 1.0 and later, you can use:

from sklearn.metrics import root_mean_squared_error as rmse

rmse_value = root_mean_squared_error(y_train, y_pred)

print('RMSE:', rmse_value)

This approach is more explicit and convenient.

# Visualizing outliers in large datasets with Seaborn: Boxplot vs Histplot

seaborn.boxplot is generally faster because it uses a smaller set of summary statistics (min, Q1, median, Q3, max) to represent the data, which requires less computational effort, especially for large datasets.

seaborn.histplot can be slower, particularly with large datasets, because it needs to bin the data and compute frequency counts for each bin, which involves more processing.

So, if speed is a concern, especially with large datasets, boxplots are typically faster than histograms.

# Reading parquet files with Pandas (pyarrow dependency)

Error

A module that was compiled using NumPy 1.x cannot be run in NumPy 2.2.4 as it may crash.

AttributeError: module 'pyarrow' has no attribute '__version__'

Solution

Downgrade the version of your numpy:

pip uninstall numpy -y

conda remove numpy --force

conda clean --all -y

conda install numpy=1.26 -y

Module 2: Experiment Tracking

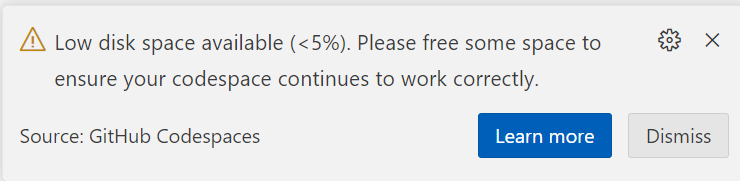

# Kernel died during Model Training on Github Codespaces

While training the model in Jupyter Notebook on GitHub Codespaces, the Jupyter kernel may die. To resolve this, upgrade the machine type in Codespaces from 8 cores to 14 cores. It is free to upgrade, but be aware that you will use more hours.

# Do we absolutely need to save data to disk? Can we use it directly from download?

Yes, you can use data directly from a URL without saving it to disk. For example, you can use Pandas to read data from a URL:

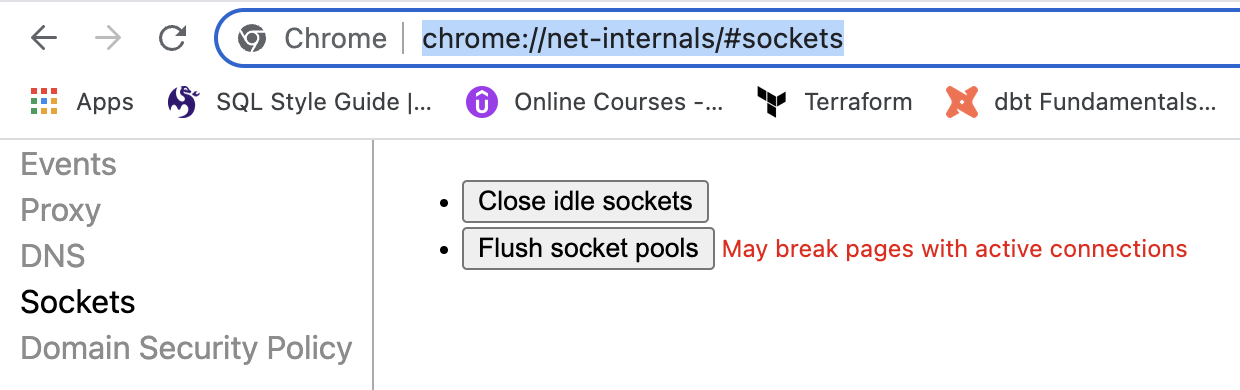

# Access Denied at Localhost:5000 - Authorization Issue

Problem

Localhost:5000 Unavailable // Access to Localhost Denied // You don’t have authorization to view this page (127.0.0.1:5000)

Solution

If you are using Chrome, follow these steps:

- Navigate to

chrome://net-internals/#sockets. - Press "Flush Socket Pools".

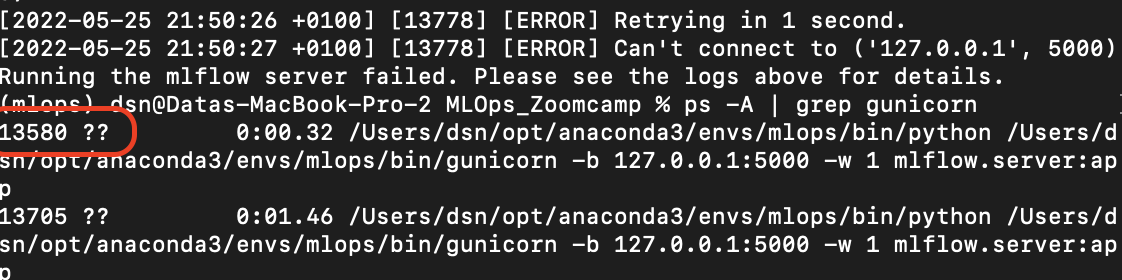

# Connection in use: ('127.0.0.1', 5000)

You have something running on the 5000 port. You need to stop it. Here are some ways to resolve the issue:

Using Terminal on Mac:

Run the command:

ps -A | grep gunicornIdentify the process ID (the first number after running the command).

Kill the process using the ID:

kill 13580where

13580represents the process number.

To Kill All Processes Using Port 5000:

sudo fuser -k 5000/tcpAlternative Command to Kill the Running Port:

kill -9 $(ps -A | grep python | awk '{print $1}')Change to a Different Port:

mlflow ui --backend-store-uri sqlite:///mlflow.db --port 5001

For more information, refer to the source.

# Could not convert string to float - ValueError

Running python register_model.py results in the following error:

ValueError: could not convert string to float: '0 int\n1 float\n2 hyperopt_param\n3 Literal{n_estimators}\n4 quniform\n5 Literal{10}\n6 Literal{50}\n7 Literal{1}'

Full Traceback:

Traceback (most recent call last):

File "/Users/name/Desktop/Programming/DataTalksClub/MLOps-Zoomcamp/2. Experiment tracking and model management/homework/scripts/register_model.py", line 101, in <module>

run(args.data_path, args.top_n)

File "/Users/name/Desktop/Programming/DataTalksClub/MLOps-Zoomcamp/2. Experiment tracking and model management/homework/scripts/register_model.py", line 67, in run

train_and_log_model(data_path=data_path, params=run.data.params)

File "/Users/name/Desktop/Programming/DataTalksClub/MLOps-Zoomcamp/2. Experiment tracking and model management/xfsub/scripts/register_model.py", line 41, in train_and_log_model

params = space_eval(SPACE, params)

File "/Users/name/miniconda3/envs/mlops-zoomcamp/lib/python3.9/site-packages/hyperopt/fmin.py", line 618, in space_eval

rval = pyll.rec_eval(space, memo=memo)

File "/Users/name/miniconda3/envs/mlops-zoomcamp/lib/python3.9/site-packages/hyperopt/pyll/base.py", line 902, in rec_eval

rval = scope._impls[node.name](*args, **kwargs)

ValueError: could not convert string to float: '0 int\n1 float\n2 hyperopt_param\n3 Literal{n_estimators}\n4 quniform\n5 Literal{10}\n6 Literal{50}\n7 Literal{1}'

Solution:

There are two plausible errors related to the hpo.py file where hyper-parameter tuning is run. The objective function should be structured as follows:

Ensure the

withstatement and thelog_paramsfunction are correctly applied to log all runs and parameters:def objective(params): with mlflow.start_run(): mlflow.log_params(params) rf = RandomForestRegressor(**params) rf.fit(X_train, y_train) y_pred = rf.predict(X_valid) rmse = mean_squared_error(y_valid, y_pred, squared=False) mlflow.log_metric('rmse', rmse)Add the

withstatement immediately before the function, just after:X_valid, y_valid = load_pickle(os.path.join(data_path, "valid.pkl"))Log parameters just after defining the

search_spacedictionary:search_space = {....} mlflow.log_params(search_space)

Logging parameters in groups can lead to issues because register_model.py expects to receive parameters individually. Ensure the objective function matches the example above.

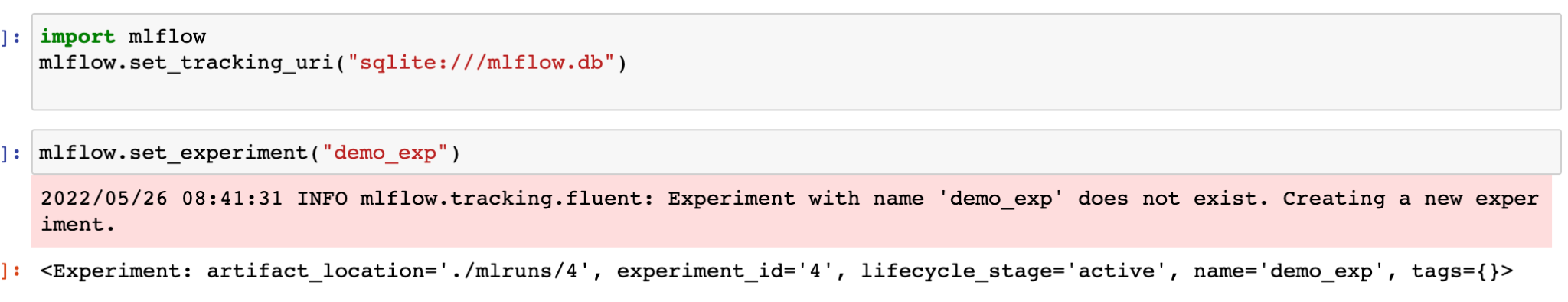

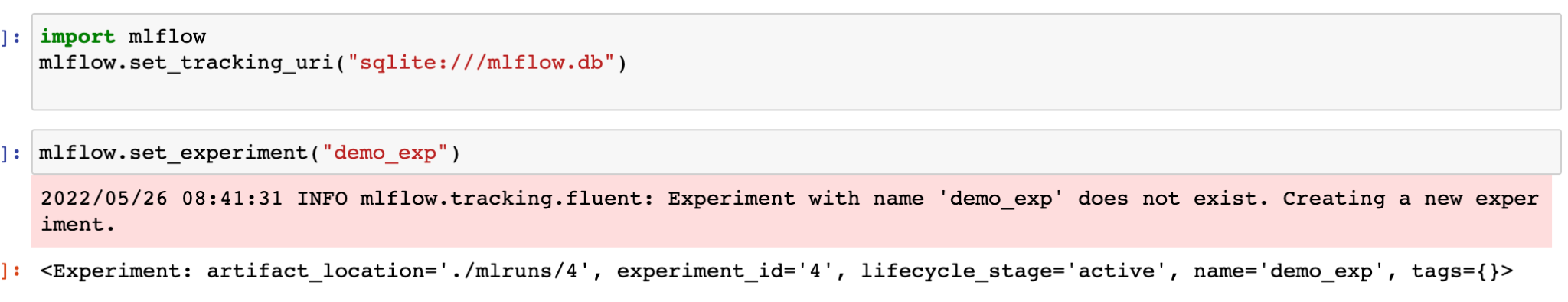

# Experiment not visible in MLflow UI

Make sure you launch the MLflow UI from the same directory as the code that is running the experiments (the same directory that contains the mlruns directory and the database that stores the experiments).

Or, navigate to the correct directory when specifying the tracking_uri.

For example:

If the

mlflow.dbis in a subdirectory calleddatabase, the tracking URI would be:sqlite:///database/mlflow.dbIf the

mlflow.dbis a directory above your current directory, the tracking URI would be:sqlite:///../mlflow.db

Another alternative is to use an absolute path to mlflow.db rather than a relative path.

You can also launch the UI from the same notebook by executing the following code cell:

import subprocess

MLFLOW_TRACKING_URI = "sqlite:///data/mlflow.db"

subprocess.Popen(["mlflow", "ui", "--backend-store-uri", MLFLOW_TRACKING_URI])

Then, use the same MLFLOW_TRACKING_URI when initializing MLflow or the client:

from mlflow.tracking import MlflowClient

client = MlflowClient(tracking_uri=MLFLOW_TRACKING_URI)

mlflow.set_tracking_uri(MLFLOW_TRACKING_URI)

# Metrics not visible in mlflow UI

I encountered the following issue: I was able to run experiments and the different model parameters were visible. However, the metrics, including the “handmade” metric rmse in the training script, were not visible (empty field).

I solved my problem by making sure to specify the “key” and “value” explicitly when using mlflow.log_metric:

mlflow.log_metric(key="rmse", value=rmse)

# Unable to create new Experiment

Following the instructions in the video did not work, even though the Jupyter notebook indicates it was successfully created.

It is recommended to set the URI to the listener directly. This discrepancy might be due to differences in the "mlflow" package versions between the video and the latest version we are using. The documentation for the latest "mlflow" package suggests setting the URI as follows:

mlflow.set_tracking_uri(uri="http://127.0.0.1:5000")

# Hash Mismatch Error with Package Installation

Problem:

When attempting to install MLFlow using pip install mlflow, an error occurs related to a hash mismatch for the Numpy package:

ERROR: THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE.

Error Details:

During the installation on 27th May 2022, the following occurred while Numpy was being installed:

Collecting numpy

Downloading numpy-1.22.4-cp310-cp310-win_amd64.whl (14.7 MB)

|██████████████ | 6.3 MB 107 kB/s eta 0:01:19

ERROR: THESE PACKAGES DO NOT MATCH THE HASHES FROM THE REQUIREMENTS FILE.

If you have updated the package versions, please update the hashes. Otherwise, examine the package contents carefully; someone may have tampered with them.

- Expected SHA256:

3e1ffa4748168e1cc8d3cde93f006fe92b5421396221a02f2274aab6ac83b077 - Got:

15e691797dba353af05cf51233aefc4c654ea7ff194b3e7435e6eec321807e90

Solution:

Install Numpy Separately:

Try installing Numpy separately using:

pip install numpy

Install MLFlow:

After successfully installing Numpy, proceed with reinstalling MLFlow:

pip install mlflow

This approach resolved the issue in this instance, although the problem may not be consistently reproducible. Be aware that similar hash mismatch errors might occur during package installations.

# How to Delete an Experiment Permanently from MLFlow UI

After deleting an experiment from the UI, it may still persist in the database. To delete this experiment permanently, follow these steps:

Install

ipython-sqlpip install ipython-sqlLoad SQL Magic Scripts in Jupyter Notebook

%load_ext sqlLoad the Database

Replace

nameofdatabase.dbwith your actual database name:%sql sqlite:///nameofdatabase.dbRun SQL Script

Use SQL commands to delete the experiment permanently. Refer to this link for a detailed guide.

# How to Update Git Public Repo Without Overwriting Changes

Problem: I cloned the public repo, made edits, committed, and pushed them to my own repo. Now I want to get the recent commits from the public repo without overwriting my own changes to my own repo. Which command(s) should I use?

Below is the Git configuration:

[core]

repositoryformatversion = 0

filemode = true

bare = false

logallrefupdates = true

ignorecase = true

precomposeunicode = true

[remote "origin"]

url = git@github.com:my_username/mlops-zoomcamp.git

fetch = +refs/heads/*:refs/remotes/origin/*

[branch "main"]

remote = origin

merge = refs/heads/main

Solution:

- Fork the original repository from DataClubsTak instead of cloning it directly.

- On GitHub, navigate to your forked repository.

- Click “Fetch and Merge” under the “Fetch upstream” menu on the main page of your own repository.

# Image size of 460x93139 pixels is too large. It must be less than 2^16 in each direction.

This issue is caused by mlflow.xgboost.autolog() in version 1.6.1 of XGBoost. To resolve this:

- Downgrade XGBoost to version 1.6.0 using the following command:

pip install xgboost==1.6.0

- Alternatively, update your requirements file to specify

xgboost==1.6.0.

# MlflowClient object has no attribute 'list_experiments'

Since version 1.29, the list_experiments method was deprecated and then removed in later versions.

You should use the following code instead:

# Register the best model

model_uri = f"runs:/{best_run.info.run_id}/model"

mlflow.register_model(model_uri=model_uri, name="RandomForestBestModel")

For more details, refer to the Mlflow documentation.

# MLflow Autolog not working

Make sure mlflow.autolog() (or framework-specific autolog) is written before with mlflow.start_run(), not after.

Also, ensure that all dependencies for the autologger are installed, including matplotlib. A warning about uninstalled dependencies will be raised.

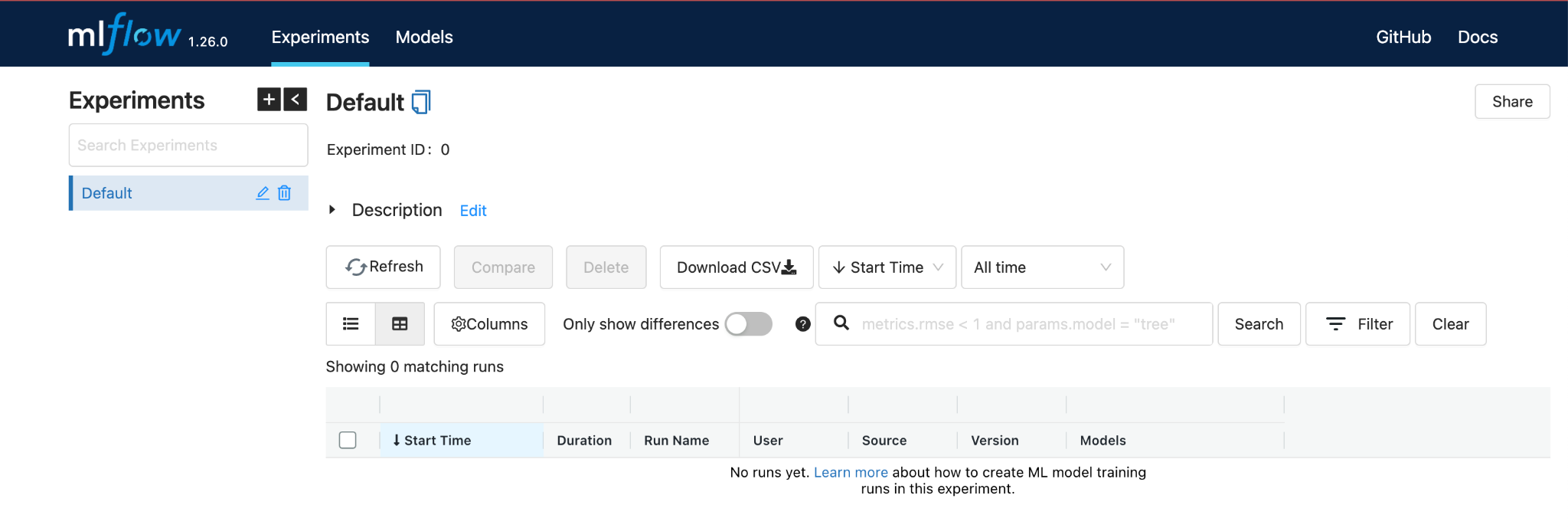

# MLflow URL ([127.0.0.1:5000](http://127.0.0.1:5000)) doesn't open.

If you’re running MLflow on a remote VM, you need to forward the port too, like we did in Module 1 for the Jupyter notebook port 8888. Simply connect your server to VS Code, as we did, and add 5000 to the PORT.

If you are running MLflow locally and 127.0.0.1:5000 shows a blank page, navigate to localhost:5000 instead.

# MLflow.xgboost Autolog Model Signature Failure

Got the same warning message as Warrie Warrie when using mlflow.xgboost.autolog():

It turned out that this was just a warning message, and upon checking MLflow UI (making sure that no "tag" filters were included), the model was actually automatically tracked in MLflow.

# MlflowException: Unable to Set a Deleted Experiment

raise MlflowException(

mlflow.exceptions.MlflowException: Cannot set a deleted experiment 'random-forest-hyperopt' as the active experiment. You can restore the experiment, or permanently delete the experiment to create a new one.

To resolve this issue, consider the following options:

Restore or Permanently Delete the Experiment: Refer to guidance on Stack Overflow for methods to permanently delete an experiment in MLflow.

Command Line Resolution: If you have deleted the experiment from the MLflow UI, run the following command in the CLI. Make sure to use the correct database filename.

mlflow gc --backend-store-uri sqlite:///backend.dbEnsure .trash is Empty: If the above command does not work and your .trash folder is already empty, confirm this by executing:

rm -rf mlruns/.trash/*Note: Ensure no files remain in

.trash/that could be interfering with the experiment reset.

# MlflowException: Unable to Set a Deleted Experiment with Postgres backend

If you’re using a Postgres backend locally or remotely and don’t want to delete the entire backend, you can run this script to permanently delete an experiment. This script assumes you have a separate env.py file to retrieve your environment variables.

import os

import sys

import psycopg2

sys.path.insert(0, os.getcwd())

from env import DB_NAME, DB_PASSWORD, DB_PORT, DB_USER

def perm_delete_exp():

connection = psycopg2.connect(

database=DB_NAME,

user=DB_USER,

password=DB_PASSWORD,

host="localhost",

port=int(DB_PORT)

)

with connection.cursor() as cursor:

queries = """

DELETE FROM experiment_tags WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted');

DELETE FROM latest_metrics WHERE run_uuid=ANY(SELECT run_uuid FROM runs WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted'));

DELETE FROM metrics WHERE run_uuid=ANY(SELECT run_uuid FROM runs WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted'));

DELETE FROM tags WHERE run_uuid=ANY(SELECT run_uuid FROM runs WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted'));

DELETE FROM params WHERE run_uuid=ANY(SELECT run_uuid FROM runs where experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted'));

DELETE FROM runs WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted');

DELETE FROM datasets WHERE experiment_id=ANY(SELECT experiment_id FROM experiments where lifecycle_stage='deleted');

DELETE FROM experiments where lifecycle_stage='deleted';

"""

for query in queries.splitlines()[1:-1]:

cursor.execute(query.strip())

connection.commit()

connection.close()

if __name__ == "__main__":

perm_delete_exp()

# No Space Left on Device - OSError[Errno 28]

You do not have enough disk space to install the requirements. Here are some solutions:

Increase EBS Volume on AWS: Follow this guide to increase the base EBS volume.

Add an External Disk on AWS: Add and configure an external disk to your instance, then configure conda installation to happen on this external disk.

Add Persistent Disk on GCP:

- Add another disk to your VM and follow this guide to mount the disk.

- Confirm the mount by running the following command in the bash shell:

df -H- Delete Anaconda and use Miniconda instead. Download Miniconda on the additional disk that you mounted.

- During the Miniconda installation, enter the path to the extra disk instead of the default disk, so that conda is installed on the extra disk.

</ANSWER>

# Homework: Parameters Mismatch in Homework Q3

I was using an old version of sklearn, which caused a mismatch in the number of parameters. In the latest version, min_impurity_split for RandomForestRegressor was deprecated. Upgrading to the latest version resolved the issue.

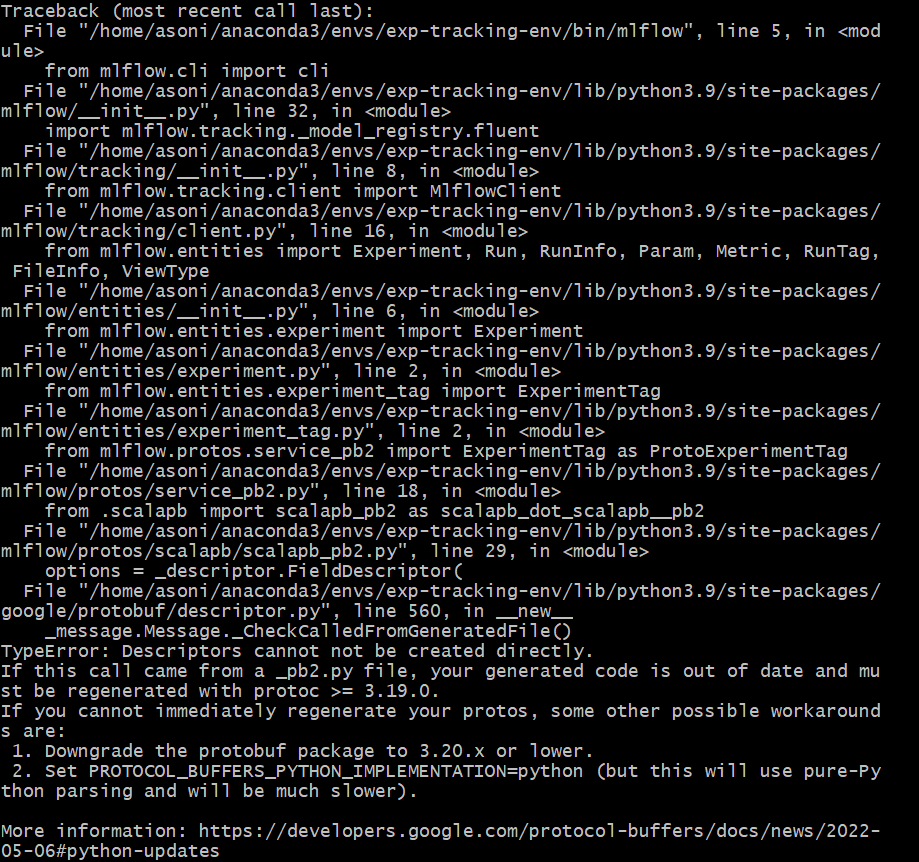

# Protobuf error when installing MLflow

Error:

I installed all the libraries from the requirements.txt document in a new environment with the following command:

pip install -r requirements.txt

Then, when I run mlflow from my terminal like this:

mlflow

I get this error:

Solution:

You need to downgrade the version of the protobuf module to 3.20.x or lower. Initially, it was version 4.21. Use the following command to install the compatible version:

pip install protobuf==3.20

After doing this, I was able to run mlflow from my terminal.

# SSH: Connection to AWS EC2 instance from local machine WSL getting terminated frequently within a minute of inactivity.

If the SSH connection from your local machine’s WSL to an AWS EC2 instance is frequently getting terminated after a short period of inactivity, you might see the following message displayed:

To fix this issue, add the following lines to your config file in the .ssh directory of your WSL environment:

ServerAliveInterval 60

ServerAliveCountMax 3

For example, after adding these lines, your SSH configuration should look somewhat like this:

Host mlops-zoomcamp

HostName 45.80.32.7

User ubuntu

IdentityFile ~/.ssh/siddMLOps.pem

StrictHostKeyChecking no

ServerAliveInterval 60

ServerAliveCountMax 3

# Setting up Artifacts folders

Please check your current directory while running the mlflow ui command. You need to run mlflow ui or mlflow server command in the right directory.

# Setting up MLflow experiment tracker on GCP

If you have problems setting up MLflow for experiment tracking on GCP, you can check these two links:

# Setuptools Replacing Distutils - MLflow Autolog Warning

Downgrade setuptools:

- Change from version 62.3.2 to 49.1.0

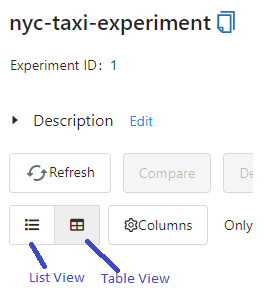

# Sorting runs in MLflow UI

I can’t sort runs in MLflow

Make sure you are in table view (not list view) in the MLflow UI.

# TypeError: send_file() unexpected keyword 'max_age' during MLflow UI Launch

Problem: When running $ mlflow ui on a remote server and attempting to open it in a local browser, the following exception occurs, and the MLflow UI page does not load.

Solution:

Uninstall Flask on your remote server by using:

pip uninstall flaskReinstall Flask with:

pip install FlaskThis issue arises because the base conda environment includes a version of Flask that's less than 1.2. Cloning this environment retains the older version, causing the error. Installing a newer version of Flask resolves the issue.

# mlflow ui on Windows FileNotFoundError: [WinError 2] The system cannot find the file specified

Problem: After successfully installing mlflow using pip install mlflow on a Windows system, running the mlflow ui command results in the error:

FileNotFoundError: [WinError 2] The system cannot find the file specified

Solution:

Add C:\Users\{User_Name}\AppData\Roaming\Python\Python39\Scripts to the PATH.

# Unsupported Operand Type Error in hpo.py

Running the command:

python hpo.py --data_path=./your-path --max_evals=50

leads to the following error:

TypeError: unsupported operand type(s) for -: 'str' and 'int'

Full Traceback:

File "~/repos/mlops/02-experiment-tracking/homework/hpo.py", line 73, in <module>

run(args.data_path, args.max_evals)

File "~/repos/mlops/02-experiment-tracking/homework/hpo.py", line 47, in run

fmin(

File "~/Library/Caches/pypoetry/virtualenvs/mlflow-intro-SyTqwt0D-py3.9/lib/python3.9/site-packages/hyperopt/fmin.py", line 540, in fmin

return trials.fmin(

File "~/Library/Caches/pypoetry/virtualenvs/mlflow-intro-SyTqwt0D-py3.9/lib/python3.9/site-packages/hyperopt/base.py", line 671, in fmin

return fmin(

File "~/Library/Caches/pypoetry/virtualenvs/mlflow-intro-SyTqwt0D-py3.9/lib/python3.9/site-packages/hyperopt/fmin.py", line 586, in fmin

rval.exhaust()

File "~/Library/Caches/pypoetry/virtualenvs/mlflow-intro-SyTqwt0D-py3.9/lib/python3.9/site-packages/hyperopt/fmin.py", line 364, in exhaust

self.run(self.max_evals - n_done, block_until_done=self.asynchronous)

Solution:

The --max_evals argument in hpo.py is not defined with a datatype, leading to it being interpreted as a string. It should be an integer to ensure the script functions correctly. Modify the argument definition as follows:

parser.add_argument(

"--max_evals",

type=int,

default=50,

help="the number of parameter evaluations for the optimizer to explore."

)

# Unsupported Scikit-Learn version

Getting the following warning when running mlflow.sklearn:

2022/05/28 04:36:36 WARNING mlflow.utils.autologging_utils: You are using an unsupported version of sklearn. If you encounter errors during autologging, try upgrading / downgrading sklearn to a supported version, or try upgrading MLflow.

Solution:

- Use scikit-learn version between 0.24.1 and 1.4.2.

Reference: MLflow Documentation

# Mlflow CLI does not return experiments

Problem

CLI commands (mlflow experiments list) do not return experiments.

Solution

You need to set the environment variable for the Tracking URI:

export MLFLOW_TRACKING_URI=http://127.0.0.1:5000

# Viewing MLflow Experiments using MLflow CLI

Problem:

After starting the tracking server, when trying to use the MLflow CLI commands as listed here, most commands can't find the experiments that have been run with the tracking server.

Solution:

Set the environment variable

MLFLOW_TRACKING_URIto the URI of the SQLite database:export MLFLOW_TRACKING_URI=sqlite:///{path to sqlite database}After setting the environment variable, you can view the experiments from the command line using commands like:

mlflow experiments searchNote: Commands like

mlflow gcmay still not get the tracking URI and need to be passed explicitly as an argument every time the command is run.

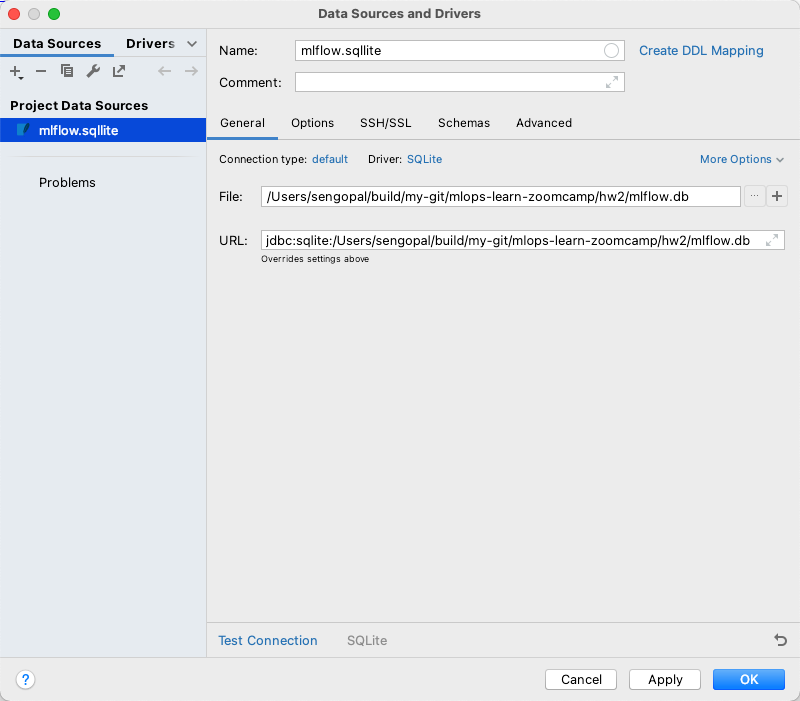

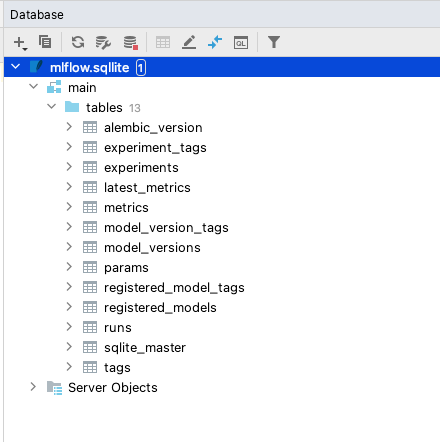

# Viewing SQLite Data Raw & Deleting Experiments Manually

All the experiment and other tracking information in MLflow are stored in an SQLite database provided while initiating the mlflow ui command. This database can be inspected using PyCharm’s Database tab by selecting the SQLite database type.

Once the connection is created, the tables can be queried and inspected using standard SQL. The same applies to any SQL-backed database such as PostgreSQL.

This approach is useful to understand the entity structure of the data being stored within MLflow and is beneficial for systematic archiving of model tracking for extended periods.

# What does launching the tracking server locally mean?

Launching the tracking server locally means starting an MLflow server on your machine for remote hosting. This setup is useful when multiple colleagues are collaborating and need to connect to the same MLflow server instead of running it individually on their laptops.

# Parameter adding in case of max_depth not recognized

Problem: Parameter was not recognized during the model registry.

Solution: Parameters should be added prior to the model registry. Use the following method to add parameters:

mlflow.log_params(params)

This way, the dictionary can be directly appended to data.run.params.

# Max_depth is not recognize even when I add the mlflow.log_params

Problem:

Max_depth is not recognized even when I add the mlflow.log_params.

Solution:

The mlflow.log_params(params) should be added to the hpo.py script. If you run it, it will append the new model to the previous run that doesn’t contain the parameters. You should either:

- Remove the previous experiment

- Change it

# AttributeError: 'tuple' object has no attribute 'tb_frame'

Problem: About week_2 homework: The register_model.py script, when copied into a Jupyter notebook, fails and produces the following error:

AttributeError: 'tuple' object has no attribute 'tb_frame'

Solution: Remove click decorators.

# WandB API error

Problem: When running the preprocess_data.py file, you encounter the following error:

wandb: ERROR api_key not configured (no-tty). call wandb.login(key=[your_api_key])

Solution:

Go to your WandB profile and navigate to user settings.

Scroll down to the “Danger Zone” and copy your API key.

Before running

preprocess_data.py, add and run the following cell in your notebook:%%bash wandb login <YOUR_API_KEY_HERE>

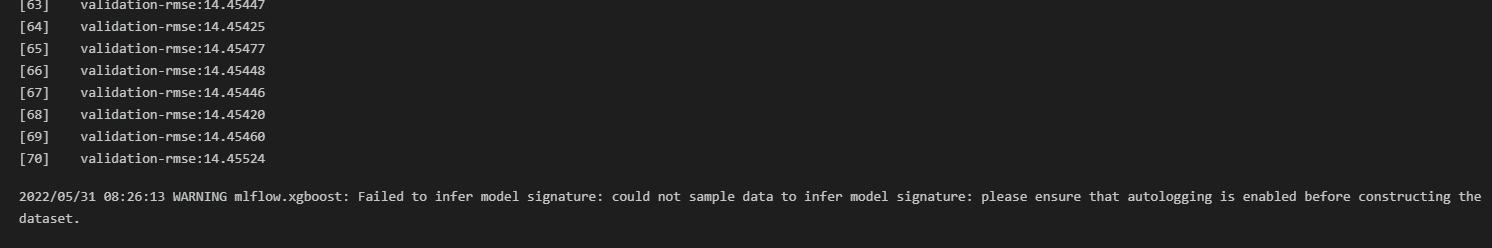

# WARNING mlflow.xgboost: Failed to infer model signature: could not sample data to infer model signature: please ensure that autologging is enabled before constructing the dataset.

Please make sure you follow the order below, enabling autologging before constructing the dataset. If you still have this issue, check that your data is in a format compatible with XGBoost.

Enable MLflow autologging for XGBoost

mlflow.xgboost.autolog()Construct your dataset

X_train, y_train = ...Train your XGBoost model

import xgboost as xgb model = xgb.XGBRegressor(...) model.fit(X_train, y_train)

# Old version of glibc when running XGBoost

Starting from version 2.1.0, XGBoost distributes its Python package in two variants:

- manylinux_2_28: For recent Linux distributions with glibc 2.28 or newer. This variant includes all features, such as GPU algorithms and federated learning.

- manylinux2014: For older Linux distributions with glibc versions older than 2.28. This variant lacks support for GPU algorithms and federated learning.

If you're installing XGBoost via pip, the package manager automatically selects the appropriate variant based on your system's glibc version. Starting May 31, 2025, the manylinux2014 variant will no longer be distributed.

This means that systems with glibc versions older than 2.28 will not be able to install future versions of XGBoost via pip unless they upgrade their glibc version or build XGBoost from source.

# wget not working

Problem

Using the wget command to download either data or Python scripts on Windows, I am using the notebook provided by Visual Studio and despite having a Python virtual environment, it did not recognize the pip command.

Solution

- Use

python -m pip, this applies to any other command as well, e.g.,python -m wget.

# Open/run github notebook(.ipynb) directly in Google Colab

Problem: Open/run GitHub notebook (.ipynb) directly in Google Colab

Solution:

- Change the domain from

github.comtogithubtocolab.com. The notebook will open in Google Colab. - Note: This only works with public repositories.

Navigating in Wandb UI became difficult to me, I had to intuit some options until I found the correct one.

Solution: Refer to the official documentation.

# Why do we use Jan/Feb/March for Train/Test/Validation Purposes?

We use this type of split approach instead of a random split to address specific needs in model evaluation, primarily focusing on seasonality and preventing data leakage.

Solution:

"Out of Time" Validations:

- Check for Seasonality:

- By using specific periods like Jan/Feb/March, we can assess if there are seasonal effects in the data.

- Example: If the RMSE for the test period is 5, but the RMSE for validation is 20, this indicates significant seasonality. This might suggest switching to Time Series approaches.

- Check for Seasonality:

Prevent Data Leakage:

- When predicting future outcomes, a "random sample" train/test split can introduce data leakage, resulting in overfitting and poor model performance in production.

- It's crucial not to use future information when predicting the present in a model context.

Approach:

- Train: January

- Test: February

- Validate: March

The validation process is essential for reporting model metrics to leadership, regulators, auditors, and for analyzing target drift in the models.

<Problem and approach discussed were provided by an internal source.>

# WARNING: mlflow.sklearn: Failed to log training dataset information to MLflow Tracking.

Problem

When using MLflow’s autolog function, you may encounter the following warning:

WARNING mlflow.sklearn: Failed to log training dataset information to MLflow Tracking. Reason: 'numpy.ndarray' object has no attribute 'toarray'

This occurs because the autolog function is attempting to log your dataset. MLflow expects the dataset to be in a pd.DataFrame format. If you’re following course code that provides a numpy.ndarray, MLflow fails as the numpy.ndarray is already an array.

Solution

Since we are not processing datasets in this zoomcamp, use the following parameter in the autolog function to prevent logging datasets:

log_datasets = False

Problem

Error when running the mlflow server on AWS CLI with an S3 bucket and POSTGRES database:

Reproducible Command:

mlflow server -h 0.0.0.0 -p 5000 --backend-store-uri postgresql://<DB_USERNAME>:<DB_PASSWORD>@<DB_ENDPOINT>:<DB_PORT>/<DB_NAME> --default-artifact-root s3://<BUCKET_NAME>

Error Message:

urllib3 v2.0 only supports OpenSSL 1.1.1+, currently

ImportError: urllib3 v2.0 only supports OpenSSL 1.1.1+, currently the 'ssl' module is compiled with 'OpenSSL 1.0.2k-fips 26 Jan 2017'. See: [GitHub](https://github.com/urllib3/urllib3/issues/2168)

Solution

Upgrade mlflow to address compatibility issues:

pip3 install --upgrade mlflow

Resolution

This process will downgrade urllib3 from version 2.0.3 to 1.26.16, ensuring compatibility with mlflow and ssl version 1.0.2. You should see the following output after the upgrade:

Installing collected packages: urllib3

Attempting uninstall: urllib3

Found existing installation: urllib3 2.0.3

Uninstalling urllib3-2.0.3:

Successfully uninstalled urllib3-2.0.3

Successfully installed urllib3-1.26.16

# ImportError: urllib3 v2.0 only supports OpenSSL 1.1.1+

If you're encountering an error while running S3 buckets, ensure to resolve the dependencies issue by downgrading urllib3 to a compatible version:

pip3 install "urllib3<1.27"

# AttributeError: 'MlflowClient' object has no attribute 'list_run_infos'

Problem: In the scenario 2 notebook, the error

AttributeError: 'MlflowClient' object has no attribute 'list_run_infos'

is thrown when running:

run_id = client.list_run_infos(experiment_id='1')[0].run_id

Solution: Use the following code instead:

run_id = client.search_runs(experiment_ids='1')[0].info.run_id

Scenario: This solution works for MLflow version 2.12.2 and might work for other recent versions as of May, 2024.

# When using Autologging, do I need to set a training parameter to track it on Mlflow UI?

No, in the official documentation it’s mentioned that autologging keeps track of the parameters even when you do not explicitly set them when calling .fit.

You can run the training, only setting the parameters you want, but you can check all the parameters in the MLflow UI.

# Hyperopt is not installable with Conda

Description

When setting up your virtual environment with

conda install --file requirements.txt

you may encounter the following error:

PackagesNotFoundError: The following packages are not available from current channels:

- hyperopt

Solution

Your conda installation might be out of date. You can update Conda with:

conda update -n base -c defaults condaIf updating does not solve the issue, consider installing the package via the Intel channel, as advised on the conda page:

conda install intel::hyperopt

# Error importing xgboost in python with OS mac: library not loaded: @rpath/libomp.dylib

To fix this error, run the following command:

brew install libomp

# Size limit when uploading to GitHub

To manage size limits effectively when uploading to GitHub, add the mlruns and artifacts directories to your .gitignore, like this:

02-experiment-tracking/mlruns

02-experiment-tracking/runnin-mflow-examples/mlruns

02-experiment-tracking/homework/mlruns

02-experiment-tracking/homework/artifacts

Module 3: Orchestration

# Why does MlflowClient no longer support list_experiments?

Older versions of MLflow used client.list_experiments(), but in recent versions, this method was replaced.

Use client.search_experiments() instead.

# Mage shortcut key to open Text Editor is not working on Windows

On Windows, use the shortcut key CTRL+WIN+..

For MacOS, the shortcut is CMD+..

# Mage: Pipeline breaks with `[Errno 2] No such file or directory: '/home/src/mage_data/{…} /.variables/{...}/output_1/object.joblib'`

- Export the pipeline as a zip file.

- Create a new Mage project.

- Import the pipeline zip to the new project.

Additionally, check the following:

- Review the logs of the upstream block that was expected to generate

object.joblib. Ensure it completed successfully. - Verify that the expected output (often named

output_1) was created and saved. - Check in the Mage UI or directly in the file system (if accessible) to confirm whether the file exists in the

.variablesdirectory for that upstream block.

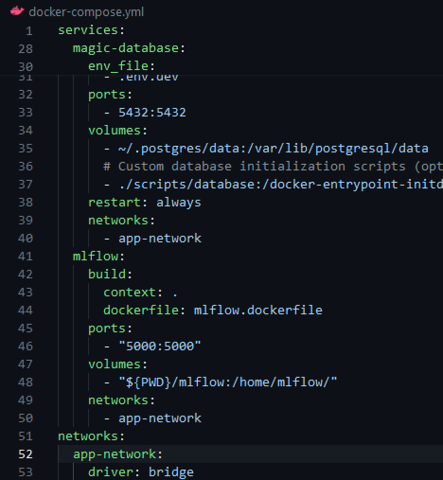

# Docker: Update docker-compose to initiate Mage

When running ./scripts/start.sh, the following error occurs:

ERROR: The Compose file './docker-compose.yml' is invalid because:

Unsupported config option for networks: 'app-network'

Unsupported config option for services: 'magic-platform'

Solution:

Download the latest version of Docker Compose

sudo curl -L "https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-composeApply executable permissions to the binary

sudo chmod +x /usr/local/bin/docker-compose

# Mage in Codespaces in a subfolder under mlops-zoomcamp repository

Issue 1: Errors such as:

[+] Running 1/1

✘ magic-database Error too many requests: You have reached your pull rate limit. You may increase the limit by authenticating and upgra...

Error response from daemon: too many requests: You have reached your pull rate limit. You may increase the limit by authenticating and upgrading: [docker.com](https://www.docker.com/increase-rate-limit)

Issue 2: Popups with different percentage values indicating space is in single digits.

Solution: It is not recommended to set up Mage as a subfolder of mlops-zoomcamp. See findings in this thread for more information.

# Mage in Codespaces

The below errors seem to occur only when using Mage in Codespaces.

Errors

Error (1)

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?Error (2)

Error response from daemon: invalid volume specification: '/workspaces/mage-mlops:/:rw': invalid mount config for type "bind": invalid specification: destination can't be '/'Solution for (1) & (2):

- Stay tuned…still testing.

- Running

docker infoanddocker –versionworks fine. - Executing

docker compose down, stopping Codespaces, and reconnecting resolved the errors, though it might not be reproducible for everyone.

Error (3)

warning: unable to access '/home/codespace/.gitconfig': Is a directorySolution (3):

This is targeted for 3.5.x Deploying with Mage. If not deploying:

Comment line #20 in

docker-compose.yml.Place a dummy empty file named

.gitconfigin your repo’s root folder.Copy it in the Dockerfile with this line, place it below line #9:

COPY .gitconfig /root/.gitconfig

The reason this happens is that when the file is missing, Docker auto-creates it as a directory instead of a file. Creating a dummy file prevents this.

# Mage updated in UI

When you see the mage version change in the UI after you’ve started the container, and you want to update, follow these steps. Read the release notes first to see if there’s a fix that affected your work and would benefit from an update.

If you want to remain in the previous version, it's also fine unless the fixes were specifically for our zoomcamp coursework (check the repository for any new instructions or PRs added).

Close the browser page.

In the terminal console, bring down the container:

docker compose downRebuild the container with the new mage image:

docker compose build --no-cacheVerify that you see:

[magic-platform 1/4] FROM docker.io/mageai/mageai:alphaThis means that the container is being rebuilt with a new version.

If the image is not updated, press

ctrl+cto cancel the process and pull the image manually:docker pull mageai/mageai:alphaThen rebuild.

Restart the docker container as before:

./scripts/start.sh

Note: This is the same sequence of steps if you want to switch to the latest tagged image instead of using the alpha image.

What does alpha and latest mean?

Latest is the fully released version ready for production use, and it has gone through verification, testing, QA, and whatever else the release cycle entails.

Alpha is the potentially buggy version with fresh new fixes and newly added features; but not yet put through the full beta test (if there’s one), integration testing, and other QA steps. Expect issues to occur.

# Mage Time Series Bar Chart Not Showing