ML Zoomcamp FAQ

Table of Contents

- General Course-Related Questions

- Module 1. Introduction to Machine Learning

- Module 1 Homework

- Module 2. Machine Learning for Regression

- Module 2 Homework

- Module 3. Machine Learning for Classification

- Module 3 Homework

- Module 4. Evaluation Metrics for Classification

- Module 4 Homework

- Module 5. Deploying Machine Learning Models

- Module 5 Homework

- Module 6. Decision Trees and Ensemble Learning

- Module 6 Homework

- Projects (Midterm and Capstone)

- Module 8. Neural Networks and Deep Learning

- Module 9. Serverless Deep Learning

- Module 10. Kubernetes and TensorFlow Serving

- Module 11. KServe

- Miscellaneous

General Course-Related Questions

# How do I submit homework?

- Do the tasks locally

- Publish your code (e.g., in your own GitHub repo)

- Submit your answers via the homework form and include the URL to your code

- You will see the answers only after the deadline

- Homeworks are in the cohorts folder, e.g. for 2025 it's

cohorts/2025 - The forms for submitting the homework are in the course management platform

# What’s new in the 2025 edition?

- Deployment module updated to FastAPI (replacing Flask) and new tools.

- Neural networks taught with PyTorch (theory videos in Keras are kept; an additional PyTorch implementation video is provided).

- Deep learning deployment uses ONNX Runtime on AWS Lambda (replacing TensorFlow Lite).

# Are Jupyter Notebooks used?

Yes. You’ll work extensively with notebooks alongside standard Python files and CLI tools.

# Do I need prior machine learning experience?

No. The course starts from first principles. We do an introduction to ML, a gentle math refresher (only what you need), and the basics of linear algebra taught via code. You can begin with zero ML background and ramp up through hands-on exercises.

# Will the repo already have all FastAPI/uv/PyTorch updates?

The repository does not lock in every update at once. Some updates for FastAPI/uv and PyTorch are released as the cohort progresses. For theory, older videos remain; for implementation, updated workshops and videos are linked (for example, the FastAPI+uv workshop and the PyTorch add-on). To find the latest materials, check the module pages for updated workshop links. If a critical update is pushed, instructors will annotate or replace the relevant material accordingly.

# How do I sign up?

In the course GitHub repository, there’s a link to sign up. Here it is: airtable.com

# When is the next cohort?

The course is available in a self-paced mode, allowing you to go through the materials anytime. If you prefer to join a cohort with other students, live cohorts start every September.

Zoomcamps are scheduled throughout the year. For more information, refer to A Guide to Free Online Courses at DataTalks.Club.

Course videos are pre-recorded and available to watch immediately. We also occasionally host office hours for live Q&A, which are recorded and made available later. You can find these recordings and pre-recorded course videos on the Course Channel’s Bookmarks or DTC’s YouTube channel.

# Should I star the GitHub repo?

Yes, please star the repository: https://github.com/DataTalksClub/machine-learning-zoomcamp/. Starring helps it trend so others can discover the course, and the instructor explicitly asks attendees to star it to boost visibility.

# How do I join Slack if the invite email didn’t arrive?

Go to DataTalks.Club, request a Slack invite, or use the manual request form (processed daily). After joining, browse channels and join #course-ml-zoomcamp.

# How do I get help if I’m stuck?

- Slack: paste your code and errors, and use threads to keep discussions organized.

- this FAQ and past office hours: check these resources for guidance before asking.

- AI assistants like ChatGPT: use them for quick guidance or brainstorming.

- Peers and instructors: they may chime in when available.

# Do I need to enroll in the course before submitting homework?

No enrollment is required to submit homework. Just log into the homework form when it opens. The Airtable registration you may see is only for announcements; actual submissions are made on the course platform forms and via your GitHub as specified in the homework guidelines.

# What if I miss a session?

Everything is recorded, so you won’t miss office hours or any content. You can ask your questions in advance, and they will be covered during the live stream. Additionally, you can ask questions in Slack.

# What other prerequisites should I have?

- Be ready to use the terminal. You’ll use Git, the command line, Docker, and basic OS tasks. These are demonstrated in the course, but you should be comfortable running commands and reading errors.

- If you’re new to any of these tools, consider a quick hands-on practice session before the course starts.

- You don’t need to master everything before day one, but a basic comfort level with the command line will help you keep up as you learn.

# How do donations/sponsorships work?

- The course is free.

- Sponsors may be added.

- Voluntary donations help too. You can donate here: https://github.com/sponsors/alexeygrigorev.

- If you have a company training budget, you can request an invoice to support the course.

# How much theory will you cover?

The bare minimum. The focus is more on practice, and we'll cover the theory only on the intuitive level.

For example, we won't derive the gradient update rule for logistic regression (there are other great courses for that), but we'll cover how to use logistic regression and make sense of the results.

# Do I need to know Python already?

Not strictly required, but you must be comfortable programming. If you know another major language (Java, JavaScript, Rust, etc.), you’ll pick up the small subset of Python we use. Expect a lot of coding and 'code-along' sessions.

# I don't know math. Can I take the course?

Math is not strictly a prerequisite to start learning machine learning (ML), but having a strong foundation in certain mathematical concepts can significantly improve your understanding and ability to work with ML models.

Yes! We'll cover some linear algebra in the course, but in general, there will be very few formulas, mostly code.

Here are some interesting videos covering linear algebra that you can already watch:

- ML Zoomcamp 1.8 - Linear Algebra Refresher from Alexey Grigorev

- The excellent playlist from 3Blue1Brown: Vectors | Chapter 1, Essence of linear algebra

Never hesitate to ask the community for help if you have any questions.

# What’s the recent pass/completion rate?

In the most recent cohort's dashboard snapshot, roughly 85% of project attempts passed.

# Will we cover Spark or big-data tooling?

No Spark in this course. The focus is on core ML engineering and deployment patterns. We do cover deployment tooling relevant to ML (e.g., Kubernetes, TensorFlow Serving, KServe) as part of the deployment modules; see Modules 5–11 for details.

# I filled the form, but haven't received a confirmation email. Is it normal?

Normally, you'd receive the email shortly after you signed up.

- Check your promotions tab in Gmail as well as spam, as the email might have been filtered there.

- If you unsubscribed from our newsletter, you won't receive course-related updates.

Don't worry, it’s not a problem. To make sure you don’t miss anything, join the #course-ml-zoomcamp channel in Slack and our telegram channel with announcements. This is sufficient to follow the course.

# How long is the course?

Approximately 4 months, but it may take longer if you want to engage in extra activities such as an additional project or writing an article.

# How much time do I need for this course?

Around ~10 hours per week.

You can see how much time people spend on the 2024 edition here.

# Will I get a certificate?

Yes, if you finish at least 2 out of 3 projects and review 3 peers’ projects by the deadline, you will get a certificate. This is what it looks like: this.

# How can I get answers to common questions?

Start with the FAQ document (general + per-module Q&A). There’s also a Zoomcamp Q&A bot in Slack—use it thoughtfully; often the FAQ or recent messages already contain your answer.

# Can this program help data engineers move into DS/ML engineering?

Yes. The program’s project-first flow, deployment modules (FastAPI/Lambda/Kubernetes), and evaluation practices map well to ML engineer roles. Practically, you’ll gain experience with end-to-end ML workflows—from data engineering pipelines and model deployment to evaluation and monitoring—using tools commonly used in DS/ML roles.

# Will I get a certificate if I missed the midterm project?

Yes, it's possible. See the previous answer.

# How much Python should I know?

Check this article. If you know everything in this article, you know enough. If not, read the article and consider joining the course Introduction to Python – Machine Learning Bootcamp.

You can also follow the free English course "Learn Python Basics for Data Analysis" on the OpenClassrooms e-learning platform: Learn Python Basics for Data Analysis - OpenClassrooms.

It's important to know some basics such as:

- How to run a Jupyter notebook

- How to import libraries (and understand what libraries are)

- How to declare a variable (and understand what variables are)

- Some important operations regarding data analysis

# Do I need any special hardware?

For the Machine Learning part, all you need is a working laptop with an internet connection. The Deep Learning part is more resource intensive, but for that you can use a cloud. We use SaturnCloud, but it can be anything else.

# I’m new to Slack and can’t find the course channel. Where is it?

Here’s how you join in Slack: https://slack.com/help/articles/205239967-Join-a-channel

- Click “All channels” at the top of your left sidebar. If you don't see this option, click “More” to find it.

- Browse the list of public channels in your workspace, or use the search bar to search by channel name or description.

- Select a channel from the list to view it.

- Click Join Channel.

Do we need to provide the GitHub link to only our code corresponding to the homework questions?

Yes. You are required to provide the URL to your repo in order to receive a grade.

# The course has already started. Can I still join it?

Yes, you can. Even though you missed the start date, you can register for the course. You won’t be able to submit some of the homeworks, but you can still take part in the course.

In order to get a certificate, you need to submit 2 out of 3 course projects and review 3 peers by the deadline. It means that if you join the course at the end of November and manage to work on two projects, you will still be eligible for a certificate.

# How do announcements work?

Announcements are posted in the Telegram channel and mirrored into Slack. It’s best to join Telegram for clean, broadcast-only updates; otherwise you’ll still see mirrored posts in Slack.

# Are homeworks required to get the certificate?

Homeworks are optional for certification, but strongly recommended to check understanding. Certification is based on projects, not homework scores.

# Can I submit the homework after the due date?

No, it’s not possible. The form is closed after the due date. But don’t worry, homework is not mandatory for finishing the course.

# Where can I find all course materials and how can I keep track of lessons and modules?

All course materials and links live in the main ML Zoomcamp GitHub repository:

- Repository: https://github.com/DataTalksClub/machine-learning-zoomcamp/

- Modules: folders for each unit, with a README/markdown per unit, plus videos and notes.

- Cohort-specific items (homework and dates) are under cohorts/2025.

- YouTube playlist: https://www.youtube.com/playlist?list=PL3MmuxUbc_hIhxl5Ji8t4O6lPAOpHaCLR contains all lessons.

- Year-specific playlists (2021–2025) contain cohort-specific streams and extras.

- Quick access tips: browse the repo, check the modules folders, and look in cohorts/2025 for cohort-specific items. The syllabus is also available in the course repo mlzoomcamp.com/#syllabus.

# What are the deadlines in this course?

For the 2025 cohort, you can find the deadlines here (it’s taken from the 2025 cohort page) or in Google Calendar.

# Submitting learning in public links

When you post about what you learned from the course on your social media pages, use the tag #mlzoomcamp. When you submit your homework, there’s a section in the form for putting the links there. Separate multiple links by any whitespace character (linebreak, space, tab, etc).

- For posting the learning in public links, you get extra scores.

- The number of scores is limited to 7 points: if you put more than 7 links in your homework form, you’ll get only 7 points.

- The same content can be posted to 7 different social sites and still earn you 7 points if you add 7 URLs per week.

For midterms/capstones, the awarded points are doubled as the duration is longer. So for projects, the points are capped at 14 for 14 URLs.

# Can I share my answers of the Homework with the community to compare before I submit them?

We kindly ask you not to share your answers.

# Can I finish early and get the certificate in under four months?

No. Project timelines structure the cohort. The earliest certificates typically land around January (after Capstone 1/2 windows and reviews).

# Can I skip topics I already know?

Yes. All lesson content is optional; only the projects are mandatory. Move ahead at your own pace (you don’t need to wait for a “module start” date).

# Are lessons live or recorded?

Core lessons are pre-recorded and already available. Occasional live streams (like the launch/Q&A) happen, but there are no weekly office hours at this time. For help, please use the recorded office hours from prior years and check the FAQ for related guidance.

# What are the Slack “house rules”?

Slack house rules:

- Ask course questions in

#course-ml-zoomcamp(not#general). - Use threads to reply.

- Paste text/code instead of screenshots or phone photos.

- Don’t tag instructors; many peers can help and instructors see messages anyway.

- Keep the channel tidy and on-topic.

# Which tools do we use for environments: uv or Anaconda?

- Install Python easily with Anaconda (especially on Windows).

- Use uv for virtual environments and package installs (recommended over conda for this course).

- The uv + FastAPI workshop is included in Module 5.

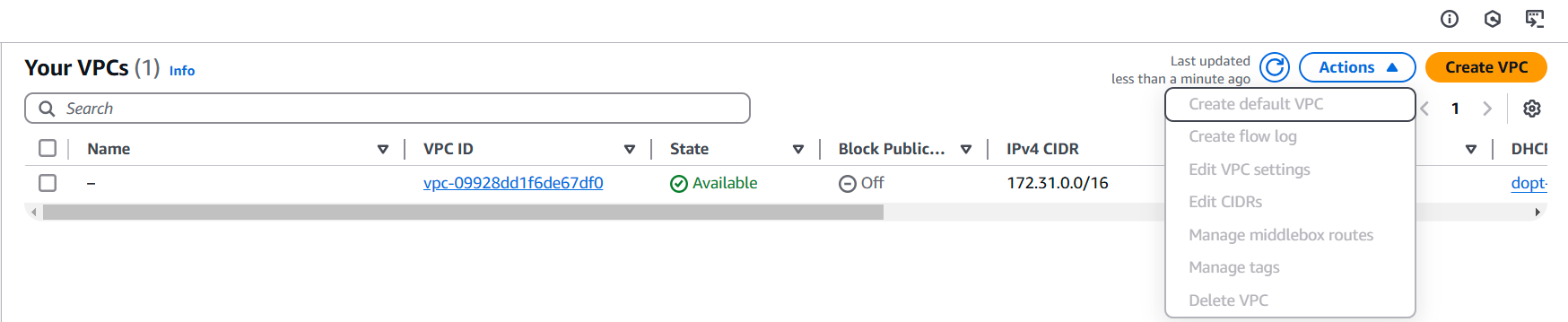

# Which cloud provider is used?

Examples use AWS (you should have or create an AWS account). Concepts transfer to other clouds (GCP/Azure) with minor adjustments.

# Is system design included?

There is no dedicated system-design module. The course emphasizes end-to-end ML projects and deployments (web services, Lambda, Kubernetes). You will learn by building and deploying models rather than focusing on standalone system-design concepts.

# Is there any advantage to high homework/leaderboard scores?

Indirectly. The leaderboard highlights active learners (correct answers + learning-in-public links). That visibility helps with networking and recruiter attention, even though the certificate itself is pass/fail.

# How can I show business impact from ML projects? Does the course teach this?

The course focuses on building and deploying real ML services, which gives you practical, portfolio-worthy projects. Business impact depends on the domain context and is best learned on the job. By the end, you’ll have a visible, high-quality portfolio you can discuss with employers. For each project, consider the problem, approach, measurable outcomes, and a clear narrative to make the impact easy to communicate in interviews.

# What strategy do you recommend for an unemployed SWE pivoting to ML/AI soon?

- Ship projects and learn in public daily (posts, blogs, code).

- Publish everything on GitHub, write about your approach/results, engage on Slack/LinkedIn, and build a network.

- Use the course projects and optional activities (Kaggle, articles) as portfolio centerpieces.

# Why learn traditional ML if LLMs can do it for me?

Assistants are great accelerators, but you still need conceptual understanding to debug, adapt, and own your systems when AI tools make mistakes or hit limits.

The course teaches you to build, reason about, and deploy ML systems you control.

Module 1. Introduction to Machine Learning

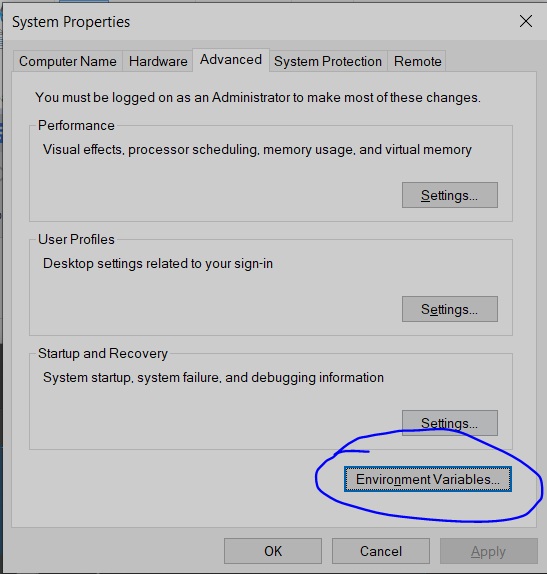

# wget is not recognized as an internal or external command

If you encounter the error "wget is not recognized as an internal or external command", you need to install it.

On Ubuntu, run:

sudo apt-get install wgetOn Windows, you can use Chocolatey:

choco install wgetOr download a binary from here and add it to your PATH (e.g.,

C:/tools/).On Mac, use Homebrew:

brew install wget

Alternatively, you can use Python libraries:

Python

wgetlibrary:Install it first:

pip install wgetThen, in your Python code:

import wget wget.download("URL")Using

pandasto read a CSV directly from a URL:import pandas as pd url = "https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv" df = pd.read_csv(url)Valid URL schemes include http, ftp, s3, gs, and file.

Bypassing HTTPS checks (if needed):

import ssl ssl._create_default_https_context = ssl._create_unverified_contextUsing Python's

urllibfor downloading files:import urllib.request url = "https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv" urllib.request.urlretrieve(url, "housing.csv")The

urlretrieve()function allows you to download files from URLs and save them locally. It is part of the standard Python libraryurllib.request, available on all devices and platforms.

# Downloading a csv file inside notebook

The best way is to use pandas and give it the URL directly:

url = 'https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv'

df = pd.read_csv(url)

You can also execute cmd/bash commands inside Jupyter:

!wget https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv

The exclamation mark ! lets you execute shell commands inside your notebooks. This works for shell commands such as ls, cp, mkdir, mv, etc.

For instance, if you then want to move your data into a data directory alongside your notebook-containing directory, you could execute the following:

!mkdir -p ../data/

!mv housing.csv ../data/

# Windows: WSL and VS Code

If you have a Windows 11 device and would like to use the built-in WSL to access Linux, you can use the Microsoft Learn link Set up a WSL development environment | Microsoft Learn.

To connect this to VS Code, download the Microsoft verified VS Code extension ‘WSL’. This will allow you to remotely connect to your WSL Ubuntu instance as if it were a virtual machine.

# Uploading the homework to Github

If you encounter the following error when trying to use Git for the first time:

error: src refspec master does not match any

error: failed to push some refs to 'https://github.com/XXXXXX/1st-Homework.git'

Solution:

Make an initial commit using:

git commit -m "initial commit"Push to the main branch instead:

git push origin main

For a comprehensive guide on using GitHub, visit GitHub Quickstart.

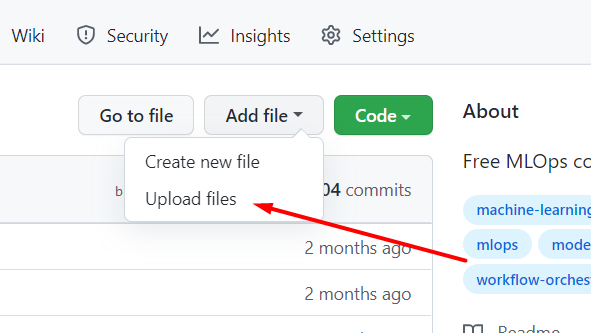

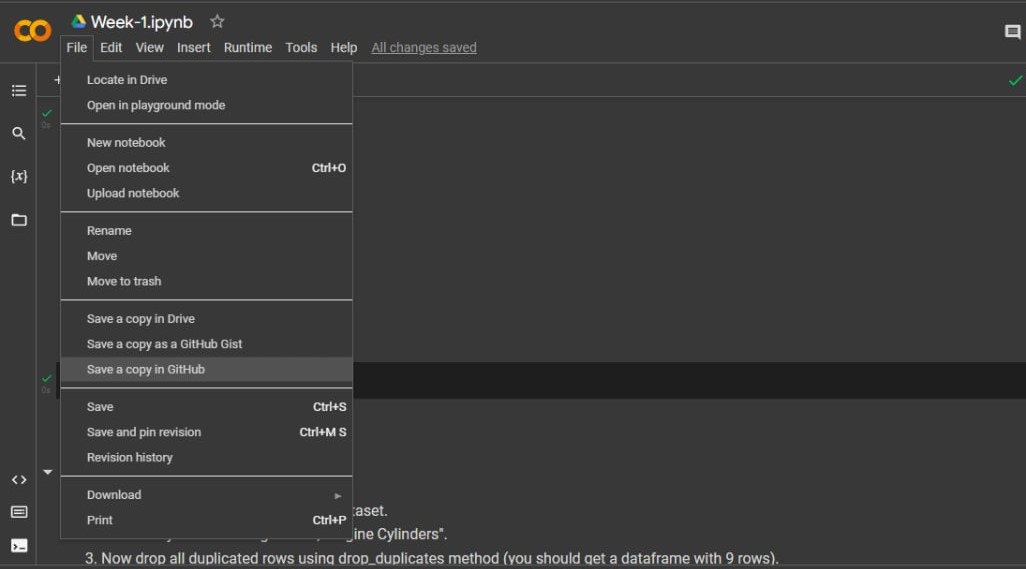

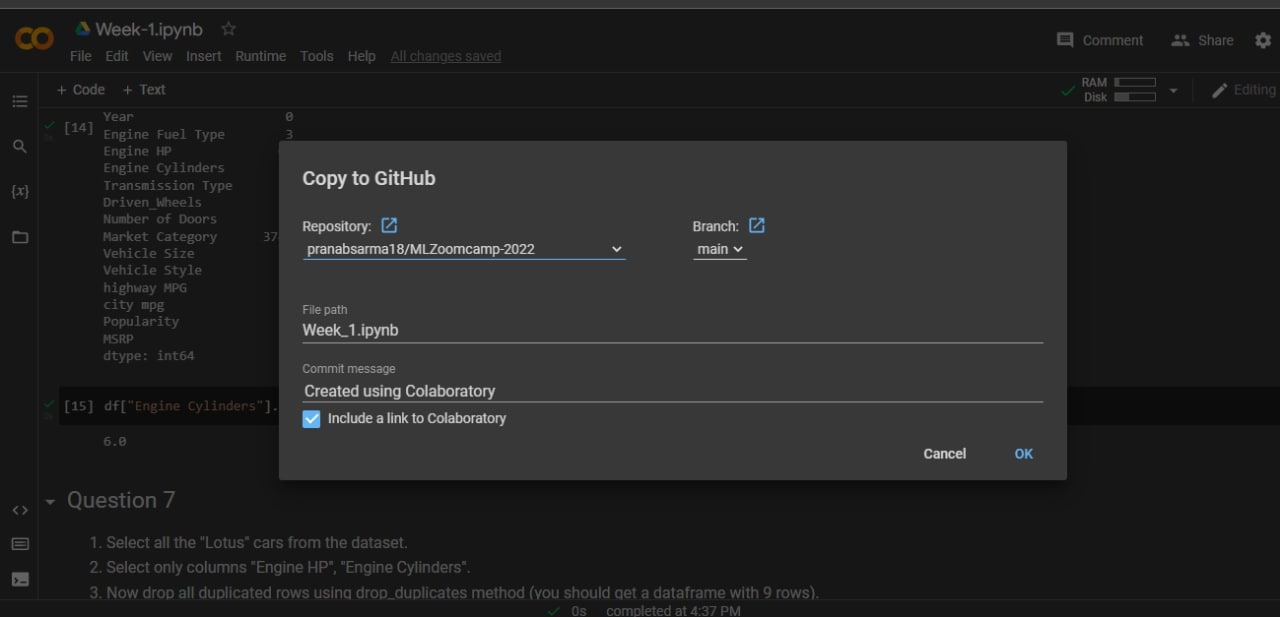

You can also use GitHub's "upload file" feature, or share your Google Colab notebooks directly to GitHub:

# Singular Matrix Error

I'm trying to invert the matrix but I got an error that the matrix is singular.

The singular matrix error is caused because not every matrix can be inverted. In particular, it happens when dealing with multiplication (using the method .dot) since multiplication is not commutative. X.dot(Y) is not necessarily equal to Y.dot(X). Respect the order; otherwise, you get the wrong matrix.

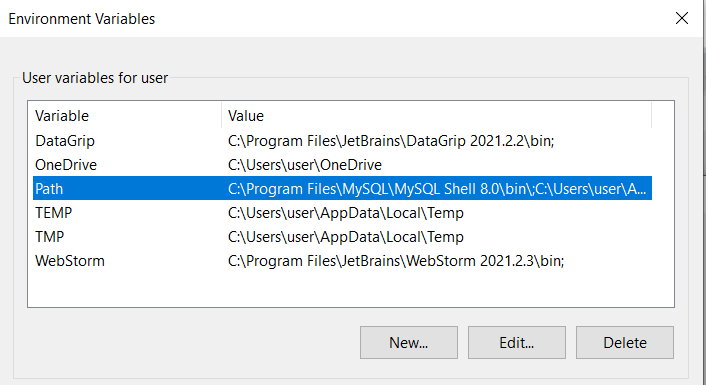

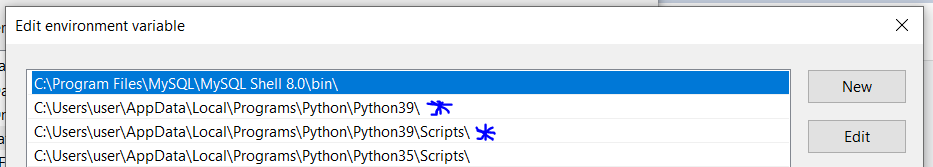

# Conda is not an internal command

If you encounter an issue where the command:

conda create -n ml-zoomcamp python=3.13

doesn't work, try the following solutions:

Use Anaconda Prompt:

- If you are on Windows, use Anaconda's own terminal called "Anaconda Prompt". This should automatically configure your environment to recognize Conda commands.

Install Anaconda or Miniconda:

- If you do not have Anaconda or Miniconda installed, download and install one of them first.

Note: Any version of Python starting from 3.10 should be suitable.

# Read-in the File in Windows OS

How do I read the dataset with Pandas in Windows?

I used the code below but it's not working:

df = pd.read_csv('C:\Users\username\Downloads\data.csv')

Unlike Linux/Mac OS, Windows uses the backslash (\) to navigate the files, which causes a conflict with Python. In Python, the \ is used for escape sequences, e.g., \n for a new line or \t for a tab. To avoid this issue, add an r before the file path to treat it as a raw string:

df = pd.read_csv(r'C:\Users\username\Downloads\data.csv')

# '403 Forbidden' error message when you try to push to a GitHub repository

To resolve a '403 Forbidden' error when pushing to a GitHub repository, follow these steps:

Check the current remote URL configuration by running:

git config -l | grep urlThe output should be similar to:

remote.origin.url=https://github.com/github-username/github-repository-name.gitChange the URL format to include your GitHub username:

git remote set-url origin "https://github-username@github.com/github-username/github-repository-name.git"Verify the change is reflected using the command in step 1. Make sure the URL is correctly updated.

# Git: Fatal: Authentication failed for https://github.com/username

I encountered a problem when trying to push code from Git Bash:

remote: Support for password authentication was removed on August 13, 2021.

remote: Please see https://docs.github.com/en/get-started/getting-started-with-git/about-remote-repositories#cloning-with-https-urls for information on currently recommended modes of authentication.

fatal: Authentication failed for 'https://github.com/username'

Solution:

- Create a personal access token from your GitHub account.

- Use this token to authenticate when you push your changes.

For more details, see the documentation on generating a new SSH key and adding it to the SSH agent.

# Kaggle: wget: unable to resolve host address raw.githubusercontent.com

In Kaggle, when you attempt to !wget a dataset from GitHub or any other public repository, you might encounter the following error:

--2022-09-17 16:55:24-- https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... failed: Temporary failure in name resolution.

wget: unable to resolve host address 'raw.githubusercontent.com'

Solution:

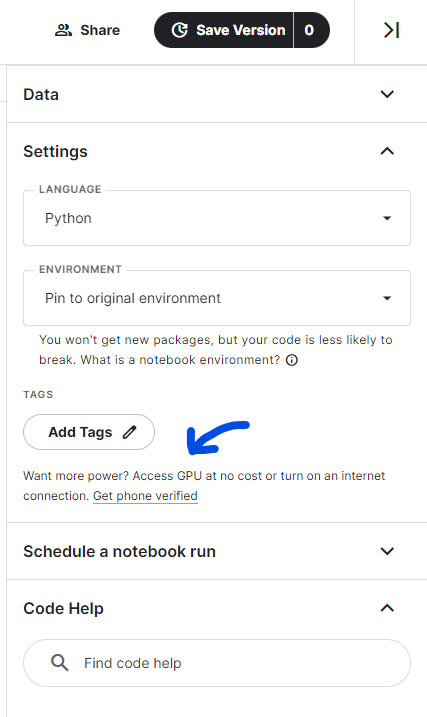

- In your Kaggle notebook settings, enable internet access for your session. This option is found in the settings panel on the right-hand side of the Kaggle screen.

- You will need to verify your phone number to confirm you are not a bot.

# Setting up an environment using VS Code

I found this video quite helpful: Creating Virtual Environment for Python from VS Code

Native Jupyter Notebooks Support in VS Code

In VS Code, you can have native Jupyter Notebooks support, i.e., you do not need to open a web browser to code in a Notebook. If you have port forwarding enabled, run a jupyter notebook command from a remote machine, and have a remote connection configured in .ssh/config (as Alexey’s video suggests), VS Code can execute remote Jupyter Notebook files on a remote server from your local machine: Visual Studio Code Jupyter Notebooks.

Git Support in VS Code

You can work with GitHub from VS Code. Staging and commits are easy from the VS Code’s UI:

# Port-Forwarding with SSH

If you prefer using the terminal for port forwarding, configure it in your SSH config file.

Open your SSH config file:

nano ~/.ssh/configAdd the following line to forward your Jupyter server:

LocalForward 8888 localhost:8888

# Conda Environment Setup: Do we need to run 'conda create' and 'conda activate' every time?

To set up a Conda environment for the project:

Initial Setup: Run the following command only once to create the environment:

conda create -n ml-zoomcampActivating Environment: Each time you want to work on the project, activate the environment:

conda activate ml-zoomcampExporting Environment: To export your existing environment to a YAML file:

conda env export > environment.ymlRecreating Environment: Use the YAML file to recreate the environment:

conda env create -f environment.yml

# What does pandas.DataFrame.info() do?

It prints the information about the dataset, including:

- Index datatype

- Number of entries

- Column information with not-null count and datatype

- Memory usage by the dataset

We use it as:

df.info()

# NameError: name 'np' is not defined

If you're using numpy or pandas, make sure to import the libraries before using them:

import pandas as pd

import numpy as np

# How to select column by dtype

To select columns by data type, you can use the following methods:

To get columns with numeric data:

df.select_dtypes(include=np.number).columns.tolist()To get columns with object (string) data:

df.select_dtypes(include='object').columns.tolist()

# How to identify the shape of dataset in Pandas

To identify the shape of a dataset in Pandas, you can use the .shape attribute:

df.shape: Returns a tuple representing the dimensionality of the DataFrame.df.shape[0]: Returns the number of rows.df.shape[1]: Returns the number of columns.

You can also use the built-in len function to find the total number of rows:

len(df)

# Error launching Jupyter notebook

If you encounter the error below when launching a Jupyter notebook in a new environment:

ImportError: cannot import name 'contextfilter' from 'jinja2' (anaconda\lib\site-packages\jinja2\__init__.py)

Follow these steps:

Switch to the main environment.

Run the following command:

pip install nbconvert --upgrade

# wget hangs on MacOS Ventura M1

Executing the following command hangs on MacOS Ventura M1:

wget https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv

If you encounter this and see IPv6 addresses in the terminal, follow these steps:

- Go to System Settings.

- Select Network.

- Choose your network connection and click Details.

- Set Configure IPv6 to Manually.

- Click OK.

- Try the command again.

# Using macOS and having trouble with WGET

Wget doesn't ship with macOS, but you can use curl as an alternative.

Example command:

curl -o ./housing.csv https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv

Explanation:

- curl: A utility for retrieving information from the internet.

- -o: Specifies the output filename for the file being downloaded.

- filename: Your choice for naming the file.

- URL: The web address from which

curlwill download the data and save it using the specified filename.

For more information, you can refer to the Curl Documentation

# How to output only a certain number of decimal places

You can use the round() function or f-strings:

Using

round()function:round(number, 4) # This will round number up to 4 decimal placesUsing f-strings:

print(f'Average mark for the Homework is {avg:.3f}') # Formats the number to 3 decimal placesUsing

pandas.Series.roundif you need to round values in a whole Series:See the documentation for more information: pandas.Series.round

# Can’t get Jupyter running locally on your machine?

If you’re struggling to get a Jupyter notebook running locally on your machine or have other issues (like only having a cellphone available), consider using the following online platforms that don't require installation:

# Can I start Module 1 now and move ahead faster?

Yes. Lessons are already available. You can proceed to later modules without waiting for official start dates.

# Is it mandatory to use Python version 3.11?

No, Python 3.11 is not strictly mandatory for the course. You have flexibility in choosing your Python version, but here are some important considerations:

Recommended versions: (as of 2025)

- Python 3.10 or later is recommended for best compatibility

- Python 3.11 and 3.12 work well with all course materials

- Python 3.13 should also work fine for most use cases

Version compatibility check: You can verify if your Python version is still supported and secure by checking: https://endoflife.date/python

This website shows which Python versions are:

- Currently supported with security updates

- In maintenance mode (security fixes only)

- End-of-life (no longer receiving updates)

Why version matters:

- Newer Python versions include performance improvements and new features

- Some libraries used in the course may require minimum Python versions

- Using a supported version ensures you receive security updates

- Consistency with course materials helps avoid compatibility issues

How to check your version:

python --version

# or

python3 --version

As long as you're using Python 3.10 or later and it's not end-of-life, you should be able to complete all course exercises successfully.

Module 1 Homework

# Floating Point Precision

I was doing Question 7 from Week 1 Homework and with step 6: Invert XTX. I created the inverse. Now, an inverse when multiplied by the original matrix should return an identity matrix. But when I multiplied the inverse with the original matrix, it gave a matrix like this:

Inverse * Original:

[[ 1.00000000e+00 -1.38777878e-16]

[ 3.16968674e-13 1.00000000e+00]]

Solution:

It's because floating point math doesn't work well on computers as shown here: https://stackoverflow.com/questions/588004/is-floating-point-math-broken

# How to avoid Value errors with array shapes in homework?

First of all, use np.dot for matrix multiplication. When you perform matrix-matrix multiplication, remember that the order of multiplication is crucial and affects the result.

Dimension Mismatch

To perform matrix multiplication, the number of columns in the first matrix should match the number of rows in the second matrix. Rearrange the order to satisfy this condition.

# Homework Q5: How to replace NaNs with the average?

You would first get the average of the column and save it to a variable, then replace the NaN values with the average variable.

This method is called imputing - when you have NaN/null values in a column, but you do not want to get rid of the row because it has valuable information contributing to other columns.

# Homework Q5: Why the mode returns a Series instead of a single value?

When you calculate the mode using the mode() function in pandas, the function always returns a Series. This design choice allows mode() to handle cases where there may be multiple modes (i.e., multiple values with the same highest frequency). Even when there is only one mode, the function will still return a Series with that single value.

If you are certain that your column has only one mode and you want to extract it as a single value, you can access the first element of the Series returned by mode():

single_mode_value = your_dataframe['your_column'].mode()[0]

# Question 7: Mathematical formula for linear regression

In Question 7, we are asked to calculate the following:

X^T X(X^T X)^{-1}w = (X^T X)^{-1} X^T y

The initial problem w = X^{-1} y can be solved by this, where a matrix X is multiplied by some unknown weights w resulting in the target y.

Additional Reading and Videos

# Homework Q7: Final multiplication not having 5 column

This is most likely because you interchanged the first step of the multiplication.

Ensure you use:

- Correct:

XTX = XT X - Instead of incorrect:

XTX = X XT

# Homework Q7: Multiplication operators.

Matrix multiplication, such as matrix-matrix or matrix-vector multiplication, is often represented using the * operator. However, in NumPy, it is performed using the @ operator or np.matmul(). The * operator in NumPy is used for element-wise multiplication, also known as the Hadamard product).

For matrix-matrix multiplication, using the @ operator or np.matmul() is preferred, as noted in the NumPy documentation.

When multiplying by a scalar, it's preferred to use numpy.multiply() or the * operator.

References:

# Homework Q7: What do the weights represent?

The weight vector, w, contains the coefficients for a linear model fit between the target variable, y, and the input features in X, with the model estimate of y, y_est, defined as follows:

$$y_{est} = w[0]X[0] + w[1]X[1]$$

where the values in brackets refer to each column of the feature matrix, X, and the corresponding row of the weight vector, w. Each value in w describes the slope of the trend line that fits y the best for each feature. As we'll learn in Module 2, least squares yields a "best" fit that minimizes the squared difference between y and y_est. The weights, w, can be checked to see if they're reasonable by multiplying X by the weight vector, w:

$$y_{est} = X.dot(w)$$

This should produce a vector, y_est that is similar, plus or minus some error, to the original target variable, y.

Module 2. Machine Learning for Regression

# How to avoid accidentally pushing CSV files

To avoid accidentally pushing CSV files (or any specific file type) to a Git repository, you can use a .gitignore file.

Add a rule to ignore CSV files by including:

*.csvIf the CSV files have already been committed, you can remove them from Git tracking but keep them locally by using the command:

git rm --cached filename.csv

# Checking long tail of data

To analyze the long tail of data, you can use a histogram or check skewness and descriptive statistics.

Using Histogram

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Load the data

url = 'https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv'

df = pd.read_csv(url)

# EDA

sns.histplot(df['median_house_value'], kde=False)

plt.show()

Check Skewness and Descriptive Statistics

# Describe the 'median_house_value'

print(df['median_house_value'].describe())

# Calculate the skewness of the 'median_house_value' variable

skewness = df['median_house_value'].skew()

# Print the skewness value

print("Skewness of 'median_house_value':", skewness)

# LinAlgError: Singular matrix

It’s possible that when you follow the videos, you’ll get a Singular Matrix error. This will be explained in the Regularization video. Don’t worry, it’s normal to encounter this.

You might also receive this error if you invert matrix X more than once in your code.

# Getting NaNs after applying .mean()

I was using for loops to apply RMSE to a list of y_val and y_pred. However, the resulting RMSE was all NaN.

I discovered that the issue occurred during the mean calculation step in the RMSE function, after squaring the error. There were NaNs in the array, which I traced back to the initial data splitting step. I had only used fillna(0) on the training data, not on the validation and test data.

The problem was resolved by applying fillna(0) to all datasets (train, validation, and test). My for loops now successfully compute RMSE for all seed values.

# Target variable transformation

Why should we transform the target variable to logarithm distribution? Do we do this for all machine learning projects?

Only if you see that your target is highly skewed. The easiest way to evaluate this is by plotting the distribution of the target variable.

Transforming to a logarithmic scale can help address skewness and improve the distribution of your data set.

For more information, you can refer to Skewness on Wikipedia.

# Loading the dataset directly through Kaggle Notebooks

To load a dataset in Kaggle Notebooks, you can use the following command. Remember that the ! before wget is essential.

!wget https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv

Once the dataset is loaded onto the Kaggle Notebook server, it can be read using the following pandas command:

df = pd.read_csv('housing.csv')

# Filter a dataset by using its values

We can filter a dataset by using its values as shown below:

# Using OR condition

df = df[(df['ocean_proximity'] == '<1H OCEAN') | (df['ocean_proximity'] == 'INLAND')]

You can use | for 'OR', and & for 'AND'.

Alternative method:

# Using isin()

df = df[df['ocean_proximity'].isin(['<1H OCEAN', 'INLAND'])]

# Alternative way to load the data using requests

Here's another way to load a dataset using the requests library:

import requests

url = 'https://raw.githubusercontent.com/alexeygrigorev/datasets/master/housing.csv'

response = requests.get(url)

if response.status_code == 200:

with open('housing.csv', 'wb') as file:

file.write(response.content)

else:

print("Download failed.")

# Null column is appearing even if I applied .fillna()

When creating a duplicate of your dataframe, if you do the following:

X_train = df_train

X_val = df_val

You're still referencing the original variable. This is called a shallow copy. To ensure that no references are attaching both variables and to keep a copy of the data, create a deep copy:

X_train = df_train.copy()

X_val = df_val.copy()

# Can I use Scikit-Learn’s train_test_split for this week?

Yes, you can. Here we implement it ourselves to better understand how it works, but later we will only rely on Scikit-Learn’s functions. If you want to start using it earlier — feel free to do it.

# Can I use LinearRegression from Scikit-Learn for this week?

Yes, you can. We will also do that next week, so don’t worry, you will learn how to do it.

# Using Scikit-Learn for regression with and without regularization

What are the equivalents in Scikit-Learn for linear regression with and without regularization used in week 2?

Without Regularization:

sklearn.linear_model.LinearRegression

With Regularization:

sklearn.linear_model.Ridge

For more information, you can refer to the Scikit-Learn documentation on linear models:

# Why linear regression doesn’t provide a “perfect” fit?

Linear regression often provides a good approximation of the underlying relationship but rarely achieves a "perfect" fit in real-world applications.

Q: Why is y_pred different from y?

In lesson 2.8, the question arises: after training X_train to get the weights, shouldn't multiplying by X_train give exactly y?

A: Linear regression is a simple model and should not fit 100%, as this would indicate overfitting. Consider a single feature X:

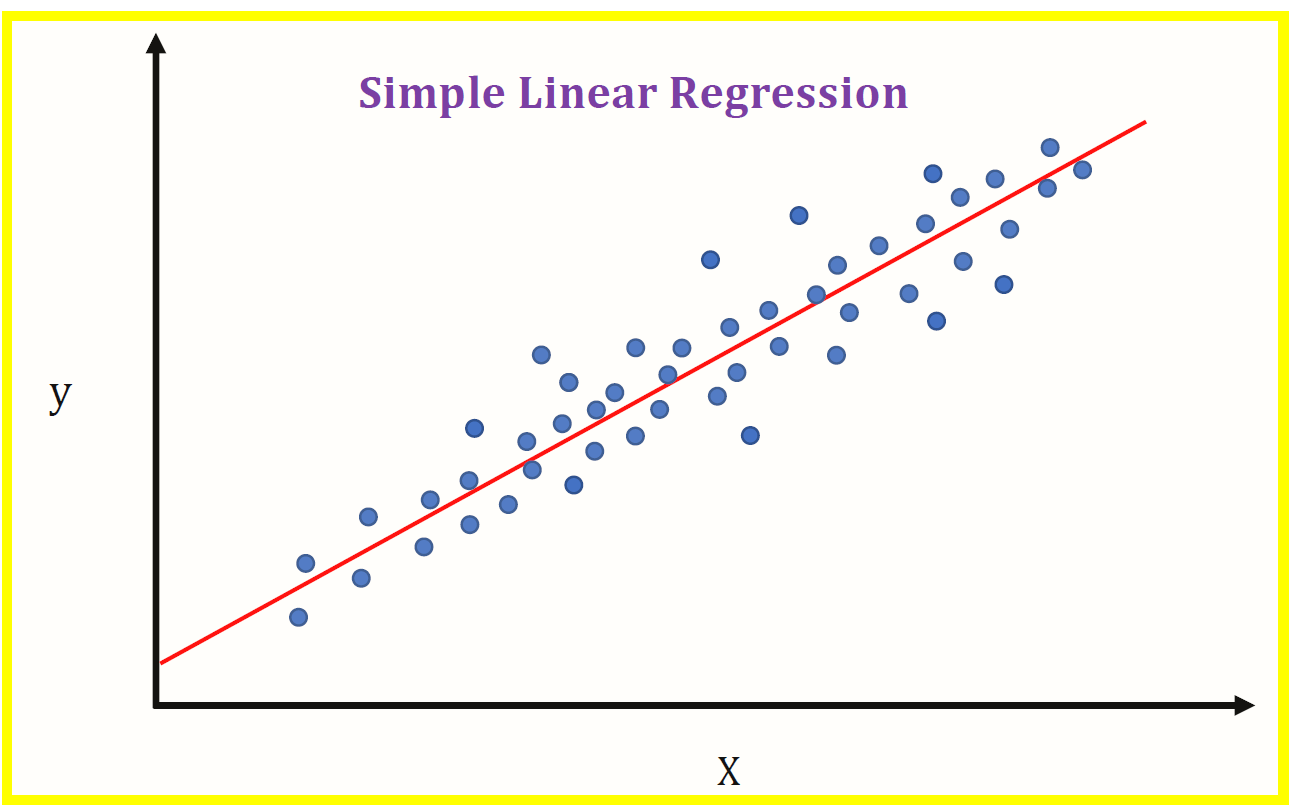

As the model is linear, how would you draw a line to fit all the "dots"?

You could "fit" all the "dots" using something like scipy.optimize.curve_fit (non-linear least squares), but consider how it would perform on previously unseen data.

Refer to: scipy.optimize.curve_fit

# Random seed 42

One of the questions on the homework calls for using a random seed of 42. When using 42, all my missing values ended up in my training dataframe and not my validation or test dataframes, why is that?

The purpose of the seed value is to randomly generate the proportion split. Using a seed of 42 ensures that all learners are on the same page by getting the same behavior (in this case, all missing values ending up in the training dataframe). If using a different seed value (e.g. 9), missing values will then appear in all other dataframes.

# Shuffling the initial dataset using pandas built-in function

It is possible to shuffle the dataset using the pandas built-in function pandas.DataFrame.sample. To shuffle the complete dataset and reset the index, use the following commands:

- Set

frac=1to return a shuffled version of the complete dataset. - Set

random_state=seedfor consistent randomization.

df_shuffled = df.sample(frac=1, random_state=seed)

df_shuffled.reset_index(drop=True, inplace=True)

# Shuffling data using Numpy’s Generator Feature

While the lectures have you use the shuffle function to shuffle the index of the dataframe, it no longer accepts random seed as a parameter. This is because Numpy converted this feature into its own "Generator Class". In order to assign the random generator a seed, you have to specify the object (rng) that you are going to utilize in your code:

# Create index from range of values in array

idx = np.arange(n)

# Create random generator object and set seed

rng = np.random.default_rng(random_seed)

# Shuffle values using Generator object

rng.shuffle(idx)

# When should we transform the target variable to logarithm distribution?

When the target variable has a long tail distribution, such as prices with a wide range, you can transform it using the np.log1p() method. However, be aware that this method will not work if your target variable contains negative values.

# ValueError: shapes not aligned

X_train = prepare_X(df_train)

w_0, w = train_linear_regression(X_train, y_train)

X_val = prepare_X(df_val)

y_pred = w_0 + X_val.dot(w)

rmse(y_val, y_pred)

We get:

ValueError Traceback (most recent call last)

Input In [132], in <cell line: 5>()

2 w_0, w = train_linear_regression(X_train, y_train)

4 X_val = prepare_X(df_val)

----> 5 y_pred = w_0 + X_val.dot(w)

7 rmse(y_val, y_pred)

ValueError: shapes (4128,) and (1,) not aligned: 4128 (dim 0) != 1 (dim 0)

If we try to perform an arithmetic operation between two arrays of different shapes or dimensions, it throws an error like operands could not be broadcast together with shapes. Broadcasting can occur in certain scenarios and will fail in others.

To solve this issue, you can use the * operator instead of the dot() method:

X_train = prepare_X(df_train)

w_0, w = train_linear_regression(X_train, y_train)

X_val = prepare_X(df_val)

y_pred = w_0 + (X_val * w)

rmse(y_val, y_pred)

Output:

0.5713144443358035

# How to copy a dataframe without changing the original dataframe?

Copy a dataframe using:

X_copy = X.copy()

This creates a deep copy of the dataframe. If you use X_copy = X, it will create a "view" and any changes to X_copy will affect the original dataframe X. This is not a real copy.

# What is standard deviation?

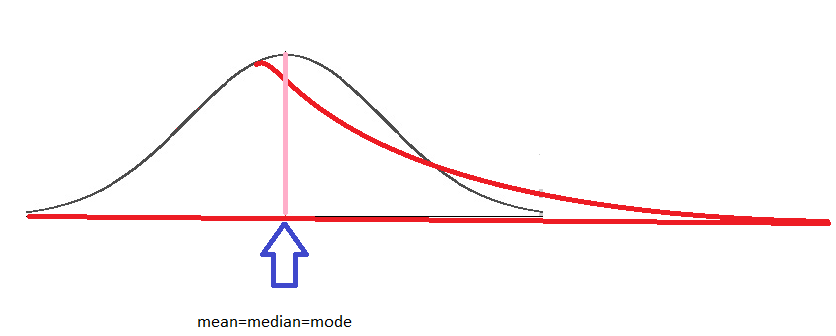

One of the most important characteristics of the normal distribution is that mean = median = mode, meaning the most popular value, the mean of the distribution, and 50% of the sample are under the same value. This is equivalent to saying that the area under the curve (black) is the same on the left and on the right. The long tail (red curve) results from a few observations with high values, altering the behavior of the distribution. Consequently, the area is different on each side, and the mean, median, and mode become different. The mean is no longer representative, the range is larger, and the probability of being on the left or right is not the same.

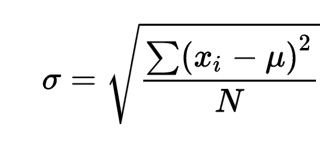

In statistics, the standard deviation is a measure of the amount of variation or dispersion of a set of values. A low standard deviation indicates that the values tend to be close to the mean (also called the expected value) of the set, while a high standard deviation indicates that the values are spread out over a wider range. Wikipedia The formula to calculate standard deviation is:

# Do we need to apply regularization techniques always? Or only in certain scenarios?

The application of regularization depends on the specific situation and problem. It is recommended to consider it when training machine learning models, especially with small datasets or complex models, to prevent overfitting. However, its necessity varies depending on the data quality and size. Evaluate each case individually to determine if it is needed.

# Shortcut: define functions for faster execution

Defining functions can speed up development significantly. You can create a function like prepare_df(initial_df, seed, fill_na_type) to prepare all three dataframes and y_vectors. The fillna() operation can be applied before splitting the initial_df.

Additionally, you can reuse functions such as rmse() and train_linear_regression(X, y, r) from the class notebook.

# Warning about modifying Dataframes inside functions

When applying a function to a DataFrame, it is important to consider that if you do not want to alter the original DataFrame, you should create a copy of it first. Failing to do so may result in unintended modifications to the original dataset.

To preserve the integrity of your data, always use df.copy() before making any changes.

# Find standard deviation with Pandas

To find the standard deviation of a list or series of data using Pandas, you can convert the list into a Pandas Series and use the .std() function. For example:

import pandas as pd

x = [1, 2, 3, 4, 5]

standard_deviation = pd.Series(x).std()

print(standard_deviation)

This will calculate the standard deviation of the list x.

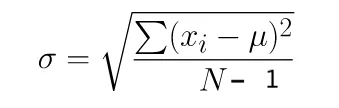

# Standard Deviation Differences in Numpy and Pandas

Numpy and Pandas use different equations to compute the standard deviation. Numpy uses the population standard deviation by default, whereas Pandas uses the sample standard deviation.

Numpy

Pandas

Pandas computes the standard deviation using one degree of freedom by default. You can modify the degree of freedom in Numpy to achieve a similar result by using the ddof parameter:

import numpy as np

np.std(df.weight, ddof=1)

The result will be similar if we set ddof=1 in Numpy.

# Standard deviation using Pandas built in Function

In pandas, you can use the built-in function std() to calculate the standard deviation. For example:

To get the standard deviation of a single column:

df['column_name'].std()To get the standard deviation of multiple columns:

df[['column_1', 'column_2']].std()

# How to combine train and validation datasets

Use pandas.concat function (pandas documentation) to combine two dataframes. To combine two numpy arrays, use numpy.concatenate (numpy documentation).

The code would be as follows:

df_train_combined = pd.concat([df_train, df_val])

y_train = np.concatenate((y_train, y_val), axis=0)

# Understanding RMSE and how to calculate RMSE score

The Root Mean Squared Error (RMSE) is one of the primary metrics to evaluate the performance of a regression model. It calculates the average deviation between the model's predicted values and the actual observed values, offering insight into the model's ability to accurately forecast the target variable. To calculate the RMSE score:

Import the necessary libraries:

import numpy as np from sklearn.metrics import mean_squared_errorCalculate the Mean Squared Error (MSE):

mse = mean_squared_error(actual_values, predicted_values)Compute the RMSE:

rmse = np.sqrt(mse) print("Root Mean Squared Error (RMSE):", rmse)

# Deep dive into normal equation for regression

I found this video useful for understanding how we derive the normal form in linear regression: Normal Equation Derivation for Regression.

# Useful Resource for Missing Data Treatment

# What sklearn version is Alexey using in the YouTube videos?

Version 0.24.2 and Python 3.8.11.

Module 2 Homework

# Homework Q4: Is r same as alpha in Scikit-Learn Ridge?

In the context of regression, particularly with regularization:

rtypically represents the regularization parameter in some algorithms. It controls the strength of the penalty applied to the coefficients of the regression model to prevent overfitting.In

sklearn.Ridge(), the parameteralphaserves the same purpose asr. It specifies the amount of regularization applied to the model. A higher value ofalphaincreases the amount of regularization, which can reduce model complexity and improve generalization.

r and alpha are both regularization parameters and control the "strength" of regularization. Increasing these values will lead to stronger regularization. However, the mathematical implementation differs:

sklearn.Ridge():||y - Xw||^2_2 + alpha * ||w||^2_2Lesson's Notebook (

train_linear_regression_regfunction):XTX = XTX + r * np.eye(XTX.shape[0])radds “noise” to the main diagonal to prevent multicollinearity, which “breaks” finding the inverse matrix.

For further reference, see the sklearn.Ridge documentation and the lesson’s notebook.

# Homework: The answer I get for one of the homework questions doesn't match any of the options. What should I do?

That’s normal. We all have different environments: our computers have different versions of OS and different versions of libraries — even different versions of Python.

If it’s the case, just select the option that’s closest to your answer.

# Homework: Q3: Meaning of mean

In question 3 of HW02 it is mentioned: ‘For computing the mean, use the training only’. What does that mean?

It means that you should use only the training data set for computing the mean, not the validation or test data set. This is how you can calculate the mean:

# Calculate mean for a specific column in the training data

mean_value = df_train['column_name'].mean()

Another option:

# Get descriptive statistics, including the mean

stats = df_train['column_name'].describe()

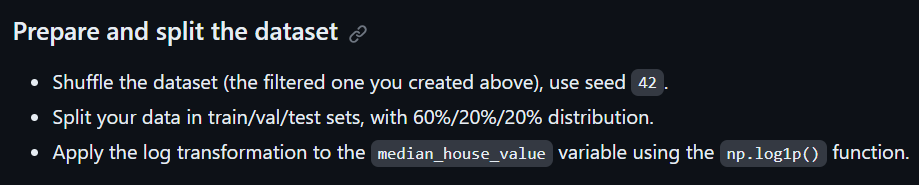

# Caution for applying log transformation in Week-2 2023 cohort homework

The instruction for applying log transformation to the ‘median_house_value’ variable is provided before Q3 in the homework for Week-2 under the ‘Prepare and split the dataset’ heading.

However, this instruction is absent in the subsequent questions of the homework, and you might encounter issues like a huge RMSE. Remember to apply log transformation to the target variable for each question.

# Homework: is the RMSE result close to the options?

My result is about 12.4 different from the closest option. For previous questions, my answers were close, so I'm unsure why there's a large discrepancy for question 6.

For questions 5 and 6, please ensure you reinitialize with:

Idx = np.arange(n)

This should be done for each iteration of r in question 5 and also for question 6.

Module 3. Machine Learning for Classification

# What is the best way to handle missing values in the dataset before training a regression model?

You can handle missing values by:

- Imputing the missing values with the mean, median, or mode.

- Using algorithms that support missing values inherently (e.g., some tree-based methods).

- Removing rows or columns with missing data, depending on the extent of missingness.

- Utilizing feature engineering to derive new features from incomplete data.

# Error: Could not convert string to float: 'Nissan'

The error message "could not convert string to float: 'Nissan'" typically occurs when a machine learning model or function is expecting numerical input but receives a string instead. In this case, it seems like the model is trying to convert the car brand 'Nissan' into a numerical value, which isn’t possible.

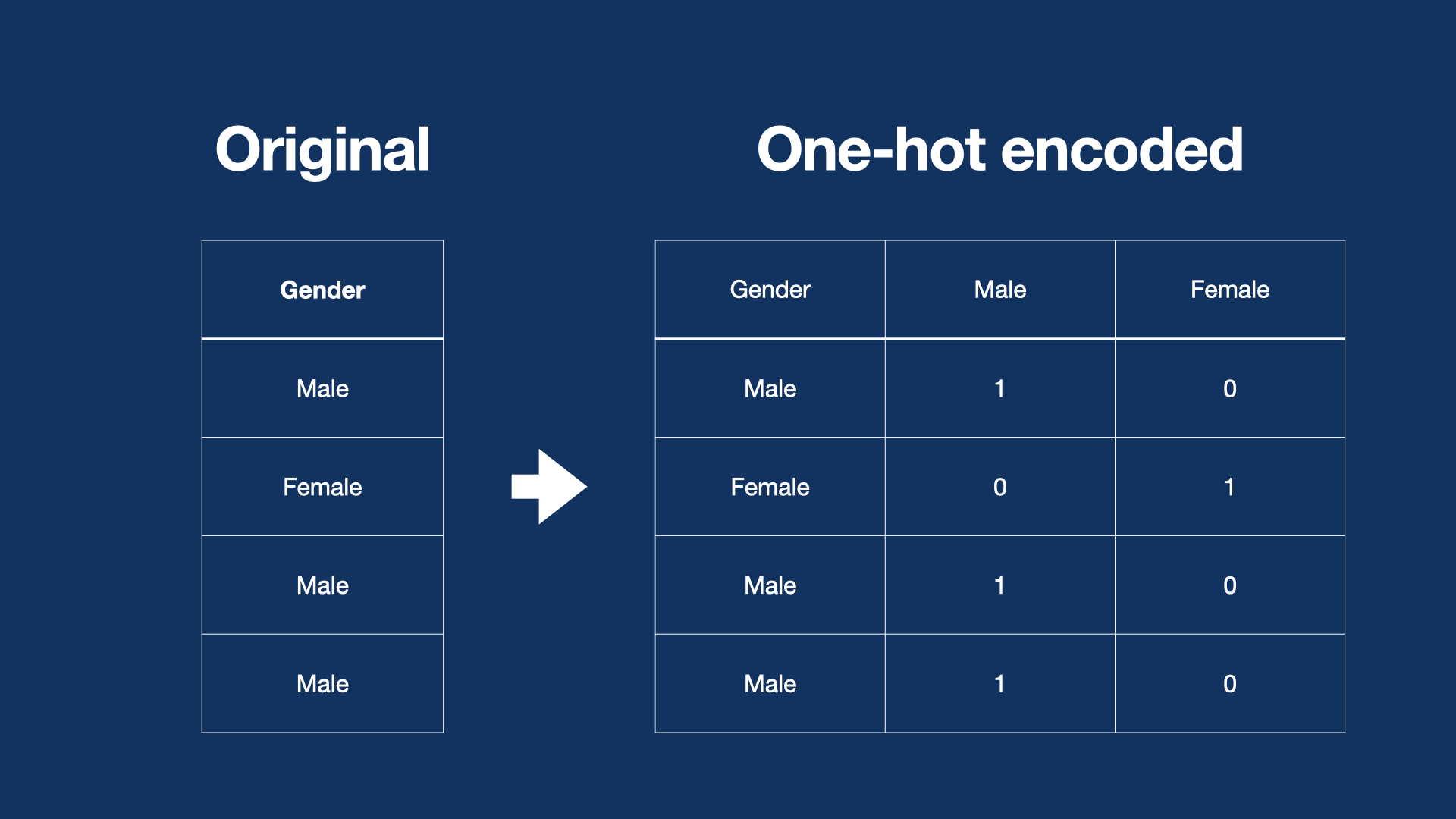

To resolve this issue, you can encode categorical variables like car brands into numerical values. One common method is one-hot encoding, which creates new binary columns for each category/label present in the original column.

Here’s an example of how you can perform one-hot encoding using pandas:

import pandas as pd

# Assuming 'data' is your DataFrame and 'brand' is the column with car brands

data_encoded = pd.get_dummies(data, columns=['brand'])

In this code, pd.get_dummies() creates a new DataFrame where the brand column is replaced with binary columns for each brand (e.g., brand_Nissan, brand_Toyota, etc.). Each row in the DataFrame has a 1 in the column that corresponds to its brand and 0 in all other brand columns.

# Homework: Why did we change the targets to binary format when calculating mutual information score in the homework?

Mutual Information score calculates the relationship between categorical or discrete variables. In the homework, the target, median_house_value, was continuous. Thus, we changed it to a binary format to make its values discrete (either 0 or 1).

Keeping the target as a continuous variable would require the algorithm to divide it into bins, which would be highly subjective. This is why continuous variables are not used for mutual information score calculation.

# How do you find the correlation matrix?

First, you have to consider whether the data is numerical or categorical. If it’s numerical, you can correlate it directly. If it’s categorical, you can find the correlations indirectly by vectorizing the data using One-Hot encoding or a similar method.

To determine if data is numerical, check the dtypes of the DataFrame. Data types such as integer and float are numerical, while types such as objects are categorical. You can correlate the numerical data by specifying which columns are numerical and using that as input to a correlation matrix.

Example:

numerical = ['tenure', 'monthlycharges', 'totalcharges']

correlation_matrix = df[numerical].corr()

print(correlation_matrix)

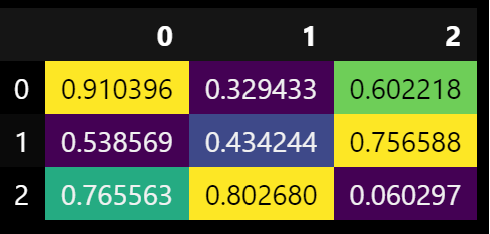

# Coloring the background of the pandas.DataFrame.corr correlation matrix directly

The background of any DataFrame, including the correlation matrix, can be colored based on its numerical values using the method pandas.io.formats.style.Styler.background_gradient.

Here is an example of how to color the correlation matrix. A color map of choice can be passed; here, 'viridis' is used:

- Ensure the DataFrame contains only numerical values before calling

corr:

corr_mat = df_numerical_only.corr()

corr_mat.style.background_gradient(cmap='viridis')

- Here is an example of how the coloring will look using a DataFrame containing random values and applying

background_gradientto it:

np.random.seed = 3

df_random = pd.DataFrame(data=np.random.random(3*3).reshape(3,3))

print(df_random.style.background_gradient(cmap='viridis'))

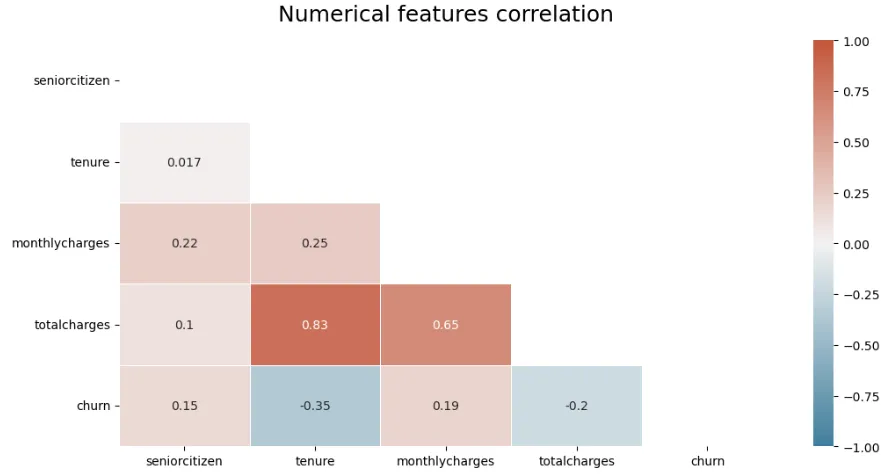

# Identifying highly correlated feature pairs easily through unstack

To identify highly correlated feature pairs using unstack:

import pandas as pd

data_corr = pd.DataFrame(data_num.corr().round(3).abs().unstack().sort_values(ascending=False))

print(data_corr.head(10))

You can also use seaborn to create a heatmap with the correlation:

import seaborn as sns

sns.heatmap(

df[numerical_features].corr(),

annot=True,

square=True,

fmt=".2g",

cmap="crest"

)

To refine your heatmap and plot only a triangle, with a blue to red color gradient, that will show every correlation between your numerical variables without redundant information:

# Set figure size: modify it here or create new function arguments

plt.figure(figsize=(12, 6))

# define the mask to set the values in the upper triangle to True

mask = np.triu(np.ones_like(dataframe.corr(numeric_only=True), dtype=bool))

# Generate a custom diverging colormap

cmap = sns.diverging_palette(230, 20, as_cmap=True)

heatmap = sns.heatmap(

dataframe.corr(numeric_only=True),

mask=mask,

cmap=cmap,

vmin=-1,

vmax=1,

annot=True,

linewidths=0.5,

)

heatmap.set_title(title, fontdict={"fontsize": 18}, pad=16)

plt.show()

Which outputs, in the case of a churn dataset:

# What data should be used for EDA?

It's indeed good practice to only rely on the train dataset for EDA. Including validation might be okay. But we aren't supposed to touch the test dataset; even just looking at it isn't a good idea. We indeed pretend that this is the future unseen data.

# DictVectorizer: Fitting on validation data

Validation datasets are used to optimize models by providing an estimate of performance on unseen data. Understanding how to properly use the DictVectorizer class is crucial for maintaining this separation between training and validation.

- Fitting on Training Data: The

fitmethod ofDictVectorizeranalyzes the training dataset to determine how to map dictionary values. Categorical features are one-hot encoded, while numeric features remain unchanged. - Avoid Fitting on Validation Data: Applying the

fitmethod to validation data can lead to information leakage, as it exposes the model to data it should not see during training. - Appropriate Usage:

- Use

fit_transformon the training dataset. - Use

transformonly on validation and test datasets.

- Use

By following these practices, the model's performance on new data can be more accurately assessed.

# FutureWarning: Function get_feature_names is deprecated; get_feature_names is deprecated in 1.0 and will be removed in 1.2

In newer versions of scikit-learn, the method has been replaced by get_feature_names_out().

Instead, use the method .get_feature_names_out() from the DictVectorizer function to resolve the warning.

# Example usage

from sklearn.feature_extraction import DictVectorizer

# Initialize the vectorizer

vectorizer = DictVectorizer()

# After fitting the vectorizer

vectorizer.fit_transform(...)

# Get feature names

feature_names = vectorizer.get_feature_names_out()

Note: The warning indicates that get_feature_names will be removed, so switching to get_feature_names_out is recommended even though the warning itself won't cause issues yet.

# Logistic regression crashing Jupyter kernel

Fitting the logistic regression takes a long time or the kernel crashes when calling predict() with the fitted model.

Ensure that the target variable for the logistic regression is binary.

# Understanding Ridge

Ridge regression is a linear regression technique used to mitigate the problem of multicollinearity (when independent variables are highly correlated) and prevent overfitting in predictive modeling. It adds a regularization term to the linear regression cost function, penalizing large coefficients.

sag Solver: The sag solver stands for "Stochastic Average Gradient." It's particularly suitable for large datasets, as it optimizes the regularization term using stochastic gradient descent (SGD). sag can be faster than some other solvers for large datasets.

Alpha: The alpha parameter controls the strength of the regularization in Ridge regression. A higher alpha value leads to stronger regularization, which means the model will have smaller coefficient values, reducing the risk of overfitting.

from sklearn.linear_model import Ridge

ridge = Ridge(alpha=alpha, solver='sag', random_state=42)

ridge.fit(X_train, y_train)

# pandas.get_dummies() and DictVectorizer(sparse=False) produce the same type of one-hot encodings:

DictVectorizer(sparse=True) produces CSR) format, which is both more memory efficient and converges better during fit().

It stores non-zero values and indices instead of adding a column for each class of each feature, which can result in large numbers of columns (e.g., models of cars).

Using "sparse" format is slower (around 6-8 minutes for Q6 task - Linear/Ridge Regression) for a high number of classes (like car models) and produces slightly worse results in both Logistic and Linear/Ridge Regression.

It also generates convergence warnings for Linear/Ridge Regression.

# ConvergenceWarning: The max_iter was reached

If you're encountering the following warning:

ConvergenceWarning: The max_iter was reached which means the coef_ did not converge

This usually happens because the solver the model uses is sensitive to feature scales.

You can do the following to address it:

Normalize Numerical Features

- Scale your numerical features using techniques like

StandardScalerorMinMaxScaler. - This ensures that all numerical features are on a similar scale, which helps the solver converge.

- Scale your numerical features using techniques like

Encode Categorical Features

- Apply

OneHotEncoder(OHE) to categorical features to represent them as binary vectors. - Use

sparse=Falsewhen necessary to return a dense array.

- Apply

Separate and Combine Features

- Process numerical and categorical features separately (scaling for numerical, OHE for categorical).

- Combine them afterward into a single feature matrix (

X_train) to use as input for Ridge regression.

Experiment with Different Scalers

- If issues persist, try different scalers as Ridge can behave differently depending on feature scaling.

By following these steps, you can reduce convergence errors and improve model stability. For a detailed example, see this notebook: notebook-scaling-ohe.ipynb.

# Sparse matrix compared to dense matrix

A sparse matrix is more memory-efficient because it only stores the non-zero values and their positions in memory. This is particularly useful when working with large datasets with many zero or missing values.

The default DictVectorizer configuration is a sparse matrix. For Week 3, Question 6, using the default sparse configuration is beneficial due to the size of the matrix. Training the model was also more performant and didn’t produce an error message like dense mode.

# How to Disable/avoid Warnings in Jupyter Notebooks

The warnings in Jupyter notebooks can be disabled or avoided with the following commands:

import warnings

warnings.filterwarnings("ignore")

# Homework: Could you please help me with HW3 Q3: "Calculate the mutual information score with the (binarized) price for the categorical variable that we have. Use the training set only." What is the second variable that we need to use to calculate the mutual information score?

You need to calculate the mutual information score between the binarized price (above_average) variable and ocean_proximity, the only original categorical variable in the dataset.

# What is the difference between OneHotEncoder and DictVectorizer?

Both work in similar ways to convert categorical features to numerical variables for use in training a model. The difference lies in the input:

- OneHotEncoder uses an array as input.

- DictVectorizer uses a dictionary.

Both will produce the same result. However, with OneHotEncoder, features are sorted alphabetically. With DictVectorizer, you stack features as desired.

# What is the difference between pandas get_dummies and sklearn OnehotEncoder?

They are basically the same. There are some key differences with regards to their input/output types, handling of missing values, etc., but they are both techniques to one-hot-encode categorical variables with identical results.

- pandas get_dummies: A convenient choice when working with Pandas DataFrames.

- sklearn OneHotEncoder: More suitable for building a scikit-learn-based machine learning pipeline to handle categorical data as part of that pipeline.

# Why am I getting TypeError while creating OneHotEncoder Object?

In scikit-learn >= 1.2, OneHotEncoder no longer accepts the sparse parameter. It now uses the sparse_output parameter to control whether the output is sparse or dense.

What to do:

- For a dense array: use

OneHotEncoder(sparse_output=False). - For a sparse matrix: use

OneHotEncoder(sparse_output=True).

If you previously wrote ohe = OneHotEncoder(sparse=False) and get a TypeError, replace it with ohe = OneHotEncoder(sparse_output=False).

Example:

from sklearn.preprocessing import OneHotEncoder

ohe = OneHotEncoder(sparse_output=False)

X_train_cat = ohe.fit_transform(df_train[categorical_columns].values)

Notes:

- A dense array will be returned if

sparse_output=False; otherwise, you’ll get a scipy sparse matrix. - If you need a dense array from a sparse result, call

.toarray()on the result. - This change ensures better memory efficiency and consistency in newer scikit-learn versions.

# Correlation before or after splitting the data

Correlation should be calculated after splitting the data, specifically on the train dataset.

To find the two most correlated features:

- Generate the correlation matrix of the train dataset.

- Identify the pair of features with the highest absolute correlation coefficient.

# Transforming Non-Numerical Columns into Numerical Columns

Use sklearn.preprocessing encoders and scalers, e.g. OneHotEncoder, OrdinalEncoder, and StandardScaler.

# What is the better option FeatureHasher or DictVectorizer?

These methods both receive a dictionary as input. While the DictVectorizer will store a large vocabulary and take up more memory, FeatureHasher creates vectors with a predefined length. They are both used for handling categorical features.

- If you have high cardinality in categorical features, it's better to use

FeatureHasher. - If you want to preserve feature names in transformed data and have a small number of unique values, use

DictVectorizer.

Your choice will depend on your data. For more information, you can visit scikit-learn.org

# Isn't it easier to use DictVertorizer or get dummies before splitting the data into train/val/test? Is there a reason we wouldn't do this? Or is it the same either way?

The reason it's recommended to do it after splitting is to avoid data leakage. You don't want any data from the test set influencing the training stage, similarly from the validation stage in the initial training. See e.g. scikit-learn documentation on "Common pitfalls and recommended practices": https://scikit-learn.org/stable/common_pitfalls.html

# Encoding Techniques

This article explains different encoding techniques used.

# Error in use of accuracy_score from sklearn in Jupyter (sometimes)

I got this error multiple times; here is the code:

accuracy_score(y_val, y_pred >= 0.5)

TypeError: 'numpy.float64' object is not callable

I solved it using:

from sklearn import metrics

metrics.accuracy_score(y_train, y_pred >= 0.5)

# What is the difference between .decision_function() and .predict_proba()?

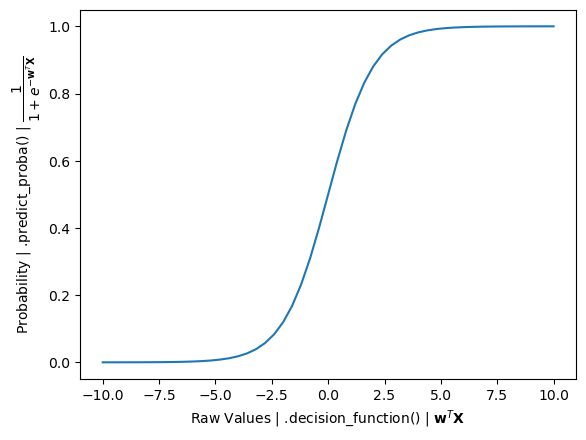

In Scikit-Learn’s LogisticRegression, a model that is trained will have raw values and the predicted probabilities.

.decision_function() returns raw values that are a linear combination of the features and weights, similar to the output of Linear Regression.

.predict_proba() goes one step further by inputting these raw values into the sigmoid function to convert them into probabilities (between 0 and 1).

# Why do I get a KeyError when dropping features after one-hot encoding?

The error occurs because some features you try to drop have been one-hot encoded into multiple columns. After encoding, the original column may no longer exist, leading to the KeyError. To resolve this, identify and drop all related one-hot encoded columns (e.g., those starting with the original feature name) instead of the original feature itself.

For example, after one-hot encoding, the column marital could have been split into columns like marital_single, marital_married, etc. This means that the original column marital no longer exists, leading to the KeyError.

Module 3 Homework

# What data should we use for correlation matrix?

Q2 asks about the correlation matrix and converting median_house_value from numeric to binary. Just to clarify, we are only dealing with df_train, not df_train_full, correct? The question explicitly mentions the train dataset.

Yes, it is only on df_train. The reason is that df_train_full also contains the validation dataset. At this stage, we don't want to make conclusions based on the validation data, since we want to test how we did without using that portion of the data.

# Feature elimination

For Q5 in homework, should we calculate the smallest difference in accuracy in real values (i.e. -0.001 is less than -0.0002) or in absolute values (i.e. 0.0002 is less than 0.001)?

We should select the “smallest” difference, and not the “lowest”, meaning we should reason in absolute values.

If the difference is negative, it means that the model actually became better when we removed the feature.

# How to select the alpha parameter in Q6

To select the alpha parameter, you need to find the RMSE for each alpha. If RMSE scores are equal, choose the lowest alpha.

# Features for homework: Q5

Do we need to train the model only with the features: total_rooms, total_bedrooms, population, and households, or with all the available features, then remove each of the previous features one at a time to compare accuracy?

- Create a list of all features and evaluate the model to obtain the original accuracy.

- Remove one feature at a time.

- Train the model each time, calculate the accuracy, and find the difference between the original accuracy and the new accuracy.

- Identify which feature has the smallest absolute accuracy difference.

While calculating differences between accuracy scores, use the smallest absolute difference. For example, if the differences are -4 and -2, the smallest absolute difference is abs(-2). Use this value to determine the impact of the feature on accuracy.

# Homework 3: Use of random seed

For the test_train_split question on week 3's homework, are we supposed to use 42 as the random_state in both splits or only the first one?

Answer: For both splits, random_state = 42 should be used.

# Homework Q6: Choosing smaller C that leads to best accuracy

When searching for the best value of C that yields the highest accuracy, be mindful that you should be looking for the maximum accuracy, not the minimum.

Although the goal is to find the smallest C value, ensure that it corresponds to the highest accuracy achieved. Always prioritize accuracy maximization while minimizing C.

# Homework: I am getting 1.0 as accuracy. Should I use the closest option?

If you are getting 1.0 as accuracy, it is possible that you have overfitted the model. Dropping the column msrp/price can help you solve this issue.

# Homework Q6: Train a regularized logistic regression with C=0.0

This is not possible since the parameter C represents the inverse of the regularization strength. Setting C to 0 means infinite regularization. Attempting this with the scikit-learn module of Logistic Regression will result in a ValueError.

# Should I fill missing values before or after splitting data (Q4)?

When working on Q4, should I handle missing values (fillna) before splitting the data, or should I do it in each individual question?

This is an important ML workflow design decision. Here are the tradeoffs:

Option A: Handle missing values before splitting

- Clean data once, use everywhere. All future scripts for q3, q4, q5, q6, etc get clean data automatically.

- More efficient as we don't repeat cleaning logic

- CAUTION: You need to be careful about data leakage (see below)

Option B: Handle missing values in each question script (after splitting)

- Each script is self-contained

- More flexible if different questions need different fillna strategies

- You'd need to repeat cleaning logic multiple times

So the critical question comes to "does your strategy means data leakage?":

- If you fill numerical with mean → you MUST compute mean from train only (so you need to do it after the split!)

- If you fill numerical with constant, like 0.0 → Safe to do before split! Because this means there would be no leakage.

For example, if the homework specifies specific fillna values:

- Categorical → 'NA' (constant)

- Numerical → 0.0 (constant)

It means that both strategies use constants, so it's safe to fill missing values before splitting and there's no data leakage risk! This is because we're not computing statistics from the data.

If the fillna in the homework uses constants as fills, filling before splitting the data is more efficient since you clean the data once and all subsequent scripts use clean data automatically. Otherwise, you need to be extremely very careful about the data splits and fillna in your scripts

In production systems, the source data is rarely filled in for misisng values during the initial ingestion step. This is because you likely want to be able to reproduce all transformation on your side starting from "source as is".

Module 4. Evaluation Metrics for Classification

# Homework: How do I import data from 'bank-full.csv'?

Import the data using the following command:

import pandas as pd

df = pd.read_csv("bank-full.csv", sep=';')

Note that the data is separated by a semicolon, not a comma.

# What exactly is taught in the evaluation module?

In Module 4, you’ll learn the core evaluation concepts used in classification tasks. Topics include:

- Metrics and diagnostics: precision, recall, ROC curves, and precision-recall curves

- Evaluation mindsets: how to think critically about metrics and validation in ML projects

- Common pitfalls: data leakage, improper validation, misinterpretation of metrics and curves

- Practical interpretation: selecting metrics based on context (class balance, costs of errors) and conveying results clearly

- Real-world applicability: how these concepts guide model comparison, threshold selection, and reporting This module is designed to be conceptual and abstract, yet practical for real-world ML work.

# Why do we sometimes use random_state and not at other times?

Refer to the sklearn documentation, random_state is used to ensure the "randomness" that is used to shuffle the dataset is reproducible. It typically requires both random_state and shuffle parameters to be set accordingly.

# How to get all classification metrics?

To get classification metrics like precision, recall, F1 score, and accuracy simultaneously, use classification_report from sklearn.

For more information, check here.

# ValueError: This solver needs samples of at least 2 classes in the data, but the data contains only one class: 0

This error indicates that your dataset's churn column only contains the class 0, but at least two classes are required.

Check your data processing steps where binary conversion might be applied. Specifically, ensure that the line:

df.churn = (df.churn == 'yes').astype(int)is operating correctly. Verify that there are indeed records where

churnshould evaluate to1(i.e., cases wherechurnequals'yes').If all values are

0, make sure your original dataset and preprocessing steps are correctly implemented to represent cases with both classes (0and1).Review data preprocessing steps and confirm the filtering, transformation, or data importing steps do not inadvertently drop or misclassify the non-zero class records.

This should resolve the error by ensuring your data contains at least one record for each class.

# Method to get beautiful classification report

Use Yellowbrick. Yellowbrick is a library that combines scikit-learn with matplotlib to produce visualizations for your models. It produces colorful classification reports.

# Use AUC to evaluate feature importance of numerical variables

Check the solutions from the 2021 iteration of the course. You should use roc_auc_score.

# How to use AUC for numerical features?

When calculating the ROC AUC score using sklearn.metrics.roc_auc_score, the function expects two parameters: y_true and y_score. For each numerical value in the dataframe, it will be passed as the y_score to the function, and the target variable will be passed as y_true each time.

# What does KFold do?

KFold is a cross-validation technique that splits your dataset into k equal parts (folds). It trains the model k times, each time using a different fold as the validation set while training on the remaining folds. This process helps provide a more reliable estimate of a model's performance by ensuring every data point gets to be in both the training and validation sets. The average score across all folds offers a robust evaluation, minimizing the risk of overfitting to a specific train-test split.

What does this line do?

KFold(n_splits=n_splits, shuffle=True, random_state=1)

Positioning in Code: Whether you instantiate KFold inside the loop over different regularization values like

[0.01, 0.1, 1, 10]or outside, it typically does not affect your answer. This is becauseKFoldis essentially a generator object containing the informationn_splits,shuffle, andrandom_state.Impact of Random State: Changing the