Data Engineering Zoomcamp FAQ

Table of Contents

General Course-Related Questions

# Course: When does the course start?

The next cohort starts January 13th, 2025. More info at DTC.

- Register before the course starts using this link.

- Join the course Telegram channel with announcements.

- Don’t forget to register in DataTalks.Club's Slack and join the channel.

# Course: What are the prerequisites for this course?

To get the most out of this course, you should have:

- Basic coding experience

- Familiarity with SQL

- Experience with Python (helpful but not required)

No prior data engineering experience is necessary. See Readme on GitHub.

# Course: Can I still join the course after the start date?

Yes, even if you don't register, you're still eligible to submit the homework.

Be aware, however, that there will be deadlines for turning in homeworks and the final projects. So don't leave everything for the last minute.

# Course: I have registered for the Data Engineering Bootcamp. When can I expect to receive the confirmation email?

You don't need a confirmation email. You're accepted. You can start learning and submitting homework without registering. Registration was just to gauge interest before the start date.

# Course: What can I do before the course starts?

Start by installing and setting up all the dependencies and requirements:

- Google Cloud account

- Google Cloud SDK

- Python 3 (installed with Anaconda)

- Terraform

- Git

Look over the prerequisites and syllabus to see if you are comfortable with these subjects.

# Course: how many Zoomcamps in a year?

There are multiple Zoomcamps in a year, as of 2025. More info at DTC Article.

They are five separate courses, estimated to be during these months:

- Data-Engineering: Jan - Apr

- Stock Market Analytics: Apr - May

- MLOps: May - Aug

- LLM: June - Sep

- Machine Learning: Sep - Jan

There's only one Data-Engineering Zoomcamp “live” cohort per year for the certification, similar to the other Zoomcamps. They follow pretty much the same schedule for each cohort. For Data-Engineering, it is generally from Jan-Apr of the year.

If you’re not interested in the Certificate, you can take any Zoomcamp at any time, at your own pace, out of sync with any “live” cohort.

# Course: Is the current cohort going to be different from the previous cohort?

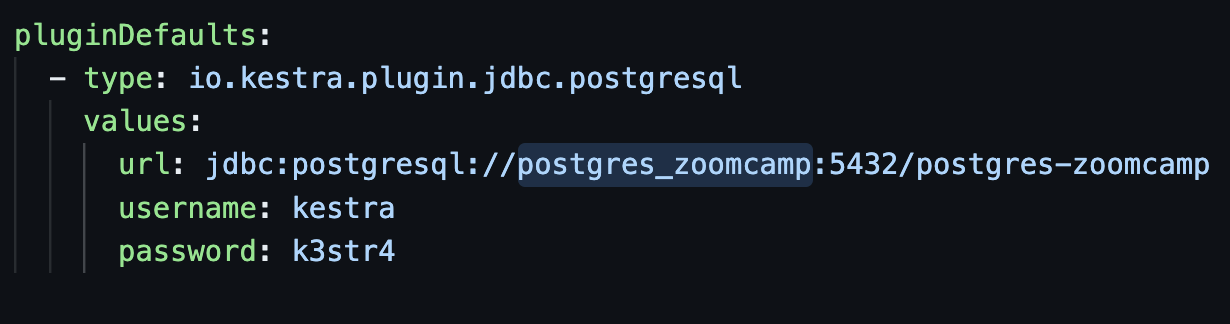

For the 2025 edition, we are using Kestra (see Demo) instead of MageAI (Module 2). Look out for new videos. See Playlist.

For the 2024 edition, we used Mage AI instead of Prefect and re-recorded the Terraform videos. For 2023, we used Prefect instead of Airflow. See playlists on YouTube and the cohorts folder in the GitHub repo.

# Course - Can I follow the course after it finishes?

Yes, we will keep all the materials available, so you can follow the course at your own pace after it finishes.

You can also continue reviewing the homeworks and prepare for the next cohort. You can also start working on your final capstone project.

# Course: Can I get support if I take the course in the self-paced mode?

Yes, the Slack channel remains open and you can ask questions there. However, always search the channel first and check the FAQ, as most likely your questions are already answered here.

You can also tag the bot @ZoomcampQABot to help you conduct the search, but don’t rely on its answers 100%.

# Course: Which playlist on YouTube should I refer to?

All the main videos are stored in the "DATA ENGINEERING ZOOMCAMP" main playlist. The GitHub repository is updated to include each video with a thumbnail, linking directly to the relevant playlist.

Refer to the Main Playlist for the core content, and then check specific year playlists for additional videos such as office hours.

# Course: How many hours per week am I expected to spend on this course?

It depends on your background and previous experience with modules. It is expected to require about 5 - 15 hours per week.

You can also calculate it yourself using this data and then update this answer.

# Office Hours: I can’t attend the “Office hours” / workshop, will it be recorded?

Yes! Every "Office Hours" will be recorded and available a few minutes after the live session is over; so you can view (or rewatch) whenever you want.

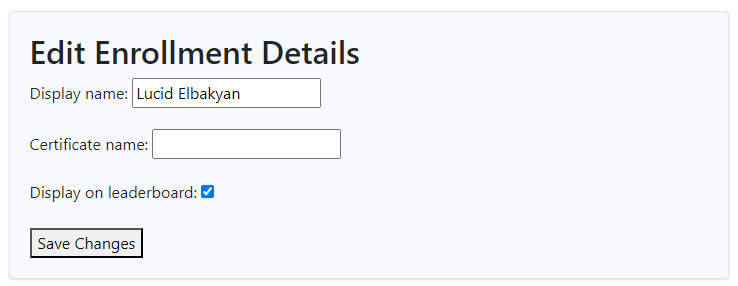

# Edit Course Profile.

The display name listed on the leaderboard is an auto-generated randomized name. You can edit it to be a nickname or your real name if you prefer. Your entry on the Leaderboard is the one highlighted in light green.

The Certificate name should be your actual name that you want to appear on your certificate after completing the course.

The "Display on Leaderboard" option indicates whether you want your name to be listed on the course leaderboard.

# Certificate: Do I need to do the homeworks to get the certificate?

No, as long as you complete the peer-reviewed capstone projects on time, you can receive the certificate. You do not need to do the homeworks if you join late, for example.

# Certificate - Can I follow the course in a self-paced mode and get a certificate?

No, you can only get a certificate if you finish the course with a “live” cohort. We don't award certificates for the self-paced mode. The reason is you need to peer-review capstone(s) after submitting a project. You can only peer-review projects at the time the course is running.

# Homework: What are homework and project deadlines?

2025 deadlines will be announced on the course website and in Google Calendar.

You can find the 2024 deadlines here: 2024 Deadlines Spreadsheet.

Also, take note of announcements from @Au-Tomator for any extensions or other news. The form may also show the updated deadline if the instructor(s) have updated it.

# Homework: Are late submissions of homework allowed?

No, late submissions are not allowed. However, if the form is still open after the due date, you can still submit the homework. Confirm your submission by checking the date-timestamp on the Course page. Ensure you are logged in.

# Homework and Leaderboard: What is the system for points in the course management

After you submit your homework, it will be graded based on the number of questions in that particular assignment. You can see the number of points you have earned at the top of the homework page. Additionally, in the leaderboard, you will find the sum of all points you've earned: points for Homeworks, FAQs, and Learning in Public.

Point System Overview:

- Homework: Points vary by assignment based on the number of questions.

- FAQ Contribution: You get a maximum of 1 point for contributing to the FAQ in the respective week.

- Learning in Public: For each learning in public link, you earn one point. You can achieve a maximum of 7 points.

Check this video for more details.

# Homework: What is the homework URL in the homework link?

Answer: In short, it’s your repository on GitHub, GitLab, Bitbucket, etc.

In long, your repository or any other location where you have your code, and a reasonable person would look at it and think, yes, you went through the week and exercises. Think of it like a portfolio you could present to an employer.

# Leaderboard: how do find myself on the leaderboard?

When you set up your account, you are automatically assigned a random name, such as "Lucid Elbakyan." If you want to see what your display name is, follow these steps:

- Go to your profile.

- Log in.

- Your display name is shown. You can also change it if you wish.

- Ensure your certificate name is correct, as this name will later be printed on your certificate.

# Environment: Is Python 3.9 still the recommended version to use in 2024?

Yes, for simplicity and stability when troubleshooting against recorded videos.

But Python 3.10 and 3.11 should work fine.

# Environment - Should I use my local machine, GCP, or GitHub Codespaces for my environment?

You can set it up on your laptop or PC if you prefer to work locally. However, Windows users might face some challenges.

If you prefer to work on the local machine, you can start with the Week 1 Introduction to Docker.

Alternatively, if you prefer to set up a virtual machine, consider the following:

- Using GitHub Codespaces

- Setting up the environment on a cloud VM: Refer to this video for guidance.

Working on a virtual machine is beneficial if you have different devices for home and office, allowing you to work virtually anywhere.

# Environment - Is GitHub codespaces an alternative to using cli/git bash to ingest the data and create a docker file?

GitHub Codespaces offers you computing Linux resources with many pre-installed tools (Docker, Docker Compose, Python).

You can also open any GitHub repository in a GitHub Codespace.

# Environment: Do we really have to use GitHub codespaces? I already have PostgreSQL & Docker installed.

It's up to you which platform and environment you use for the course.

GitHub Codespaces or GCP VM are just possible options, but you can do the entire course from your laptop.

# Environment - Do I need both GitHub Codespaces and GCP?

Choose the approach that aligns the most with your idea for the end project.

One should suffice; however, BigQuery, which is part of GCP, will be used, so learning that is probably a better option. Alternatively, you can set up a local environment for most of this course.

# Environment - Could not establish connection to "MyServerName": Got bad result from install script

This issue occurs when attempting to connect to a GCP VM using VSCode on a Windows machine. You can resolve it by changing a registry value in the registry editor.

Open the Run command window:

- Use the shortcut keys

Windows + R, or - Right-click "Start" and click "Run".

Open the Registry Editor:

- Type

regeditin the Run command window, then press Enter.

Change the registry value:

- Navigate to

HKEY_CURRENT_USER\Software\Microsoft\Command Processor. - Change the "Autorun" value from "if exists" to a blank.

Alternatively, you can delete the saved fingerprint within the known_hosts file:

In Windows, locate the file at C:\Users\<your_user_name>\.ssh\known_hosts and remove the entry for the server.

# Environment - Why are we using GCP and not other cloud providers?

For uniformity.

You can use other cloud platforms since you get every service provided by GCP in Azure and AWS. You’re not restricted to GCP and can use other platforms like AWS if you’re more comfortable.

Because everyone usually has a Google account, GCP offers a free trial period with $300 in credits for new users. Additionally, we are working with BigQuery, which is part of GCP.

Note that to sign up for a free GCP account, you must have a valid credit card.

# Should I pay for cloud services?

It's not mandatory. You can take advantage of their free trial.

# Environment: The GCP and other cloud providers are unavailable in some countries. Is it possible to provide a guide to installing a home lab?

You can do most of the course without a cloud. Almost everything we use (excluding BigQuery) can be run locally. We won’t be able to provide guidelines for some things, but most of the materials are runnable without GCP.

For everything in the course, there’s a local alternative. You could even do the whole course locally. Note that Homework 3 requires BigQuery.

# Environment: Is GCP Sandbox enough or we need the Free Trial?

Google Cloud Platform (GCP) provides two free trial options: the Free Trial with $300 credit and the Sandbox. Users can switch between these options by managing billing details.

However, completing the course solely using the GCP Sandbox option is not feasible due to its limited features. The Sandbox lacks some services required for the course, such as VMs, GCS Buckets, and other paid services that are integral to the curriculum.

The course will eventually require utilizing the following:

- VMs and GCS Buckets: These resources are not fully available in the Sandbox.

- BigQuery: A key component of GCP, and the Sandbox may not support all necessary functionalities.

Therefore, it is recommended to use the GCP Free Trial with billing details to access all needed features and ensure a smooth learning experience.

# Environment: I want to use AWS. May I do that?

Yes, you can. Just remember to adapt all the information from the videos to AWS. Additionally, the final capstone will be evaluated based on these tasks:

- Create a data pipeline

- Develop a visualization

Consider that when seeking help, you might need to rely on fellow coursemates who use AWS, which could be fewer compared to those using GCP.

Also, see "Is it possible to use x tool instead of the one tool you use?"

# Besides the “Office Hour” which are the live zoom calls?

We will probably have some calls during the Capstone period to clear some questions, but it will be announced in advance if that happens.

See Google Calendar

# Can I use Airflow instead for my final project?

Yes, you can use any tool you want for your project.

# Is it possible to use tool “X” instead of the one tool you use in the course?

Yes, this applies if you want to use Airflow or Prefect instead of Mage, AWS or Snowflake instead of GCP products, or Tableau instead of Metabase or Google Data Studio.

The course covers two alternative data stacks: one using GCP and one using local installation of everything. You can use one of them or use your tool of choice.

Considerations:

- We can’t support you if you choose to use a different stack.

- You would need to explain the different choices of tools for the peer review of your capstone project.

# How can we contribute to the course?

- Star the repository.

- Share it with friends if you find it useful.

- Create a pull request (PR) if you can improve the text or structure of the repository.

- Update this FAQ.

# Environment: Is the course [Windows/macOS/Linux/...] friendly?

Yes! Linux is ideal but technically it should not matter. Students in the 2024 cohort used all 3 OSes successfully.

# Environment: Roadblock for Windows users in modules with *.sh (shell scripts)

Later modules (module-05 & RisingWave workshop) use shell scripts in *.sh files. Most Windows users not using WSL will encounter issues and may not be able to continue, even in Git Bash or MINGW64. It is recommended to set up a WSL environment from the start.

# Any books or additional resources you recommend?

Yes to both! Check out this document: Awesome Data Engineering Resources

# Project: What is Project Attempt #1 and Project Attempt #2 exactly?

You will have two attempts for a project.

- If the first project deadline is over and you’re late, or you submit the project and fail the first attempt, you have another chance to submit the project with the second attempt.

# How to troubleshoot issues

First Steps:

- Attempt to solve the issue independently. Familiarize yourself with documentation as a crucial skill for problem-solving.

- Use shortcuts like

[ctrl+f]to search within documents and browsers. - Analyze the error message for descriptions, instructions, and possible solutions.

- Restart your application, server, or computer as needed.

Search for Solutions:

- Use search engines like Google, ChatGPT, or Bing AI to research the issue. It is rare to encounter a unique problem.

- Form your search queries using:

<technology> <problem statement>. E.g.,pgcli error column c.relhasoids does not exist. - Consult the technology’s official documentation for guidance.

Uninstallation and Reinstallation:

- Uninstall and then reinstall the application if needed, including a system restart.

- Note that reinstalling without prior uninstallation might not resolve the issue.

Seeking Help:

- Post questions on platforms like StackOverflow. Ensure your question adheres to guidelines: how-to-ask.

- Consider asking experts or colleagues in the future.

Community Resources:

- Check Slack channels for pinned messages and use its search function.

- Refer to this FAQ using search

[ctrl+f]or utilize the@ZoomcampQABotfor assistance.

When Asking for Help:

- Provide detailed information: coding environment, OS, commands, videos followed, etc.

- Share errors received, with specifics, including line numbers and actions taken.

- Avoid screenshots; paste code or errors directly. Use ``` for code formatting.

- Maintain thread consistency; respond in the same thread instead of creating multiple ones.

Re-evaluation:

- If the issue recurs, create a new post detailing changes in the environment.

- Communicate additional troubleshooting steps in the same thread.

- Occasionally take a break to gain a fresh perspective on the problem.

Documentation Contribution:

- If your problem solution is not listed, consider adding it to the FAQ to assist others.

# How to ask questions

When the troubleshooting guide does not help resolve your issue and you need another pair of eyes, include as much information as possible when asking a question:

- What are you coding on? What operating system are you using?

- What command did you run, and which video or tutorial did you follow?

- What error did you get? Does it have a line number pointing to the problematic code, and have you checked it for typos?

- What have you tried that did not work? This is crucial because, without it, helpers might suggest steps mentioned in the error log first. Or just refer to this FAQ document.

# How do I use Git / GitHub for this course?

After you create a GitHub account, clone the course repo to your local machine using the process outlined in this video:

Git for Everybody: How to Clone a Repository from GitHub.

Having this local repository on your computer will make it easy to access the instructors’ code and make pull requests if you want to add your own notes or make changes to the course content.

You will probably also create your own repositories to host your notes and versions of files. Here is a great tutorial that shows you how to do this:

How to Create a Git Repository.

Remember to ignore large databases, .csv, and .gz files, and other files that should not be saved to a repository. Use .gitignore for this:

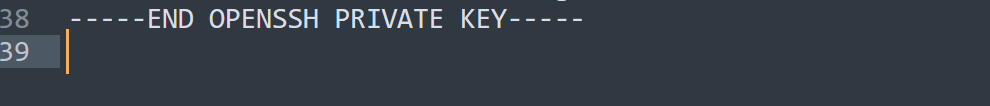

Important:

NEVER store passwords or keys in a git repo (even if the repo is set to private). Put files containing sensitive information (.env, secret.json, etc.) in your .gitignore.

This is also a great resource: Dangit, Git!?!

# VS Code: Tab using spaces

Error:

Makefile:2: *** missing separator. Stop.

Solution:

Tabs in documents should be converted to Tab instead of spaces. Follow this stack.

# Opening an HTML file with a Windows browser from Linux running on WSL

If you’re running Linux on Windows Subsystem for Linux (WSL) 2, you can open HTML files from the guest (Linux) with any Internet Browser installed on the host (Windows). Just install wslu and open the page using wslview:

wslview index.html

You can customize which browser to use by setting the BROWSER environment variable first. For example:

export BROWSER='/mnt/c/Program Files/Firefox/firefox.exe'

# Set up Chrome Remote Desktop for Linux on Compute Engine

This tutorial shows you how to set up the Chrome Remote Desktop service on a Debian Linux virtual machine (VM) instance on Compute Engine. Chrome Remote Desktop allows you to remotely access applications with a graphical user interface.

# How do I get my certificate?

There'll be an announcement in Telegram and the course channel for:

- Checking that your full name is displayed correctly on the Certificate (see Editing course profile on the Course Management webpage).

- Notifying when the grading is completed.

You will find it in your course profile (you need to be logged it).

For 2025 the link to the course profile is this:

https://courses.datatalks.club/de-zoomcamp-2025/enrollment

For other editions, change "2025" to your edition.

After the second announcement, follow instructions in certificates.md on how to generate the Certificate document yourself.

Module 1: Docker and Terraform

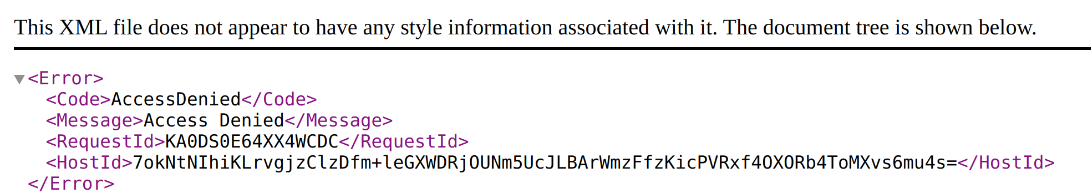

# Taxi Data: Yellow Taxi Trip Records downloading error

When attempting to download the 2021 data from the TLC website, you may encounter the following error:

ERROR 403: Forbidden

We have a backup, so use it instead: nyc-tlc-data

So the link should be yellow_tripdata_2021-01.csv.gz.

Note: Make sure to unzip the "gz" file (no, the "unzip" command won’t work for this).

# Taxi Data: How to handle *.csv.gz taxi data files?

In this video, the data file is stored as output.csv. If the file extension is csv.gz instead of csv, it won't store correctly.

To handle this:

Replace

csv_name = "output.csv"with the file name extracted from the URL. For example, for the yellow taxi data, use:url = "https://github.com/DataTalksClub/nyc-tlc-data/releases/download/yellow/yellow_tripdata_2021-01.csv.gz" csv_name = url.split("/")[-1]When you use

csv_namewithpandas.read_csv, it will work correctly becausepandas.read_csvcan directly read files with thecsv.gzextension.

Example:

import pandas as pd

url = "https://github.com/DataTalksClub/nyc-tlc-data/releases/download/yellow/yellow_tripdata_2021-01.csv.gz"

csv_name = url.split("/")[-1]

data = pd.read_csv(csv_name)

# Taxi Data: Data Dictionary for NY Taxi data?

Yellow Trips: Data Dictionary

Green Trips: Data Dictionary - LPEP Trip Records May 1, 2018

# Taxi Data: Unzip Parquet file

You can unzip the downloaded parquet file from the command line. The result is a CSV file which can be imported with pandas using pd.read_csv() as shown in the videos.

gunzip green_tripdata_2019-09.csv.gz

Solution for Using Parquet Files Directly in Python Script ingest_data.py

In the

def main(params), add this line:parquet_name = 'output.parquet'Edit the code which downloads the files:

os.system(f"wget {url} -O {parquet_name}")Convert the downloaded

.parquetfile to CSV and rename it tocsv_nameto keep it relevant to the rest of the code:df = pd.read_parquet(parquet_name) df.to_csv(csv_name, index=False)

# wget is not recognized as an internal or external command

If you encounter the error "wget is not recognized as an internal or external command," wget needs to be installed.

This error may also cause messages like "No such file or directory: 'output.csv.gz'."

Installation Instructions:

On Ubuntu:

sudo apt-get install wgetOn macOS:

Use Homebrew:

brew install wgetOn Windows:

Use Chocolatey:

choco install wgetAlternatively, download a binary from GnuWin32 and place it in a location that is in your PATH (e.g.,

C:/tools/).

Alternative Windows Installation:

- Download the latest wget binary for Windows from eternallybored.

- If you downloaded the zip, extract all files (use 7-zip if the built-in utility gives an error).

- Rename the file

wget64.exetowget.exeif necessary. - Move

wget.exeto yourGit\mingw64\bin\directory.

Python Alternative:

Use the Python wget library:

First, install using pip:

pip install wgetUse it with Python:

python -m wget

You can also paste the file URL into your web browser to download normally, then move the file to your working directory.

Additional Recommendation:

Consider using the Python library requests for loading gz files.

# wget - ERROR: cannot verify <website> certificate (MacOS)

Firstly, make sure that you add ! before wget if you’re running your command in a Jupyter Notebook or CLI. Then, you can check one of these two things (from CLI):

Using the Python library wget installed with pip:

python -m wget <url>Use the usual command and add

--no-check-certificateat the end:!wget <website_url> --no-check-certificate

# Git Bash: Backslash as an escape character in Git Bash for Windows

For those who wish to use the backslash as an escape character in Git Bash for Windows, type the following in the terminal:

bash.escapeChar=\

(Note: There is no need to include this in your .bashrc file.)

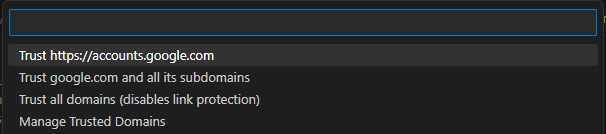

# GitHub Codespaces: How to store secrets

Instruction on how to store secrets that will be available in GitHub Codespaces. See Managing your account-specific secrets for GitHub Codespaces - GitHub Docs.

# GitHub Codespaces: Running pgadmin in Docker

With the default instructions, running pgadmin in Docker may result in a blank screen after logging into the pgadmin console. To resolve this, add the following two environment variables to your pgadmin configuration to allow it to work with Codespaces’ reverse proxy:

PGADMIN_CONFIG_PROXY_X_HOST_COUNT: 1

PGADMIN_CONFIG_PROXY_X_PREFIX_COUNT: 1

# Docker: Cannot connect to Docker daemon at unix:///var/run/docker.sock. Is the Docker daemon running?

Make sure you're able to start the Docker daemon. Check the issue immediately as described below:

Ensure the Docker daemon is running.

Update WSL in PowerShell with the following command:

wsl --update

# Docker - error during connect: In the default daemon configuration on Windows, the docker client must be run with elevated privileges

If you get this error:

docker: error during connect: In the default daemon configuration on Windows, the docker client must be run with elevated privileges to connect.: Post "http://%2F%2F.%2Fpipe%2Fdocker_engine/v1.24/containers/create": open //./pipe/docker_engine: The system cannot find the file specified.

See 'docker run --help'.

To resolve it on Windows, follow these guidelines based on your version:

Windows 10 Pro / 11 Pro Users:

- Ensure Hyper-V is enabled, as Docker can use it as a backend.

- Follow the Enable Hyper-V Option on Windows 10 / 11 tutorial.

Windows 10 Home / 11 Home Users:

- The 'Home' version doesn't support Hyper-V, so use WSL2 (Windows Subsystem for Linux).

- Refer to install WSL on Windows 11 for detailed instructions.

If you encounter the "WslRegisterDistribution failed with error: 0x800701bc" error:

- Update the WSL2 Linux Kernel by following the guidelines at GitHub: WSL Issue 5393.

# Docker: docker pull dbpage

Whenever a docker pull is performed (either manually or by docker-compose up), it attempts to fetch the given image name from a repository. If the repository is public, the fetch and download occur without any issues.

For instance:

docker pull postgres:13

docker pull dpage/pgadmin4

Be Advised: The Docker images we'll be using throughout the Data Engineering Zoomcamp are all public, unless otherwise specified. This means you are not required to perform a docker login to fetch them.

If you encounter the message:

docker login': denied: requested access to the resource is denied.

This is likely due to a typo in your image name. For instance:

$ docker pull dbpage/pgadmin4

This command will throw an exception:

Error response from daemon: pull access denied for dbpage/pgadmin4, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

This occurs because the actual image name is dpage/pgadmin4, not dbpage/pgadmin4.

How to fix it:

$ docker pull dpage/pgadmin4

Extra Notes: In some professional environments, the Docker image may be in a private repository that your DockerHub username has access to. In this case, you must:

- Execute:

$ docker login - Enter your username and password.

- Then perform the

docker pullagainst that private repository.

# Docker: "permission denied" error when creating a PostgreSQL Docker with a mounted volume on macOS M1

When attempting to run a Docker command similar to the one below:

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v $(pwd)/ny_taxi_postgres_data:/var/lib/postgresql/data \

-p 5432:5432 \

postgres:13

You encounter the error message:

docker: Error response from daemon: error while creating mount source path '/path/to/ny_taxi_postgres_data': chown /path/to/ny_taxi_postgres_data: permission denied.

Solution

Stop Rancher Desktop:

If you are using Rancher Desktop and face this issue, stop Rancher Desktop to resolve compatibility problems.Install Docker Desktop:

Install Docker Desktop, ensuring that it is properly configured and has the required permissions.Retry Docker Command:

Run the Docker command again after switching to Docker Desktop. This step resolves compatibility issues on some systems.

Note: The issue occurred because Rancher Desktop was in use. Switching to Docker Desktop resolves compatibility problems and allows for the successful creation of PostgreSQL containers with mounted volumes for the New York Taxi Database on macOS M1.

# Docker: can’t delete local folder that mounted to docker volume

When a PostgreSQL Docker container is created, it may create a folder on the local machine to mount to a volume inside the container. This folder is often owned by user 999 and has read and write protection, preventing deletion by conventional means such as dragging it to the trash.

If you encounter an access error or need to delete the folder, you can use the following command:

sudo rm -r -f docker_test/

rm: Command to remove files or directories.-r: Recursively remove directories and their contents.-f: Forcefully remove files/directories without prompting.docker_test/: The folder to be deleted.

# Docker: Docker won't start or is stuck in settings (Windows 10 / 11)

Ensure you are running the latest version of Docker for Windows. Download the updated version from Docker's official site. If the upgrade option in the menu doesn't work, uninstall and reinstall with the latest version.

If Docker is stuck on starting, try switching the containers by right-clicking the docker symbol from the running programs, and switch the containers from Windows to Linux or vice versa.

For Windows 10 / 11 Pro Edition:

# Docker: Should I run docker commands from the windows file system or a file system of a Linux distribution in WSL?

If you're running a Home Edition, you can still make it work with WSL2 (Windows Subsystem for Linux) by following the tutorial here.

If even after making sure your WSL2 (or Hyper-V) is set up accordingly, Docker remains stuck, you can try the following options:

- Reset to Factory Defaults

- Perform a fresh install.

# Docker: The input device is not a TTY (Docker run for Windows)

You may encounter this error:

$ docker run -it ubuntu bash

the input device is not a TTY. If you are using mintty, try prefixing the command with 'winpty'

Solution:

Use

winptybefore the Docker command:$ winpty docker run -it ubuntu bashAlternatively, create an alias:

echo "alias docker='winpty docker'" >> ~/.bashrcor

echo "alias docker='winpty docker'" >> ~/.bash_profile

Source: Stack Overflow

# Docker: Cannot pip install on Docker container (Windows)

You may encounter this error:

Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'NewConnectionError('<pip._vendor.urllib3.connection.HTTPSConnection object at 0x7efe331cf790>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution')': /simple/pandas/

Possible solution:

Run the following command:

winpty docker run -it --dns=8.8.8.8 --entrypoint=bash python:3.9

# Docker: ny_taxi_postgres_data is empty

Even after properly running the Docker script, the folder may appear empty in VS Code. For Windows, try the following steps:

Solution 1:

Run the Docker command with the absolute path quoted in the -v parameter:

winpty docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v "C:\Users\abhin\dataengg\DE_Project_git_connected\DE_OLD\week1_set_up\docker_sql/ny_taxi_postgres_data:/var/lib/postgresql/data" \

-p 5432:5432 \

postgres:13

This should resolve the visibility issue in the VS Code ny_taxi folder.

Note: Ensure the correct direction for the slashes: / versus \.

Solution 2:

Another possible solution for Windows is to finish the folder path with a forward slash /:

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v /"$(pwd)"/ny_taxi_postgres_data/:/var/lib/postgresql/data/ \

-p 5432:5432 \

postgres:13

These steps should help resolve the issue of the ny_taxi_postgres_data folder appearing empty in your Docker setup.

# Docker: Setting up Docker on Mac

For setting up Docker on macOS, you have two main options:

Download from Docker Website:

- Visit the official Docker website and download the Docker Desktop for Mac as a

.dmgfile. This method is generally reliable and avoids issues related to licensing changes.

- Visit the official Docker website and download the Docker Desktop for Mac as a

Using Homebrew:

Be aware that there can be conflicts when installing with Homebrew, especially between Docker Desktop and command-line tools. To avoid issues:

- Install Docker Desktop first.

- Then install the command line tools.

Commands:

brew install --cask dockerbrew install docker docker-composeFor more detailed issues related to

brew install, refer to this Issue.

For more details, you can check the article on Setting up Docker in macOS.

# Docker - Could not change permissions of directory "/var/lib/postgresql/data": Operation not permitted

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="admin" \

-e POSTGRES_DB="ny_taxi" \

-v "/mnt/path/to/ny_taxi_postgres_data":"/var/lib/postgresql/data" \

-p 5432:5432 \

postgres:13

Error Message:

The files belonging to this database system will be owned by user "postgres".

The database cluster will be initialized with locale "en_US.utf8".

The default database encoding has accordingly been set to "UTF8".

Data page checksums are disabled.

fixing permissions on existing directory /var/lib/postgresql/data ...

initdb: error: could not change permissions of directory "/var/lib/postgresql/data": Operation not permitted

Solution:

Create a local Docker volume and map it to the Postgres data directory

/var/lib/postgresql/data.- The volume name

dtc_postgres_volume_localmust match in both commands below:

docker volume create --name dtc_postgres_volume_local -d local- The volume name

Run the Docker container using the created volume:

docker run -it \ -e POSTGRES_USER="root" \ -e POSTGRES_PASSWORD="root" \ -e POSTGRES_DB="ny_taxi" \ -v dtc_postgres_volume_local:/var/lib/postgresql/data \ -p 5432:5432 \ postgres:13Verify the command works in Docker Desktop under Volumes. The

dtc_postgres_volume_localshould be listed, but the folderny_taxi_postgres_datawill be empty as an alternative configuration is used.

Alternate Error:

initdb: error: directory "/var/lib/postgresql/data" exists but is not empty

To resolve this, either remove or empty the directory "/var/lib/postgresql/data", or run initdb.

# Docker: invalid reference format: repository name must be lowercase (Mounting volumes with Docker on Windows)

Mapping volumes on Windows can be tricky. If the approach shown in the course video doesn't work for you, consider the following suggestions:

Move your data to a directory without spaces. For example, move from

C:/Users/Alexey Grigorev/git/...toC:/git/....Replace the

-vpart with one of these options:-v /c:/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data -v //c:/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data -v /c/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data -v //c/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data --volume //driveletter/path/ny_taxi_postgres_data/:/var/lib/postgresql/dataAdd

winptybefore the whole command:winpty docker run -it \ -e POSTGRES_USER="root" \ -e POSTGRES_PASSWORD="root" \ -e POSTGRES_DB="ny_taxi" \ -v /c:/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data \ -p 5432:5432 \ postgres:1Try adding quotes:

-v "/c:/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data" -v "//c:/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data" -v “/c/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data" -v "//c/some/path/ny_taxi_postgres_data:/var/lib/postgresql/data" -v "c:\some\path\ny_taxi_postgres_data":/var/lib/postgresql/dataNote: If Windows automatically creates a folder called

ny_taxi_postgres_data;C, it suggests a problem with volume mapping. Try deleting both folders and replacing the-vpart with other options. Using//c/instead of/c/might work, as it creates the correct folderny_taxi_postgres_data.A possible solution is using

"$(pwd)"/ny_taxi_postgres_data:/var/lib/postgresql/dataand pay attention to the placement of quotes.If none of these work, use a volume name instead of the path:

-v ny_taxi_postgres_data:/var/lib/postgresql/dataFor Mac, you can wrap

$(pwd)with quotes:docker run -it \ -e POSTGRES_USER="root" \ -e POSTGRES_PASSWORD="root" \ -e POSTGRES_DB="ny_taxi" \ -v "$(pwd)"/ny_taxi_postgres_data:/var/lib/postgresql/data \ -p 5432:5432 \ postgres:13

Source: StackOverflow

# Docker: Error response from daemon: invalid mode: \Program Files\Git\var\lib\postgresql\data.

Change the mounting path. Replace it with one of the following:

-v /e/zoomcamp/...:/var/lib/postgresql/data

Or

-v /c:/.../ny_taxi_postgres_data:/var/lib/postgresql/data

(Note: Include a leading slash in front of c:)

# Docker: Error response from daemon: error while creating buildmount source

You may get this error:

error while creating buildmount source path '/run/desktop/mnt/host/c/<your path>': mkdir /run/desktop/mnt/host/c: file exists

When you encounter the error above while rerunning your Docker command, it indicates that you should not mount on the second run. Here’s the initial problematic command:

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v <your path>:/var/lib/postgresql/data \

-p 5432:5432 \

postgres:13

To resolve the issue, use the revised command without the volume mount:

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-p 5432:5432 \

postgres:13

# Docker: build error: error checking context: 'can't stat '/home/user/repos/data-engineering/week_1_basics_n_setup/2_docker_sql/ny_taxi_postgres_data''.

This error appeared when running the command:

docker build -t taxi_ingest:v001 .

The issue often arises because the user ID of the directory ny_taxi_postgres_data was changed, causing permission errors when accessing it. To resolve this error, use a directory containing only the necessary files, Dockerfile and ingest_data.py.

If you need to change permissions, use the following command on Ubuntu:

sudo chown -R $USER dir_path

On Windows, follow the instructions in this guide: The Geek Page.

For more information, refer to this explanation on Stack Overflow: Docker build error checking context.

# Docker: ERRO[0000] error waiting for container: context canceled

You might have installed Docker via snap. Run the following command to verify:

sudo snap status docker

If you receive the response:

error: unknown command "status", see 'snap help'.

Then uninstall Docker and install it via the official website.

Error message: "Bind for 0.0.0.0:5432 failed: port is already allocated."

# Docker: build error checking context: can’t stat ‘/home/fhrzn/Projects/…./ny_taxi_postgres_data’

This issue occurs due to insufficient authorization rights to the host folder, which may cause it to appear empty.

Solution:

Add permission for everyone to the folder:

sudo chmod -R 777 <path_to_folder>

Example:

sudo chmod -R 777 ny_taxi_postgres_data/

# Docker: failed to solve with frontend dockerfile.v0: failed to read dockerfile: error from sender: open ny_taxi_postgres_data: permission denied.

This issue occurs on Ubuntu/Linux systems when attempting to rebuild the Docker container.

$ docker build -t taxi_ingest:v001 .

A folder is created to host the Docker files. When the build command is executed again, a permission error may occur because there are no permissions on this new folder. To resolve this, grant permissions by running the command:

$ sudo chmod -R 755 ny_taxi_postgres_data

If issues persist, use:

$ sudo chmod -R 777 ny_taxi_postgres_data

Note: 755 grants write access only to the owner.

# Docker: Docker network name

Get the network name via:

docker network ls

For more details, refer to the Docker network ls documentation.

# Docker: Error response from daemon: Conflict. The container name "pg-database" is already in use by container "xxx". You have to remove (or rename) that container to be able to reuse that name.

Sometimes, when you try to restart a Docker container configured with a network name, the error message appears.

To resolve this issue:

If the container is in a running state, stop it using:

docker stop <container_name>Then remove the container:

docker rm pg-database

Alternatively, you can use docker start instead of docker run to restart the Docker container without removing it.

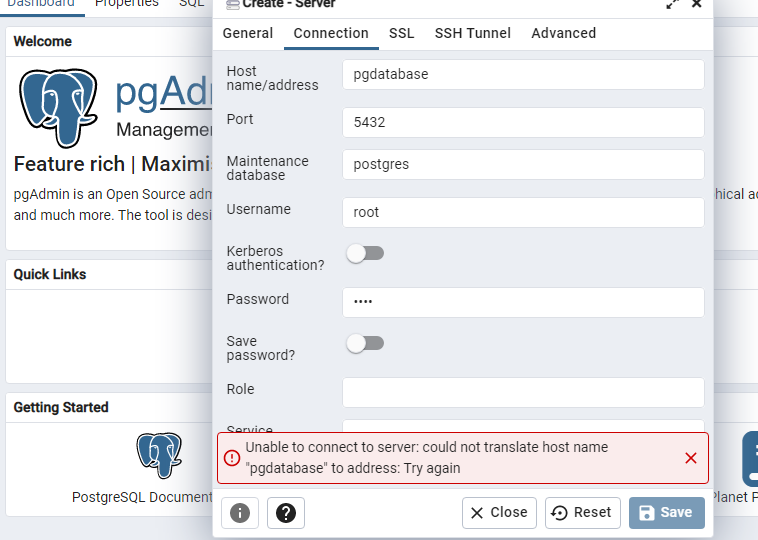

# Docker: ingestion when using docker-compose could not translate host name

Typical error:

n.exc.OperationalError: (psycopg2.OperationalError) could not translate host name "pgdatabase" to address: Name or service not known

Solution:

- Run

docker-compose up -dto start your containers. - Check which network is created by Docker, as it may differ from your expectations.

- Use the actual network name in your ingestion script instead of "pg-network".

- Confirm the correct database service name, replacing "pgdatabase" accordingly.

Example:

- "pg-network" might become "2docker_default".

# Docker: Cannot install docker on MacOS/Windows 11 VM running on top of Linux (due to Nested virtualization).

Before starting your VM, you need to enable nested virtualization. Run the following commands based on your CPU:

For Intel CPU:

modprobe -r kvm_intel modprobe kvm_intel nested=1For AMD CPU:

modprobe -r kvm_amd modprobe kvm_amd nested=1

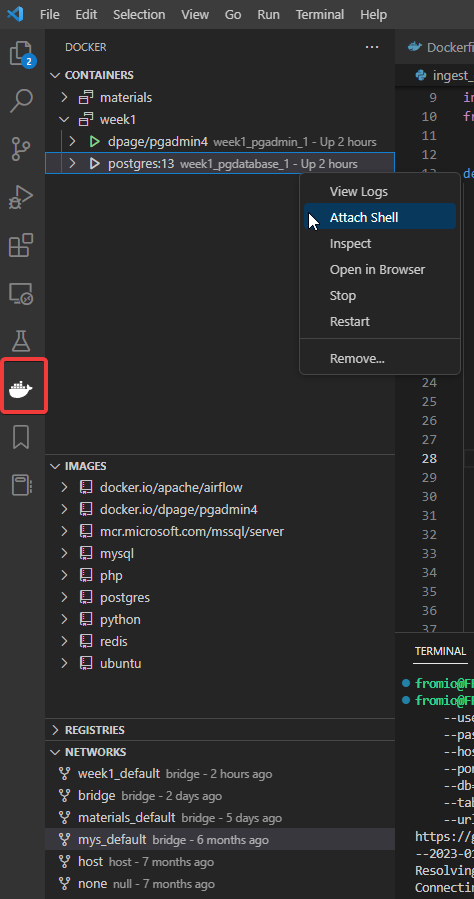

# Docker: Connecting from VS Code

It’s very easy to manage your Docker container, images, network, and compose projects from VS Code.

Install the official extension and launch it from the left side icon.

It will work even if your Docker runs on WSL2, as VS Code can easily connect with your Linux.

# Docker: How to stop a container?

Use the following command:

docker stop <container_id>

# Docker: PostgreSQL Database directory appears to contain a database. Database system is shut down

When you see this in logs, your container with PostgreSQL is not accepting any requests. Attempting to connect may result in the error:

connection failed: server closed the connection unexpectedly

This probably means the server terminated abnormally before or while processing the request.

To resolve this issue:

Delete Data Directory: Delete the directory with data (the one you map to the container using the

-vflag) and restart the container.Preserve Critical Data: If your data is critical, you may be able to reset the write-ahead log from within the Docker container. For more details, see here.

docker run -it \ -e POSTGRES_USER="root" \ -e POSTGRES_PASSWORD="root" \ -e POSTGRES_DB="ny_taxi" \ -v $(pwd)/ny_taxi_postgres_data:/var/lib/postgresql/data \ -p 5432:5432 \ --network pg-network \ postgres:13 \ /bin/bash -c 'gosu postgres pg_resetwal /var/lib/postgresql/data'

# Docker: Docker not installable on Ubuntu

On some versions of Ubuntu, the snap command can be used to install Docker.

sudo snap install docker

# Docker-Compose: mounting error

error: could not change permissions of directory "/var/lib/postgresql/data": Operation not permitted

If you have used the previous answer and created a local Docker volume, then you need to inform the compose file about the named volume:

dtc_postgres_volume_local: # Define the named volume here

- Services mentioned in the compose file automatically become part of the same network.

Steps:

Use the command:

docker volume inspect dtc_postgres_volume_localto see the location by checking the value of

Mountpoint.In some cases, after running

docker compose up, the mounting directory created is nameddocker_sql_dtc_postgres_volume_localinstead of the existingdtc_postgres_volume_local.Rename the existing

dtc_postgres_volume_localtodocker_sql_dtc_postgres_volume_local:- Be careful when performing this operation.

Remove the newly created one.

Run

docker compose upagain and check if the table is there.

# Docker-Compose: Error translating host name to address

Couldn’t translate host name to address

# Docker-Compose: Data retention (could not translate host name "pg-database" to address: Name or service not known)

Make sure the PostgreSQL database is running. Use the command to start containers in detached mode:

docker-compose up -d

Example output:

% docker compose up -d

[+] Running 2/2

⠿ Container pg-admin Started

⠿ Container pg-database Started

To view the containers use:

docker ps

Example output:

% docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

faf05090972e postgres:13 "docker-entrypoint.s…" 39 seconds ago Up 37 seconds 0.0.0.0:5432->5432/tcp pg-database

6344dcecd58f dpage/pgadmin4 "/entrypoint.sh" 39 seconds ago Up 37 seconds 443/tcp, 0.0.0.0:8080->80/tcp pg-adminhw

To view logs for a container:

docker logs <containerid>

Example logs for PostgreSQL:

% docker logs faf05090972e

PostgreSQL Database directory appears to contain a database; Skipping initialization

2022-01-25 05:58:45.948 UTC [1] LOG: starting PostgreSQL 13.5 (Debian 13.5-1.pgdg110+1) on aarch64-unknown-linux-gnu, compiled by gcc (Debian 10.2.1-6) 10.2.1 20210110, 64-bit

2022-01-25 05:58:45.948 UTC [1] LOG: listening on IPv4 address "0.0.0.0", port 5432

2022-01-25 05:58:45.948 UTC [1] LOG: listening on IPv6 address "::", port 5432

2022-01-25 05:58:45.954 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"

2022-01-25 05:58:45.984 UTC [28] LOG: database system was interrupted; last known up at 2022-01-24 17:48:35 UTC

2022-01-25 05:58:48.581 UTC [28] LOG: database system was not properly shut down; automatic recovery in progress

2022-01-25 05:58:48.602 UTC [28] LOG: redo starts at 0/872A5910

2022-01-25 05:59:33.726 UTC [28] LOG: invalid record length at 0/98A3C160: wanted 24, got 0

2022-01-25 05:59:33.726 UTC [28] LOG: redo done at 0/98A3C128

2022-01-25 05:59:48.051 UTC [1] LOG: database system is ready to accept connections

If docker ps doesn’t show pg-database running, use:

docker ps -a

- This will show all containers, either running or stopped.

- Get the container ID for

pg-database-1and run the appropriate command.

If you lose database data after executing docker-compose up and cannot successfully execute your ingestion script due to the following error:

sqlalchemy.exc.OperationalError: (psycopg2.OperationalError) could not translate host name /data_pgadmin:/var/lib/pgadmin"pg-database" to address: Name or service not known

- Docker Compose may be creating its own default network since it is no longer specified in the command or file.

- Check logs after executing

docker-compose upto find the network name and change the network name argument in your ingestion script.

If problems persist with pgcli, consider using HeidiSQL.

# Docker-Compose: Hostname does not resolve

When encountering the error:

Error response from daemon: network 66ae65944d643fdebbc89bd0329f1409dec2c9e12248052f5f4c4be7d1bdc6a3 not found

Try the following steps:

- Run

docker ps -ato see all stopped and running containers. - Remove all containers to clean up the environment.

- Execute

docker-compose up -dagain.

If facing issues connecting to the server at localhost:8080 with the error:

Unable to connect to server: could not translate host name 'pg-database' to address: Name does not resolve

Consider these solutions:

- Use a new hostname without dashes, e.g.,

pgdatabase. - Make sure to specify the Docker network and use the same network in both containers in your

docker-compose.ymlfile.

Example docker-compose.yml:

services:

pgdatabase:

image: postgres:13

environment:

- POSTGRES_USER=root

- POSTGRES_PASSWORD=root

- POSTGRES_DB=ny_taxi

volumes:

- "./ny_taxi_postgres_data:/var/lib/postgresql/data:rw"

ports:

- "5431:5432"

networks:

- pg-network

pgadmin:

image: dpage/pgadmin4

environment:

- PGADMIN_DEFAULT_EMAIL=admin@admin.com

- PGADMIN_DEFAULT_PASSWORD=root

ports:

- "8080:80"

networks:

- pg-network

networks:

pg-network:

name: pg-network

# Docker-Compose: PgAdmin – no database in PgAdmin

When you log into PgAdmin and see an empty database, the following solution can help:

Run:

docker-compose up

And at the same time run:

docker build -t taxi_ingest:v001 .

# NETWORK NAME IS THE SAME AS THAT CREATED BY DOCKER COMPOSE

docker run -it \

--network=pg-network \

taxi_ingest:v001 \

--user=postgres \

--password=postgres \

--host=db \

--port=5432 \

--db=ny_taxi \

--table_name=green_tripdata \

--url=${URL}

It's important to use the same --network as stated in the docker-compose.yaml file.

The docker-compose.yaml file might not specify a network, as shown below:

services:

db:

container_name: postgres

image: postgres:17-alpine

environment:

...

ports:

- '5433:5432'

volumes:

- ...

pgadmin:

container_name: pgadmin

image: dpage/pgadmin4:latest

environment:

...

ports:

- '8080:80'

volumes:

- ...

volumes:

vol-pgdata:

name: vol-pgdata

vol-pgadmin_data:

name: vol-pgadmin_data

If the network name is not specified, it is generated automatically: The name of the directory containing the docker-compose.yaml file in lowercase + _default.

You can find the network’s name when running docker-compose up:

pg-database Pulling pg-database Pulled

Network week_1_default Creating

Network week_1_default Created

# Docker-Compose: Persist PGAdmin docker contents on GCP

One common issue when running Docker Compose on GCP is that PostgreSQL might not persist its data to the specified path. For example:

services:

...

pgadmin:

...

volumes:

- "./pgadmin:/var/lib/pgadmin:wr"

This setup might not work. To resolve this, use Docker Volume to make the data persist:

services:

...

pgadmin:

...

volumes:

- pgadmin:/var/lib/pgadmin

volumes:

pgadmin:

This configuration change ensures the persistence of the PGAdmin data on GCP.

# Docker: Docker engine stopped_failed to fetch extensions

The Docker engine may crash continuously and fail to work after restart. You might see error messages like "docker engine stopped" and "failed to fetch extensions" repeatedly on the screen.

Solution:

- Check if you have the latest version of Docker installed. Update Docker if necessary.

- If the problem persists, consider reinstalling Docker.

- Note: You will need to fetch images again, but there should be no other issues.

# Docker-Compose: Persist PGAdmin configuration

To persist pgAdmin configuration, such as the server name, modify your docker-compose.yml by adding a "volumes" section:

services:

pgdatabase:

[...]

pgadmin:

image: dpage/pgadmin4

environment:

- PGADMIN_DEFAULT_EMAIL=admin@admin.com

- PGADMIN_DEFAULT_PASSWORD=root

volumes:

- "./pgAdmin_data:/var/lib/pgadmin/sessions:rw"

ports:

- "8080:80"

In the example above, "pgAdmin_data" is a folder on the host machine, and "/var/lib/pgadmin/sessions" is the session settings folder in the pgAdmin container.

Before running docker-compose up on the YAML file, provide the pgAdmin container with access permissions to the "pgAdmin_data" folder. The container runs with a username "5050" and user group "5050". Use the following command to set permissions:

sudo chown -R 5050:5050 pgAdmin_data

# Docker-Compose - dial unix /var/run/docker.sock: connect: permission denied

This happens if you did not create the docker group and add your user. Follow these steps from the link: guides/docker-without-sudo.md at main · sindresorhus/guides · GitHub

- Press

Ctrl+Dto log out and log back in again.

If you are tired of having to set up your database connection each time you start the containers, create a volume for pgAdmin:

In your docker-compose.yaml file, add the following under your pgAdmin service:

services:

pgadmin:

volumes:

- type: volume

source: pgadmin_data

target: /var/lib/pgadmin

Also, add the following to the end of the file:

volumes:

pgadmin_data:

This configuration will maintain the state so that pgAdmin remembers your previous connections.

# Docker: docker-compose still not available after changing .bashrc

This issue can occur after installing Docker Compose in a Google Cloud VM, as demonstrated in video 1.4.1.

If the downloaded Docker Compose file from GitHub is named docker-compose-linux-x86_64, you may need to rename it for convenience. Here's how to resolve the issue:

Rename

docker-compose-linux-x86_64todocker-composeusing the following command:mv docker-compose-linux-x86_64 docker-compose

By doing this, you can use the docker-compose command directly.

# Docker-Compose: Error getting credentials after running docker-compose up -d

Installing pass via sudo apt install pass helped to solve the issue. More about this can be found here: https://github.com/moby/buildkit/issues/1078

# Docker-Compose: Errors pertaining to docker-compose.yml and pgadmin setup

For those experiencing problems with Docker Compose, getting data in PostgreSQL, and similar issues, follow these steps:

- Create a new volume on Docker, either using the command line or Docker Desktop app.

- Modify your

docker-compose.ymlfile as needed to fix any setup issues. - Set

low_memory=Falsewhen importing the CSV file using pandas:

df = pd.read_csv('yellow_tripdata_2021-01.csv', nrows=1000, low_memory=False)

- Use the specified function in your

upload-data.ipynbfor better tracking of the ingestion process.

from time import time

counter = 0

time_counter = 0

while True:

t_start = time()

df = next(df_iter)

df.tpep_pickup_datetime = pd.to_datetime(df.tpep_pickup_datetime)

df.tpep_dropoff_datetime = pd.to_datetime(df.tpep_dropoff_datetime)

df.to_sql(name='yellow_taxi_data', con=engine, if_exists='append')

t_end = time()

t_elapsed = t_end - t_start

print('Chunk Insertion Done! Time taken: %.2f seconds' %(t_elapsed))

counter += 1

time_counter += t_elapsed

if counter == 14:

print('All Chunks Inserted! Total Time Taken: %.2f seconds' %(time_counter))

break

Order of Execution:

- Open the terminal in the

2_docker_sqlfolder and run:docker compose up - Ensure no other containers are running except the ones you just executed (pgAdmin and pgdatabase).

- Open Jupyter Notebook and begin the data ingestion.

- Open pgAdmin and set up a server. Make sure you use the same configurations as your

docker-compose.ymlfile, such as the same name (pgdatabase), port, and database name (ny_taxi).

# Docker: Compose up -d error getting credentials - err: exec: "docker-credential-desktop": executable file not found in %PATH%, out: ``

To resolve this error, follow these steps:

- Locate the

config.jsonfile for Docker, typically found in your home directory atUsers/username/.docker. - Modify the

credsStoresetting tocredStore. - Save the file and re-run your Docker Compose command.

# Docker-Compose: Which docker-compose binary to use for WSL?

To determine which docker-compose binary to download from Docker Compose releases, you can check your system with the following commands:

To check the system type:

uname -s # This will most likely return 'Linux'To check the system architecture:

uname -m # This will return your system's 'flavor'

Alternatively, you can use the following command to download docker-compose directly:

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

# Docker-Compose - Error undefined volume in Windows/WSL

If you wrote the docker-compose.yaml file exactly like the video, you might run into an error:

service "pgdatabase" refers to undefined volume dtc_postgres_volume_local: invalid compose project

To resolve this, include the volume definition in your docker-compose.yaml file by adding:

dt_postgres_volume_local:

This should be added under the volumes section. Make sure your file looks similar to this:

volumes:

dtc_postgres_volume_local:

# Docker-Compose: cannot execute binary file: Exec format error

This error indicates that the docker-compose executable cannot be opened in the current OS. Ensure that the file you download from GitHub matches your system environment.

As of 2025/1/17, docker-compose (v2.32.4) docker-compose-linux-aarch64 does not work. Try v2.32.3 docker-compose-linux-x86_64.

# Docker: Postgres container fails to launch with exit code (1) when attempting to compose

This issue arises because the Postgres database is not initialized before executing docker-compose up -d. While there are other potential solutions discussed in this thread, you can resolve it by initializing the database first. Then, the Docker Compose will work as expected.

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v $(pwd)/ny_taxi_data:/var/lib/postgresql/data \

-p 5432:5432 \

--network=pg-network \

--name=pg_database \

postgres:13

# WSL: Docker directory permissions error

initdb: error: could not change permissions of directory

WSL and Windows do not manage permissions in the same way, causing conflict if using the Windows file system rather than the WSL file system.

Solution: Use Docker volumes.

Volume is used for storage of persistent data and not for transferring files. A local volume is unnecessary.

This resolves permission issues and allows for better management of volumes.

Note: The user: is not necessary if using Docker volumes but is required if using a local drive.

services:

postgres:

image: postgres:15-alpine

container_name: postgres

user: "0:0"

environment:

- POSTGRES_USER=postgres

- POSTGRES_PASSWORD=postgres

- POSTGRES_DB=ny_taxi

volumes:

- "pg-data:/var/lib/postgresql/data"

ports:

- "5432:5432"

networks:

- pg-network

pgadmin:

image: dpage/pgadmin4

container_name: pgadmin

user: "${UID}:${GID}"

environment:

- PGADMIN_DEFAULT_EMAIL=email@some-site.com

- PGADMIN_DEFAULT_PASSWORD=pgadmin

volumes:

- "pg-admin:/var/lib/pgadmin"

ports:

- "8080:80"

networks:

- pg-network

networks:

pg-network:

name: pg-network

volumes:

pg-data:

pg-admin:

# WSL: Insufficient system resources exist to complete the requested service.

Cause:

This error occurs because some applications are not updated. Specifically, check for any pending updates for Windows Terminal, WSL, and Windows Security updates.

Solution:

To update Windows Terminal:

- Open the Microsoft Store.

- Go to your library of installed apps.

- Search for Windows Terminal.

- Update the app.

- Restart your system to apply the changes.

For updating Windows Security updates:

- Go to Windows Updates settings.

- Check for any pending updates, especially security updates.

- Restart your system once the updates are downloaded and installed successfully.

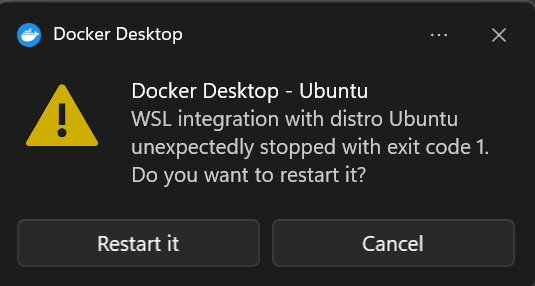

# WSL: WSL integration with distro Ubuntu unexpectedly stopped with exit code 1.

Upon restarting, the same issue appears and occurs unexpectedly on Windows.

Solutions:

Fixing DNS Issue

This solution is credited to reddit and has worked for some users.

reg add "HKLM\System\CurrentControlSet\Services\Dnscache" /v "Start" /t REG_DWORD /d "4" /fRestart your computer and then re-enable it with the following command:

reg add "HKLM\System\CurrentControlSet\Services\Dnscache" /v "Start" /t REG_DWORD /d "2" /fRestart your OS again. It should work.

Switch to Linux Containers

- Right-click on the running Docker icon (next to the clock).

- Choose "Switch to Linux containers."

bash: conda: command not found

Database is uninitialized and superuser password is not specified.

Database is uninitialized and superuser password is not specified.

# WSL: Permissions too open at Windows

Issue when trying to run the GPC VM through SSH via WSL2, likely because WSL2 isn’t looking for .ssh keys in the correct folder. The command attempted:

ssh -i gpc [username]@[my external IP]

Solutions

Use

sudoCommandTry using

sudobefore executing the command:sudo ssh -i gpc [username]@[my external IP]Change Permissions

Navigate to your folder and change the permissions for the private key SSH file:

chmod 600 gpcCreate a

.sshFolder in WSL2Navigate to your home directory:

cd ~Create a

.sshfolder:mkdir .sshCopy the content from the Windows

.sshfolder to the newly created.sshfolder:cp -r /mnt/c/Users/YourUsername/.ssh/* ~/.ssh/Adjust the permissions of the files and folders in the

.sshdirectory if necessary.

# WSL: Could not resolve host name

WSL2 may not be referencing the correct .ssh/config path from Windows. You can create a config file in the home directory of WSL2 by following these steps:

Navigate to your home directory:

cd ~Create the

.sshdirectory:mkdir .sshCreate a

configfile in the.sshfolder with the following content:HostName [GPC VM external IP] User [username] IdentityFile ~/.ssh/[private key]

# PGCLI - connection failed: :1), port 5432 failed: could not receive data from server: Connection refused could not send SSL negotiation packet: Connection refused

To resolve the connection failure with PGCLI, use the following command to connect via socket:

pgcli -h 127.0.0.1 -p 5432 -u root -d ny_taxi

Ensure the database server is running and properly configured to accept connections.

# PGCLI: Should we run pgcli inside another docker container?

In this section of the course, the 5432 port of PostgreSQL is mapped to your computer’s 5432 port. This means you can access the PostgreSQL database via pgcli directly from your computer.

So, no, you don’t need to run it inside another container. Your local system will suffice.

# PGCLI - FATAL: password authentication failed for user "root" (You already have Postgres)

For a more visual and detailed explanation, feel free to check the video 1.4.2 - Port Mapping and Networks in Docker.

If you want to debug the issue on MacOS, you can try the following steps:

Check if something is blocking your port:

Use the

lsofcommand to find out which application is using a specific port on your local machine:lsof -i :5432List running PostgreSQL services:

Use

launchctlto list running postgres services on your local machine.Unload the running service:

Unload the launch agent for the PostgreSQL service, which will stop the service and free up the port:

launchctl unload -w ~/Library/LaunchAgents/homebrew.mxcl.postgresql.plistRestart the service:

launchctl load -w ~/Library/LaunchAgents/homebrew.mxcl.postgresql.plistChange the port:

Changing the port from

5432:5432to5431:5432can help avoid this error.

# PGCLI - PermissionError: [Errno 13] Permission denied: '/some/path/.config/pgcli'

I encountered this error:

pgcli -h localhost -p 5432 -U root -d ny_taxi

Traceback (most recent call last):

File "/opt/anaconda3/bin/pgcli", line 8, in <module>

sys.exit(cli())

File "/opt/anaconda3/lib/python3.9/site-packages/click/core.py", line 1128, in __call__

return self.main(*args, **kwargs)

File "/opt/anaconda3/lib/python3.9/site-packages/click/core.py", line 1053, in main

rv = self.invoke(ctx)

File "/opt/anaconda3/lib/python3.9/site-packages/click/core.py", line 1395, in invoke

return ctx.invoke(self.callback, **ctx.params)

File "/opt/anaconda3/lib/python3.9/site-packages/click/core.py", line 754, in invoke

return __callback(*args, **kwargs)

File "/opt/anaconda3/lib/python3.9/site-packages/pgcli/main.py", line 880, in cli

os.makedirs(config_dir)

File "/opt/anaconda3/lib/python3.9/os.py", line 225, in makedirspython

mkdir(name, mode)

PermissionError: [Errno 13] Permission denied: '/Users/vray/.config/pgcli'

Solution 1:

This error indicates that your user doesn’t have the necessary permissions to access or modify the directory or file (/some/path/.config/pgcli). This can occur in Docker environments when privileges are assigned to root instead of the current user.

To resolve this:

Check the file permissions:

ls -l /some/path/.config/pgcliChange the ownership/permissions so that your user has the necessary permissions:

sudo chown -R user_name /Users/user_name/.configsudostands for Super User DO.chownmeans change owner.-Rapplies recursively.user_nameis your PC username (e.g., vray).

Solution 2:

Make sure you install pgcli without using sudo. The recommended approach is to use conda/anaconda to avoid affecting your system Python.

If conda install gets stuck at "Solving environment," try these alternatives:

https://stackoverflow.com/questions/63734508/stuck-at-solving-environment-on-anaconda

# PGCLI - no pq wrapper available.

Error:

ImportError: no pq wrapper available.

Problem Details:

- Could not import

\dt opg 'c' implementation: No module named 'psycopg_c'couldn't import psycopg 'binary' implementation: No module named 'psycopg_binary'couldn't import psycopg 'python' implementation: libpq library not found

Solution:

Check Python Version:

Ensure your Python version is at least 3.9. The

'psycopg2-binary'might fail to install on older versions like 3.7.3.$ python -VEnvironment Setup:

If your Python version is not 3.9, create a new environment:

$ conda create --name de-zoomcamp python=3.9 $ conda activate de-zoomcamp

Install Required Libraries:

Install Postgres libraries:

$ pip install psycopg2-binary $ pip install psycopg_binary

Upgrade pgcli:

If the above steps do not work, try upgrading

pgcli:$ pip install --upgrade pgcli

Install pgcli via Conda:

Make sure to also install

pgcliusing conda:$ conda install -c conda-forge pgcli

If you follow these steps, you should be able to resolve the issue.

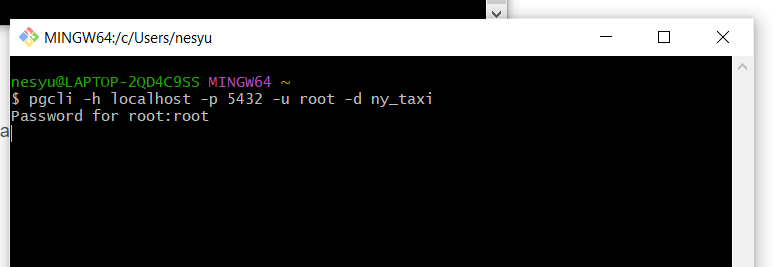

# PGCLI - stuck on password prompt

If your Bash prompt is stuck on the password command for postgres:

Use winpty:

winpty pgcli -h localhost -p 5432 -u root -d ny_taxi

Alternatively, try using Windows Terminal or the terminal in VS Code.

# PGCLI -connection failed: FATAL: password authentication failed for user "root"

The error above was faced continually despite inputting the correct password.

Stop the PostgreSQL service on Windows

Using WSL: Completely uninstall PostgreSQL 12 from Windows and install

postgresql-clienton WSL:

sudo apt install postgresql-client-common postgresql-client libpq-dev

Change the port of the Docker container

Keep the Database Connection:

If you encounter the error:

PGCLI -connection failed: FATAL: password authentication failed for user "root"

It might be because the connection to the Postgres:13 image was closed. Ensure you keep the database connected in order to continue with the tutorial steps, using the following command:

docker run -it \

-e POSTGRES_USER=root \

-e POSTGRES_PASSWORD=root \

-e POSTGRES_DB=ny_taxi \

-v d:/git/data-engineering-zoomcamp/week_1/docker_sql/ny_taxi_postgres_data:/var/lib/postgresql/data \

-p 5432:5432 \

postgres:13

You should see this

2024-01-26 20:14:43.124 UTC [1] LOG: database system is ready to accept connections

Change the Port for Docker PostgreSQL:

After running the command

pgcli -h localhost -p 5432 -u root -d ny_taxi, if prompted for a password, the error may persist due to local Postgres installation. To resolve this port conflict between host and container:- Configure your Docker PostgreSQL container to use a different port. Map it to a different port on your host machine:

docker run -it \

-e POSTGRES_USER="root" \

-e POSTGRES_PASSWORD="root" \

-e POSTGRES_DB="ny_taxi" \

-v c:/workspace/de-zoomcamp/1_intro_to_data_engineering/docker_sql/ny_taxi_postgres_data:/var/lib/postgresql/data \

-p 5433:5432 \

postgres:13

5433refers to the port on the host machine.5432refers to the port inside the Docker Postgres container.

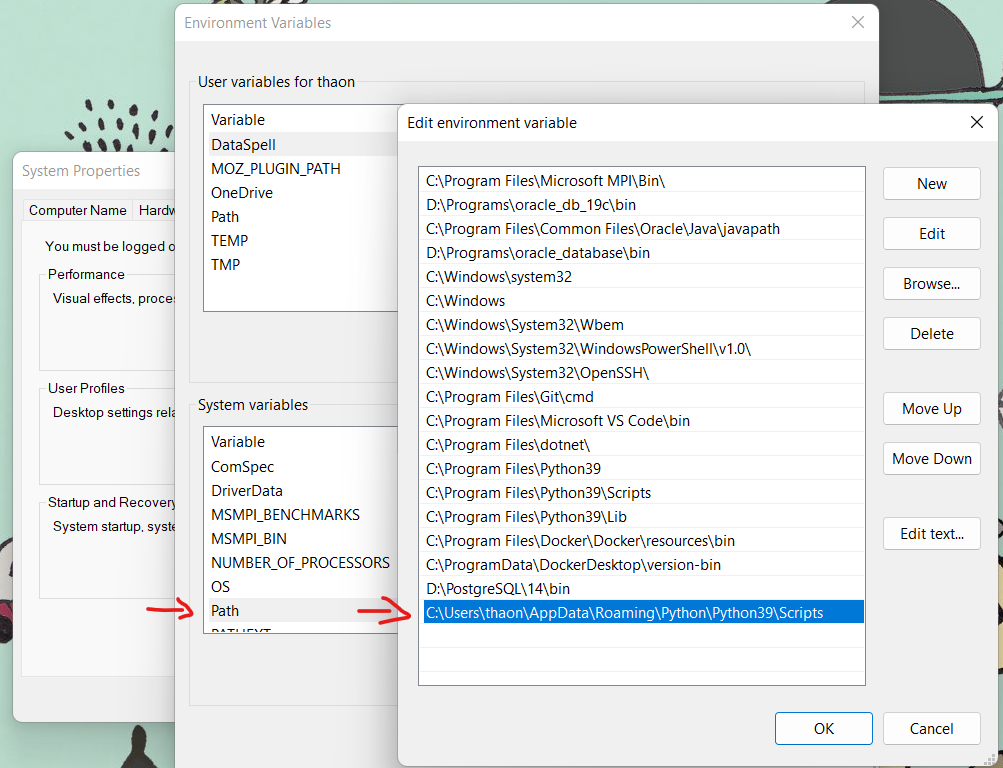

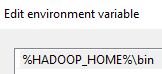

# PGCLI - pgcli: command not found

Problem

If you have already installed pgcli but Bash or the Windows Terminal doesn't recognize the command:

- On Git Bash:

bash: pgcli: command not found - On Windows Terminal:

pgcli: The term 'pgcli' is not recognized…

Solution

Try adding the Python path to the Windows PATH variable:

- Use the command to get the location:

pip list -v - Copy the path, which looks like:

C:\Users\...\AppData\Roaming\Python\Python39\site-packages - Replace

site-packageswithScripts:C:\Users\...\AppData\Roaming\Python\Python39\Scripts

It might be that Python is installed elsewhere. For example, it could be under:

c:\python310\lib\site-packages

In that case, you should add:

c:\python310\lib\Scriptsto PATH.

Instructions

- Add the determined path to

Path(orPATH) in System Variables.

Reference

# PGCLI - running in a Docker container

If running pgcli locally causes issues or you do not want to install it on your machine, you can use it within a Docker container instead.

Below is the usage with values used in the course videos for:

- Network name (Docker network)

- Postgres-related variables for pgcli

- Hostname

- Username

- Port

- Database name

docker run -it --rm --network pg-network ai2ys/dockerized-pgcli:4.0.1

Then execute the following pgcli command:

pgcli -h pg-database -U root -p 5432 -d ny_taxi

You'll be prompted for the password for the user root.

Example Output:

Server: PostgreSQL 16.1 (Debian 16.1-1.pgdg120+1)

Version: 4.0.1

Home: [pgcli.com](http://pgcli.com)

To list tables:

root@pg-database:ny_taxi> \dt

+--------+------------------+-------+-------+

| Schema | Name | Type | Owner |

|--------+------------------+-------+-------|

| public | yellow_taxi_data | table | root |

+--------+------------------+-------+-------+

SELECT 1

Time: 0.009s

root@pg-database:ny_taxi>

# RRPGCLI: Case sensitive use of “Quotations” around columns with capital letters

PULocationID will not be recognized, but "PULocationID" will be. This is because unquoted identifiers are case insensitive. See docs.

# PGCLI - error column c.relhasoids does not exist

When using the command \d <database name> you get the error column c.relhasoids does not exist.

Resolution:

- Uninstall pgcli.

- Reinstall pgcli.

- Restart your PC.

# Postgres: bind: address already in use

Issue

When attempting to start the Docker Postgres container, you may encounter the error message:

Error - postgres port is already in use.

Solutions

Option 1: Identify and Stop the Service

Determine which service is using the port by running:

sudo lsof -i :5432Stop the service that is using the port:

sudo service postgresql stop

Option 2: Map to a Different Port

For a more long-term solution, consider mapping to a different port:

- Map local port 5433 to container port 5432 in your Docker configuration (

Dockerfileordocker-compose.yml). - If using a VM, ensure that port 5433 is forwarded in the host machine configuration.

This approach prevents conflicts and allows the Docker Postgres container to run without interruption.

# PGCLI - After installing PGCLI and checking with `pgcli --help` we get the error: `ImportError: no pq wrapper available`

The error persists because the psycopg library cannot find the required libpq library. Ensure the required PostgreSQL client library is installed:

sudo apt install libpq-dev

Rebuild psycopg:

Uninstall the existing packages:

pip uninstall psycopg psycopg_binary psycopg_c -yReinstall psycopg:

pip install psycopg --no-binary psycopg

The issue should be resolved by now. However, if you still encounter the error:

ModuleNotFoundError: No module named 'psycopg2'

Then run the following:

pip install psycopg2-binary

# Postgres - OperationalError: (psycopg2.OperationalError) connection to server at "localhost" (::1), port 5432 failed: FATAL: password authentication failed for user "root"

This error occurs when uploading data via a connection in Jupyter Notebook:

engine = create_engine('postgresql://root:root@localhost:5432/ny_taxi')

Possible Solutions:

Port Conflict:

- Port 5432 might be occupied by another Postgres installation on your local machine. This can lead to your connection not reaching Docker.

- Try using a different port, such as 5431, or verify the port mapping.

- Alternatively, remove any old or unnecessary Postgres installations if they're not in use.

Windows Service Check:

- Check for any running services on Windows that might be using Postgres.

- Stopping such services might resolve the issue.

# Postgres: connection failed: connection to server at "127.0.0.1", port 5432 failed: FATAL: password authentication failed for user "root"

To resolve the issue of a failed connection to PostgreSQL due to password authentication, consider the following steps:

Check Port Usage: Ensure that port 5432 is properly forwarded. If it is being used by another process, follow these steps to kill it:

sudo lsof -i :5432 sudo kill -9 PIDFor Windows Users: If PostgreSQL is running locally and pgAdmin4 is using the 5432 port, follow these instructions:

- Press Win + R to open the Run dialog.

- Type

services.mscand press Enter. - In the Services window, scroll down to find a service named like

PostgreSQL,postgresql-x64-13, or similar, depending on your PostgreSQL version. - Right-click the PostgreSQL service and select Stop.

# Postgres - OperationalError: (psycopg2.OperationalError) connection to server at "localhost" (::1), port 5432 failed: FATAL: role "root" does not exist

This error can occur in the following scenarios:

- Using

pgcli:pgcli -h localhost -p 5432 -U root -d ny_taxi - Uploading data via a connection in a Jupyter notebook:

engine = create_engine('postgresql://root:root@localhost:5432/ny_taxi')

Solutions:

Port Change:

- Change the port from 5432 to another port (e.g., 5431).

- Example: Change

5432:5432to5431:5432.

User Change:

- Change

POSTGRES_USER=roottoPGUSER=postgres.

- Change

Docker Solution:

- Run

docker compose down. - Remove the folder containing the Postgres volume.

- Run

docker compose upagain.

- Run

Additional Resources:

For more details, refer to this Stack Overflow discussion.

# Postgres - OperationalError: (psycopg2.OperationalError) connection to server at "localhost" (::1), port 5432 failed: FATAL: database "ny_taxi" does not exist

OperationalError: (psycopg2.OperationalError) connection to server at "localhost" (::1), port 5432 failed: FATAL: database "ny_taxi" does not exist

Make sure PostgreSQL is running. You can check that by running:

docker ps

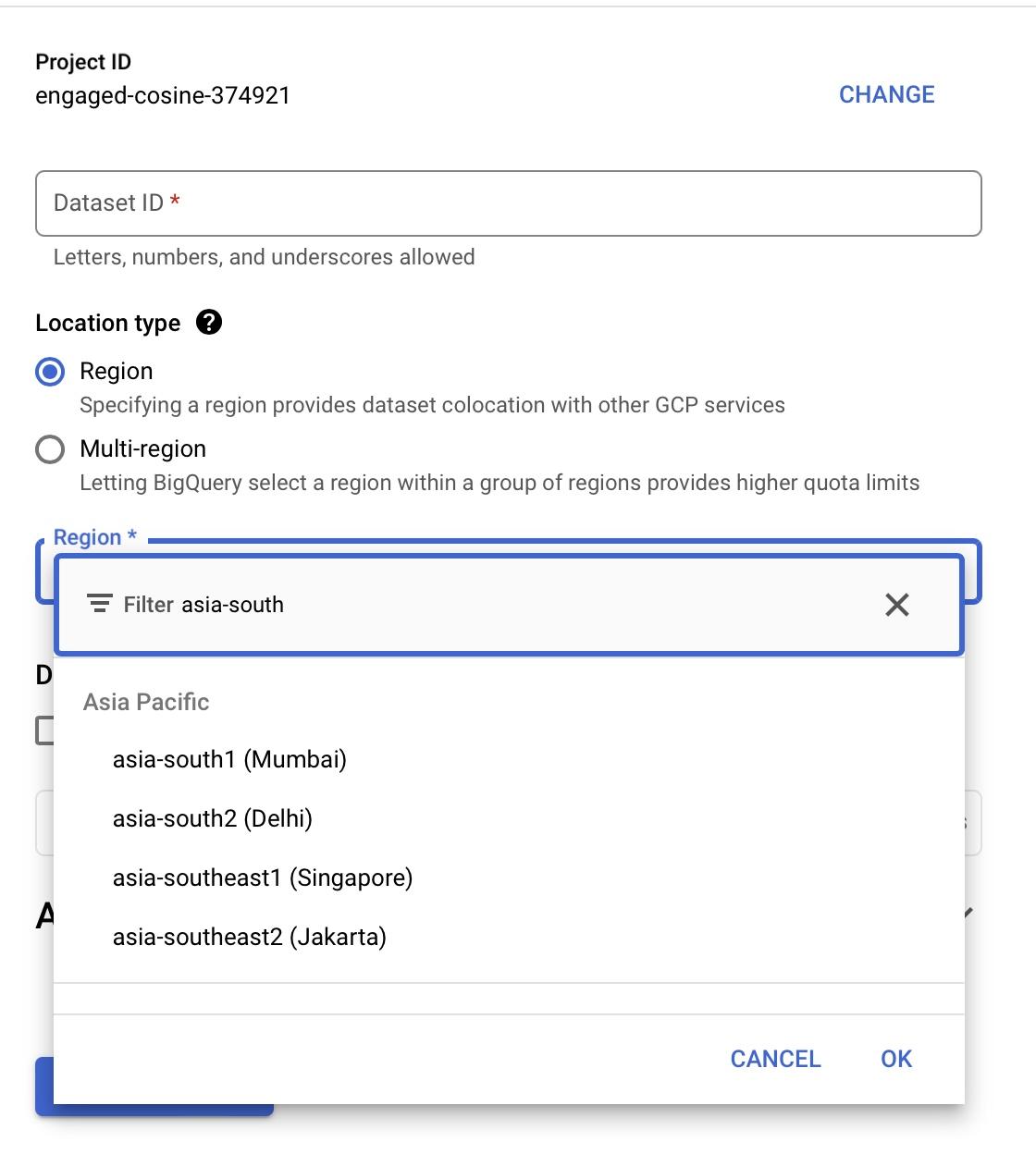

Solution: