Breaking into data engineering takes real, hands-on experience with production tools, but most courses stop at theory.

The Data Engineering Zoomcamp changes that. It’s a free data engineering course that teaches you how to build production-grade data pipelines from start to finish. You’ll work with Docker, Terraform, BigQuery, dbt, Spark, and Kafka, and graduate with a portfolio project and a certificate.

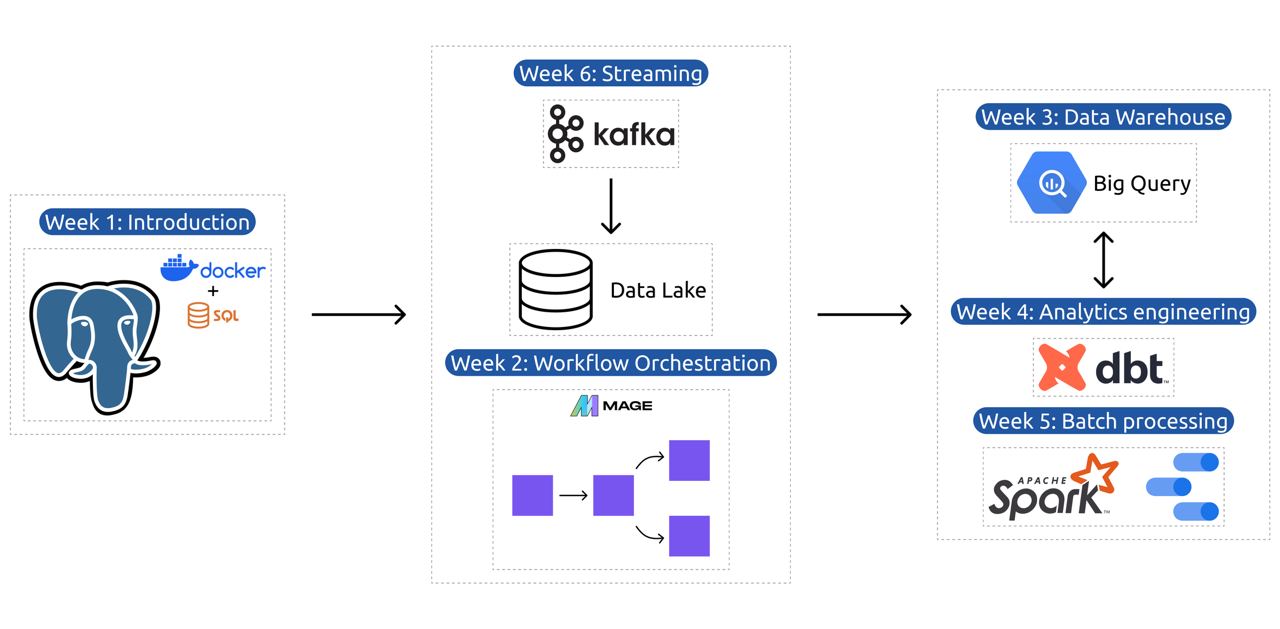

Complete Data Engineering Zoomcamp curriculum: from infrastructure setup to stream processing

It’s ideal for beginners and career switchers preparing for junior data engineer roles. It also suits experienced professionals who want to refresh their knowledge, expand their network, or try mentoring less experienced learners.

Unlike most courses, DE Zoomcamp helps you build your public portfolio, share your work confidently, and connect with a global community for feedback, mentorship, and career opportunities.

TL;DR: Data Engineering Zoomcamp is a free 9-week course on building production-ready data pipelines. The next cohort starts in January 2026. Join the course here.

Table of Contents

- What is the Data Engineering Zoomcamp?

- Why Learn Data Engineering?

- Who Can Learn Data Engineering?

- Course Prerequisites

- Course Curriculum: What You’ll Learn

- How the Data Engineering Zoomcamp Works

- Zoomcamp vs. Bootcamp

- How to Get Started

- Testimonials

- Frequently Asked Questions

What is the Data Engineering Zoomcamp?

Data Engineering Zoomcamp is a 9-week program that follows a clear progression: infrastructure setup, workflow orchestration, data warehousing, analytics engineering, batch processing, streaming, and a final capstone project.

Data Engineering Zoomcamp GitHub repository showing the course materials

What makes it different is the community. You’ll join an active Slack workspace where thousands of learners troubleshoot together, share progress, and connect for jobs and collaborations. The course encourages learning in public: sharing your work earns bonus points and builds your online presence.

The final three weeks focus on your capstone project, which is peer-reviewed, and you graduate with a polished GitHub portfolio that proves you can ship real data systems.

Why Learn Data Engineering?

Typically, data science teams consist of data analysts, data scientists, and data engineers. Among these roles, data engineers are the specialists who connect all the pieces of the data ecosystem within a company or institution.

But why would you want to become one? Here are some of the main reasons that make data engineering roles rewarding and valuable:

- You build the systems behind every data product and keep information flowing.

- You increase your earning potential by joining a smaller, high-value pool of professionals.

- You develop transferable skills that are valuable across industries and provide long-term career flexibility. These skills are also foundational for roles in machine learning and MLOps.

- You future-proof your career by building a mindset that will stay essential even as tools change and processes get automated.

Who Can Learn Data Engineering (and What Roles It Leads To)

The great thing about data engineering is that you don’t need to be a computer science graduate or have years of experience in data to start.

If you understand basic programming and have curiosity about how data systems work, you can learn data engineering.

If you tick most of the following boxes, data engineering might be a good fit for you:

- Enjoy solving technical, logical problems and building things that work reliably.

- Like Python, SQL, or scripting and want to use those skills for something impactful.

- Want to understand how data moves inside organizations — from raw sources to analysis and AI (including LLM applications).

- Prefer practical, system-level work over purely theoretical or statistical modeling.

- Appreciate clear structure and step-by-step learning (which is how DE Zoomcamp is built).

Course Prerequisites

As mentioned earlier, learning data engineering doesn’t require prior data engineering experience.

The course is designed to be accessible to beginners.

The only requirements are:

- Comfort with the command line (basic navigation and file operations)

- Basic SQL knowledge (SELECT, WHERE, JOIN statements)

- Python experience is helpful but not required

If you’re completely new to programming, consider spending a few weeks learning the basics before starting the course.

Course Curriculum: What You’ll Learn in the Data Engineering Zoomcamp

The course follows a logical progression from infrastructure setup to advanced data processing, culminating in an end-to-end project.

| Module | What You'll Learn | Tools & Technologies |
|---|---|---|
| 1. Infrastructure & Prerequisites | • Set up your development environment with Docker and PostgreSQL • Learn cloud basics with GCP • Master infrastructure-as-code using Terraform | Docker, PostgreSQL, GCP, Terraform |
| 2. Workflow Orchestration | • Master data pipeline orchestration with Mage.AI • Implement and manage data lakes using Google Cloud Storage • Build automated, reproducible workflows | Mage.AI, Google Cloud Storage |
| 3. Data Warehouse | • Deep dive into BigQuery for enterprise data warehousing • Learn optimization techniques like partitioning and clustering • Implement best practices for data storage and retrieval | BigQuery |
| 4. Analytics Engineering | • Transform raw data into analytics-ready models using dbt • Develop testing and documentation strategies • Create impactful visualizations with modern BI tools | dbt, BI tools |
| 5. Batch Processing | • Process large-scale data with Apache Spark • Master Spark SQL and DataFrame operations • Optimize batch processing workflows | Apache Spark, Spark SQL |
| 6. Stream Processing | • Build real-time data pipelines with Kafka • Develop streaming applications using KSQL and Faust • Implement stream processing patterns | Kafka, KSQL, Faust |
| Final Project | • Build an end-to-end data pipeline from ingestion to visualization • Apply all learned concepts in a real-world project • Create a portfolio-ready project with documentation | Cloud platforms (GCP/AWS/Azure), Terraform, Spark, Kafka, dbt, BigQuery |

Capstone Project

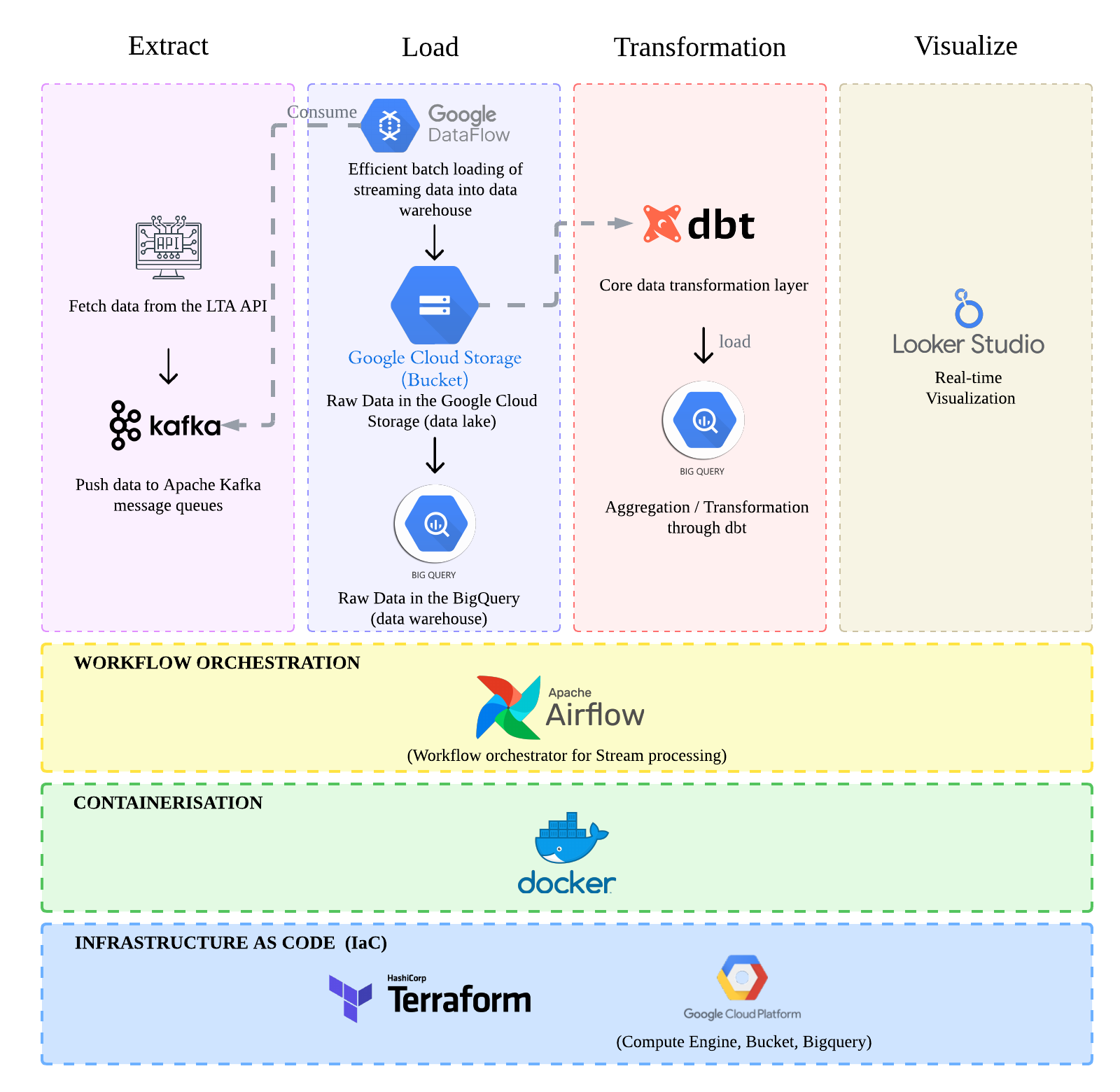

The final three weeks are dedicated to applying your knowledge in a real-world project that showcases everything you’ve learned throughout the course.

Data Engineering Zoomcamp capstone project of one of the course graduates, Maddie Zheng, showing project architecture: extract, load, transform, and visualize data. Source: Maddie's project

You’ll build an end-to-end data pipeline using a dataset of your choice, implementing both data lake and warehouse solutions with proper documentation.

Your project will be peer-reviewed by fellow participants, and you’ll peer-review at least three other projects.

| Project Requirements | Deliverables | Evaluation Criteria |
|---|---|---|
| • Select and process a dataset that interests you • Build end-to-end data pipelines (batch or streaming) • Implement both data lake and warehouse solutions • Create analytical dashboards | • Production-ready data pipeline • Documented data models • Interactive dashboard • Project presentation | • Peer review of at least three other projects • Technical implementation quality • Documentation completeness • Solution architecture design |

How the Data Engineering Zoomcamp Works

The course runs for 9 weeks in cohort format, providing structure and community support throughout your learning journey.

While you can access all course materials at your own pace without joining a cohort, participating in the structured program offers significant advantages: graded homework assignments, project submission and evaluation, peer interaction, and the opportunity to earn a certificate.

Below, we list the key features of the course and how they work.

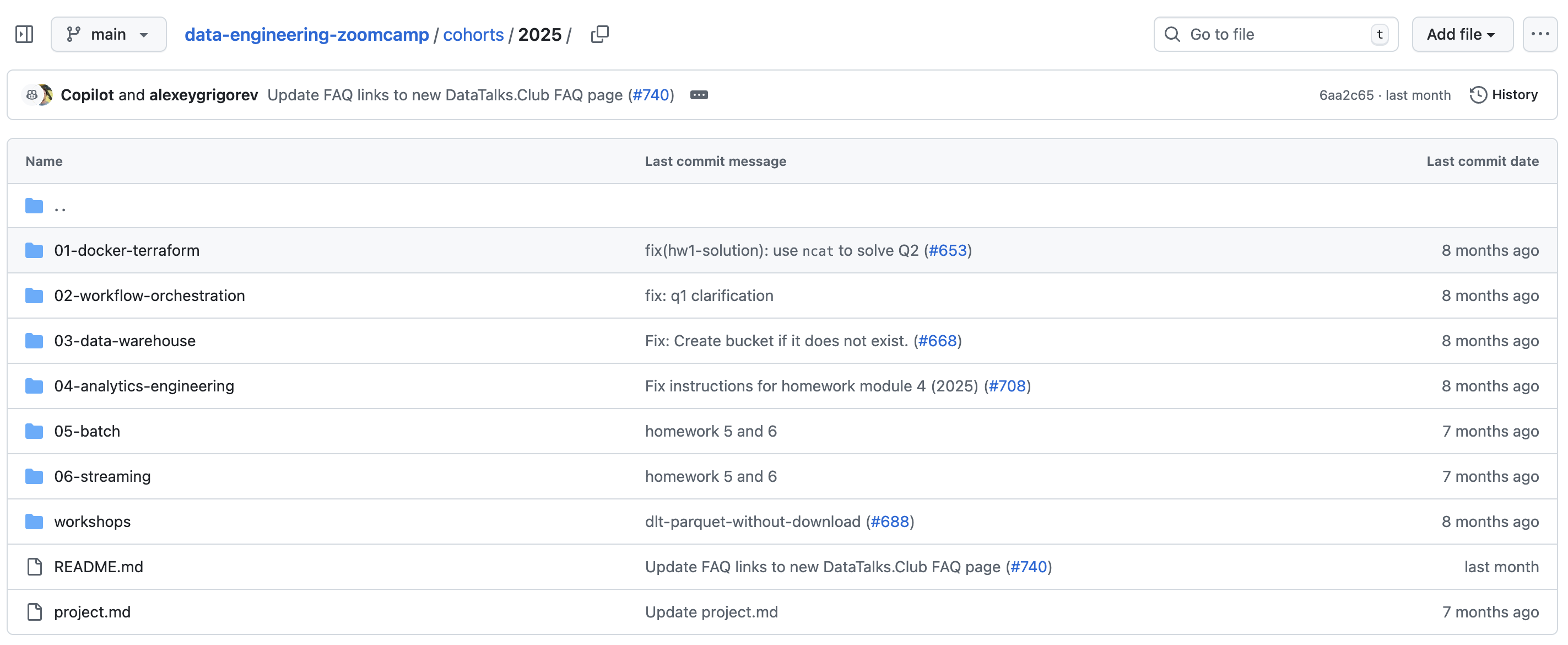

GitHub Repository: The Central Hub of the Course

Data Engineering Zoomcamp GitHub repository showing the course materials

All course materials live in the GitHub repository. The lectures are pre-recorded and available on YouTube, so you can watch at your own pace.

Data Engineering Zoomcamp YouTube playlist with pre-recorded lectures

Homework Assignments

To reinforce your learning, we release homework assignments for each week of the course. You can submit a homework assignment at the end of each week.

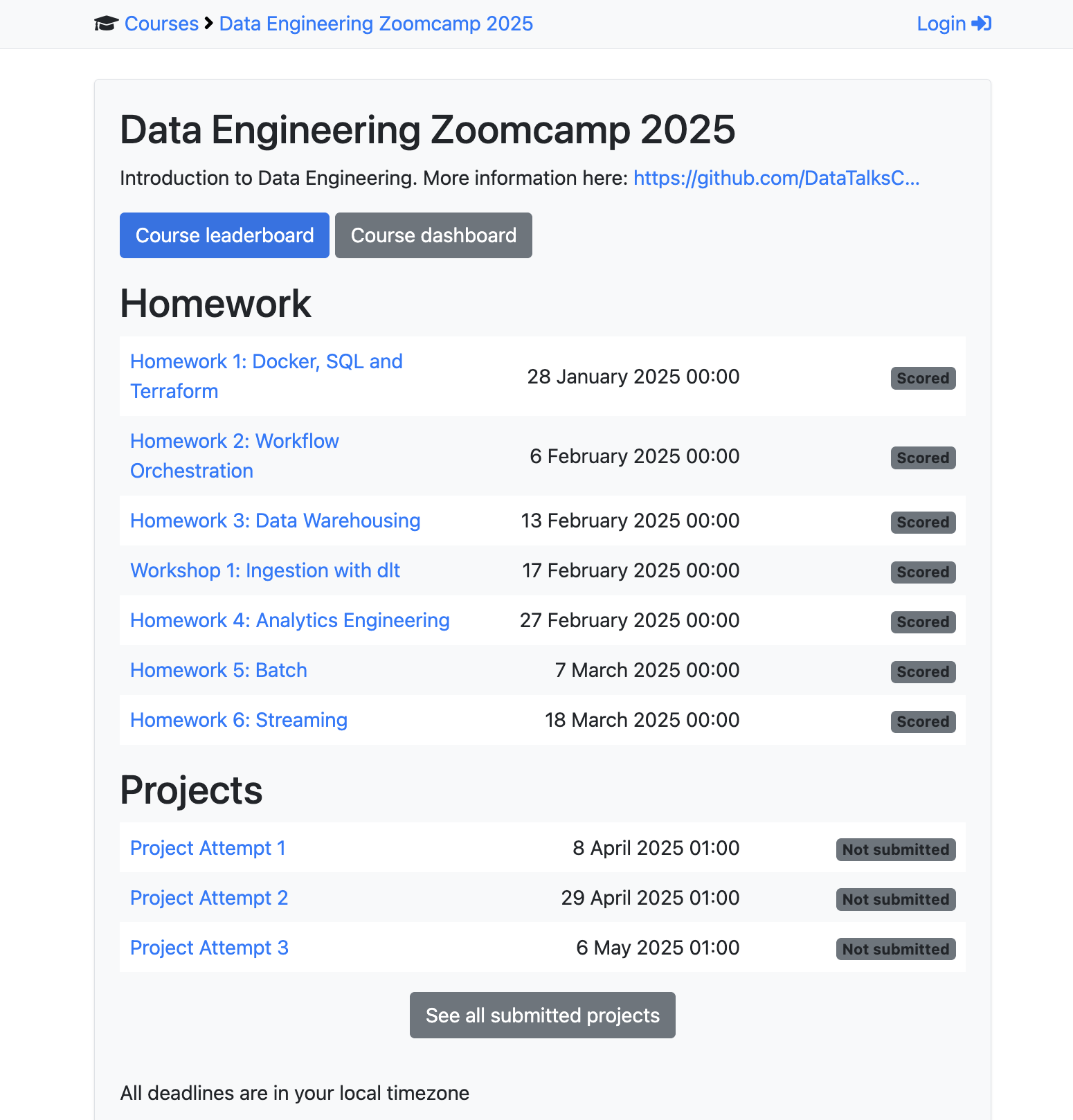

Data Engineering Zoomcamp schedule showing the course schedule and submission deadlines

Homework doesn’t count toward your certificate, but it helps you practice, and your scores appear on an optional, anonymous leaderboard.

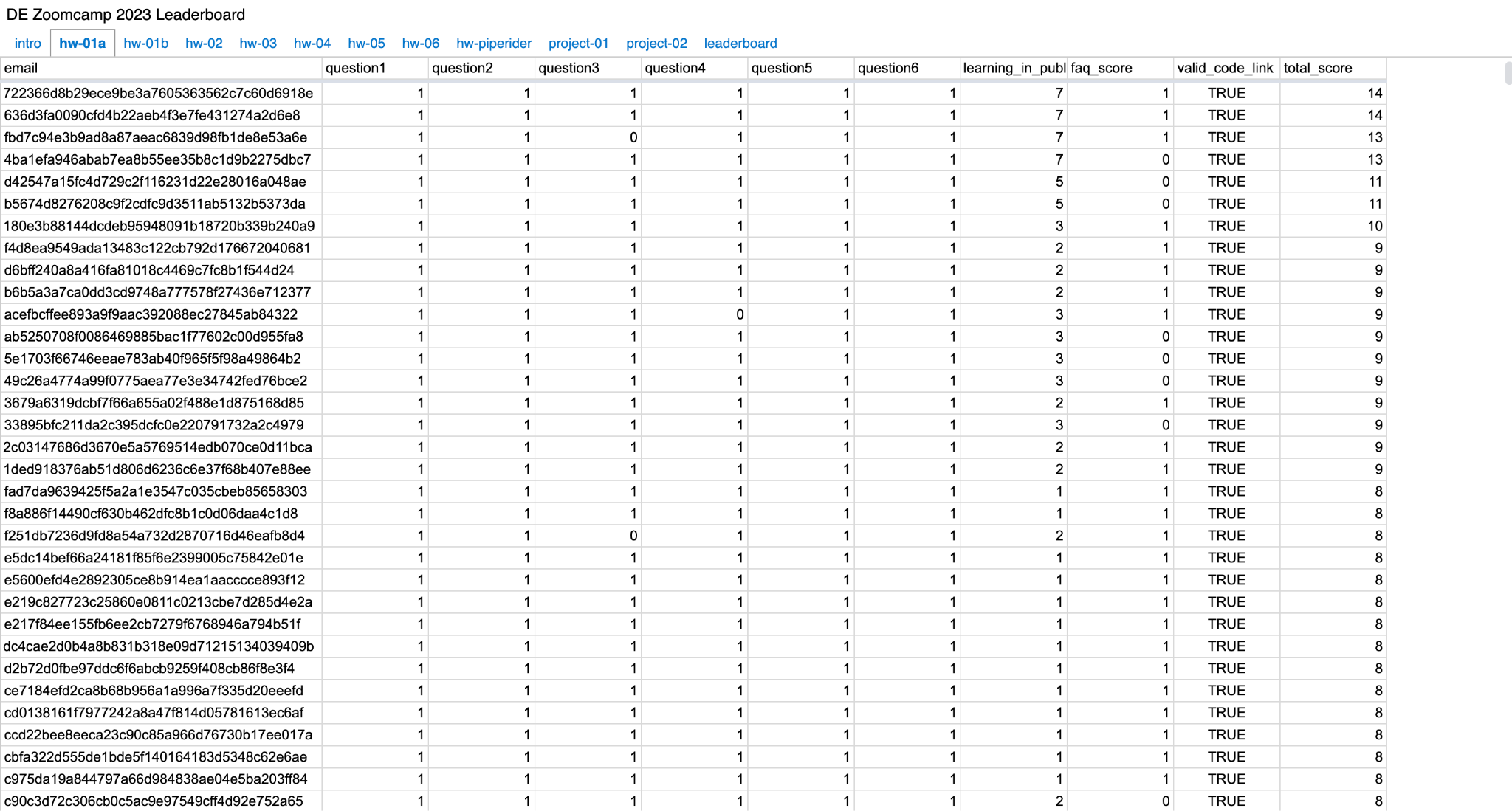

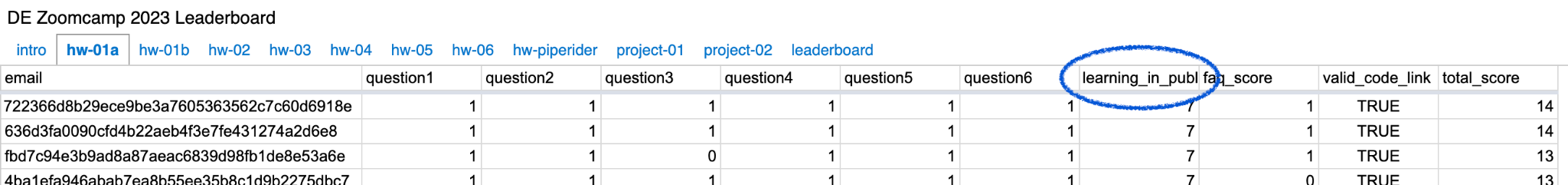

The leaderboard creates friendly competition among learners and motivates you to do your best.

Course leaderboard displaying student progress and achievements anonymously

You can earn bonus points by learning in public — sharing your work on blogs, YouTube, or social media. The next section explains how it works in practice.

Learning in Public: Build Your Online Presence

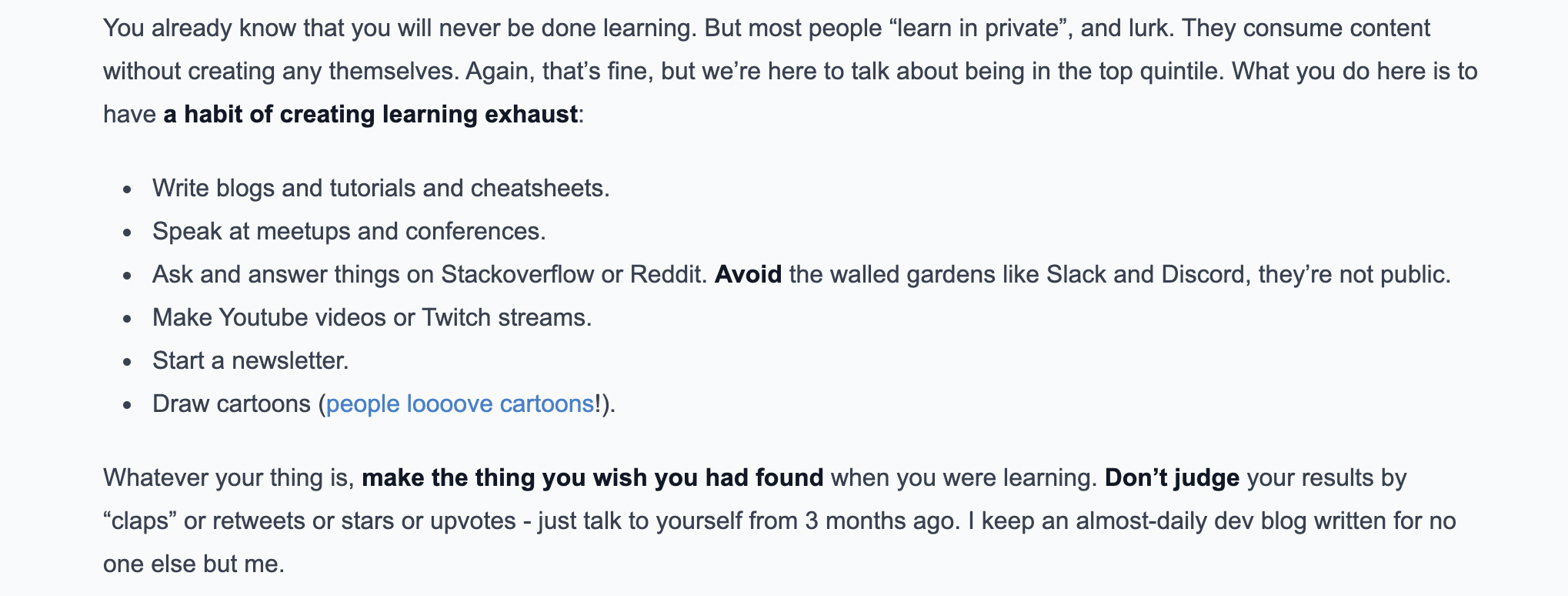

A unique feature is our “learning in public” approach, inspired by Shawn @swyx Wang’s article. We believe that everyone has something valuable to contribute, regardless of their expertise level.

An extract from Shawn @swyx Wang's article about learning in public

Throughout the course, we actively encourage and incentivize learning in public. By sharing your progress, insights, and projects online, you earn additional points for your homework and projects.

Previous cohort's leaderboard highlighting bonus points earned through learning in public activities

This not only demonstrates your knowledge but also builds a portfolio of valuable content. Regular posting helps social media algorithms surface your work to a broader audience, creating opportunities to connect with people and organizations you might not have encountered otherwise.

Many of our graduates have shared that their social media presence has helped them attract job offers and collaborations.

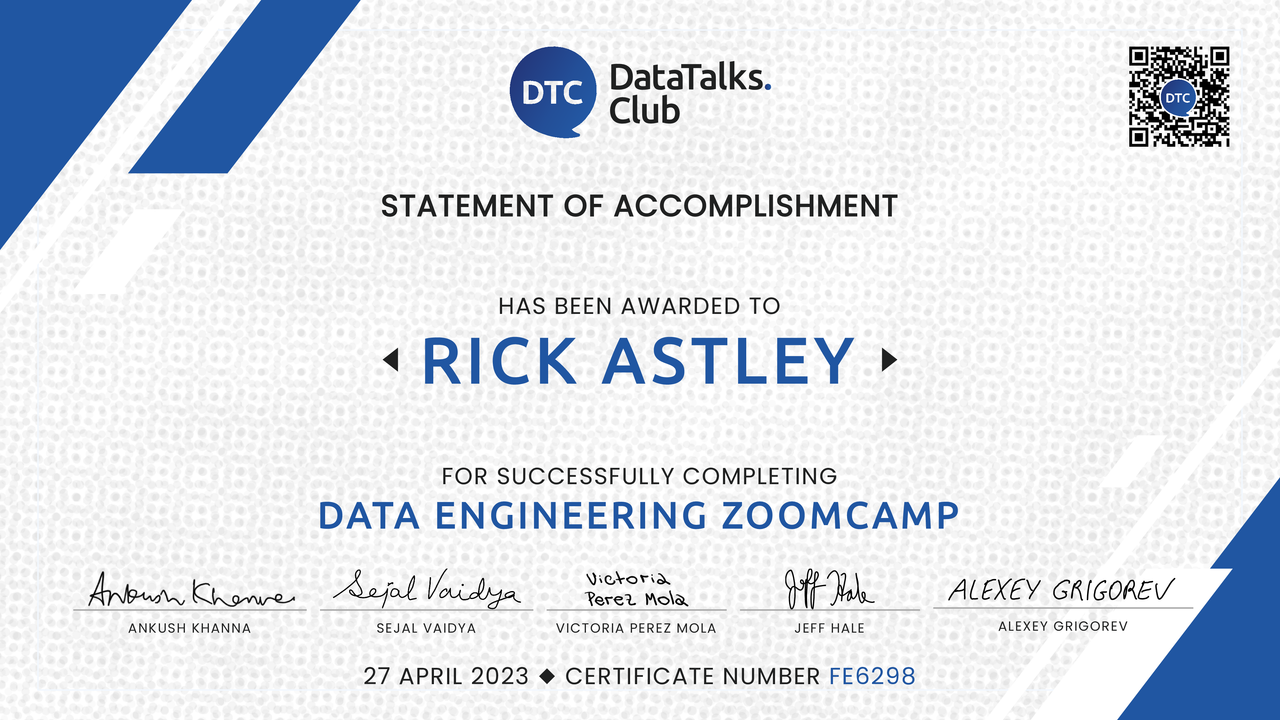

How to Get a Certificate

Data Engineering Zoomcamp certificate showing the certificate requirements

To earn your certificate, you must complete the course with a live cohort and fulfill three key requirements:

- Build a capstone project: Create an end-to-end data pipeline that demonstrates your mastery of the course concepts

- Submit on time: Meet the project submission deadline to qualify for certification

- Peer review: Evaluate and provide feedback on 3 fellow students’ projects during the peer review process

What is the DataTalks.Club Community? A Place to Connect and Learn with Other Data Professionals

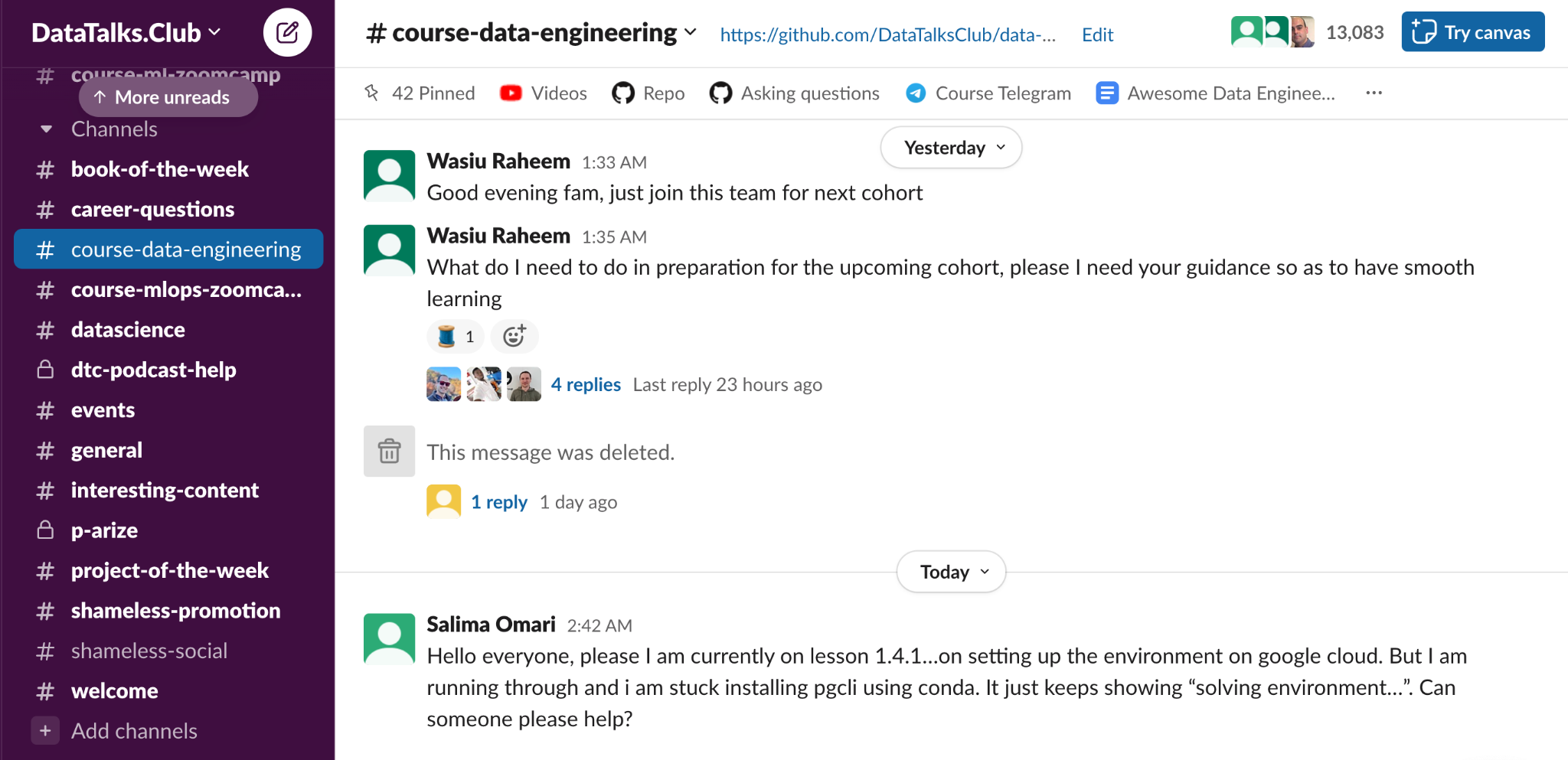

Active discussions and peer support in our dedicated Slack community channel

DataTalks.Club is a global community of 80,000+ data professionals who connect on Slack to share knowledge, ask career questions, and discuss everything from analytics and visualization to machine learning and data engineering. As one of the largest online communities dedicated to data, it’s where you’ll find data scientists, ML engineers, data analysts, and enthusiasts at all career stages.

When you join a cohort, the dedicated course channel becomes your home base. Here, you’ll troubleshoot problems with peers working through the same challenges and share your progress and insights.

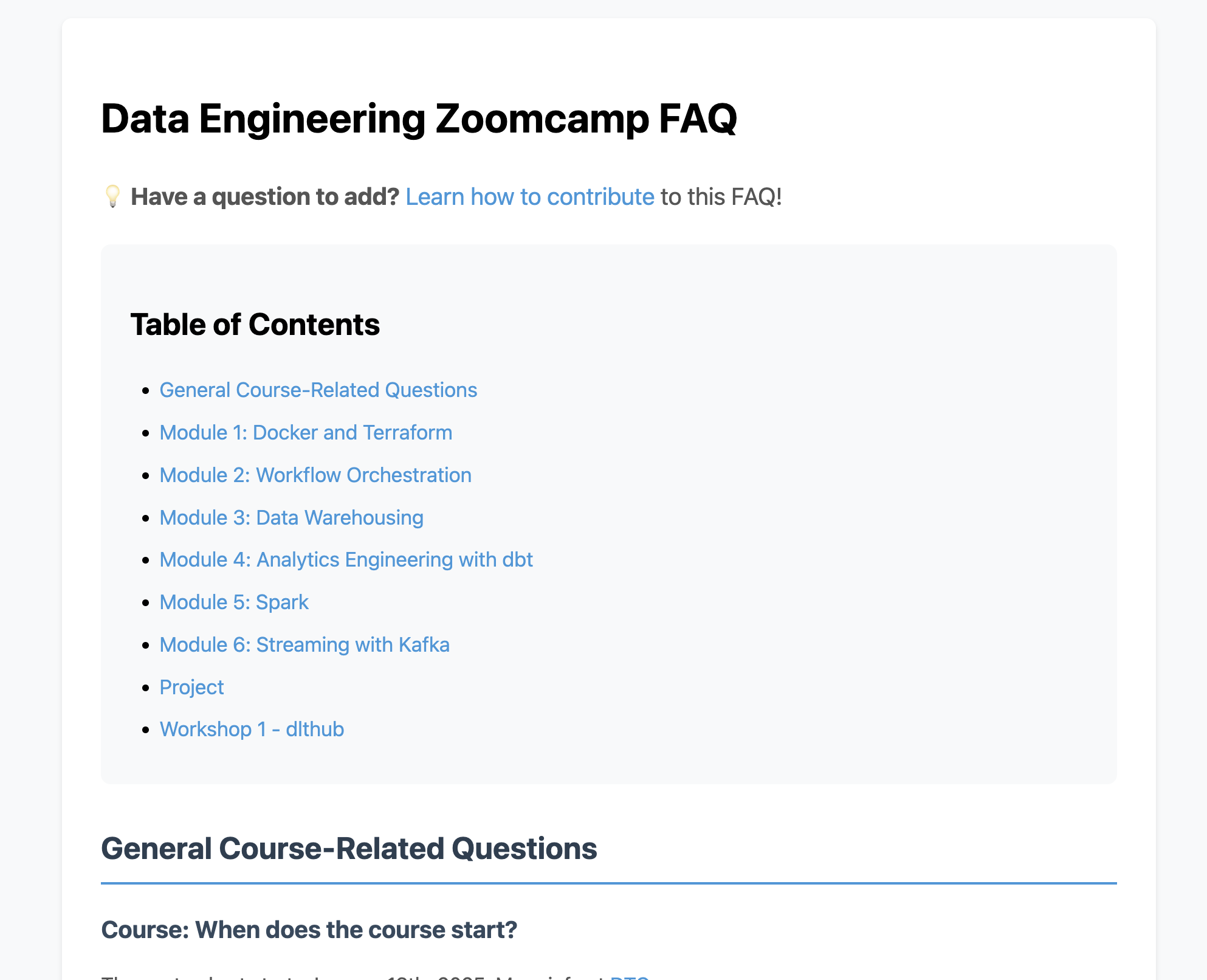

DataTalks.Club FAQ repository showing common questions and technical issues

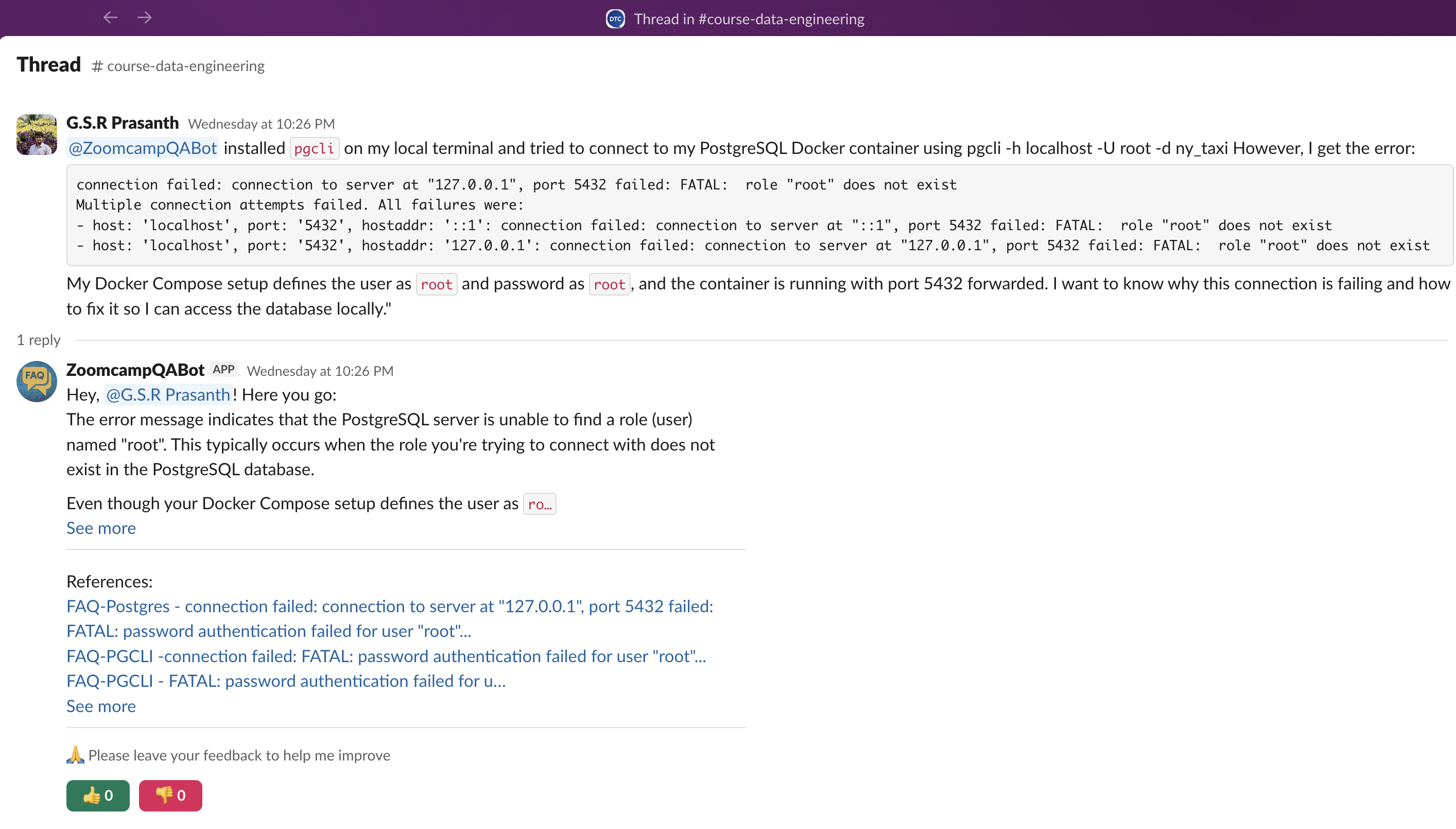

Beyond peer support, there are two ways to get help: our FAQ repository covers common questions and technical issues, and the @ZoomcampQABot in Slack provides quick answers when you need them.

DataTalks.Club Zoomcamp QABot in Slack providing quick answers when you need them

Zoomcamp vs. Bootcamp: What’s the Difference?

We often get asked what the difference is between the Data Engineering Zoomcamp and paid bootcamps.

Below, we list the key features of the Data Engineering Zoomcamp and how they compare to paid bootcamps.

| Feature | DE Zoomcamp (Cohort) | DE Zoomcamp (Self-paced) | Paid Bootcamps |
|---|---|---|---|
| Cost | Free | Free | $2,000–$10,000+ |
| Format | 9-week cohort with fixed schedule | Learn anytime at your own pace | Fixed schedule, instructor-led |
| Homework | Weekly scored assignments | Available but no scoring | Weekly with instructor feedback |
| Projects | Capstone project with peer review and scoring | Build on your own, no evaluation and scoring | Instructor-reviewed projects |
| Certificate | Yes, after completing project + peer reviews | No certificate | Certificate of completion |
| Community Support | Active Slack + optional live Q&A sessions | Slack community only | Instructor-led, 1:1 or group mentorship |
| Learning in Public | Encouraged with bonus points | Optional | Rarely emphasized |
| Timeline | 9 weeks (Jan–Mar 2026) | Flexible, self-paced | Typically 12–24 weeks |
| Best For | Career switchers or experienced data engineers wanting community and accountability | Self-motivated learners exploring data engineering | Those needing intensive structured mentorship and guidance |

How to Get Started with the Data Engineering Zoomcamp

To get started with the Zoomcamp, you can either join a live cohort or learn at your own pace.

All materials are freely available in the Data Engineering Zoomcamp GitHub repository. Each module has its own folder (e.g., 01-docker, 03-data-warehouse), and cohort-specific homework and deadlines are in the cohorts directory. Lectures are pre-recorded and available in our official Data Engineering Zoomcamp YouTube Playlist, so you can watch at your own pace.

Learn at Your Own Pace

The self-paced mode lets you start immediately and progress on your own schedule. You’ll get access to the course materials and the community and can complete the course at a pace that works for you.

All you need to do is go to the Data Engineering Zoomcamp GitHub repository, the central hub for navigating the course materials, and start learning.

You can also join the DataTalks.Club Slack community to get help and support from the community in the #course-data-engineering channel. Homework and solutions are available on the course platform, and you can build a project for your portfolio.

Remember, self-paced learning does not include homework submissions, project evaluations, or the ability to earn a certificate. To receive certification, you need to join an active cohort.

Join a Live Cohort

2026 Cohort: Starts January 2026. Register here: Fill in this form

When you join a live cohort, you’ll work through the same materials as self-paced learners, but with the added structure of a published schedule and the energy of hundreds of peers progressing alongside you.

The course runs once a year, starting around January. Each module typically spans one week: you watch the lectures, complete hands-on exercises, and submit a homework assignment. Your submissions are scored and appear on an anonymous leaderboard.

After completing all six modules, the capstone project phase begins. You’ll build your own end-to-end pipeline, submit it through our form, and peer-review at least three other students’ projects while yours gets reviewed by your peers. This reciprocal process gives you valuable feedback on your work and exposes you to different approaches and solutions you might not have considered.

Even if you join after the official start date, you can still follow along — but note that some homework forms may already be closed. All active deadlines are listed on the course platform.

To earn a certificate, you’ll need enough time to complete one final project and the required peer reviews. Details are in the Capstone Project and How to Get a Certificate sections above.

Ready to join DE Zoomcamp? Here’s how it works:

- Register for the course; you’ll be automatically accepted into the next cohort

- Join the DataTalks.Club Slack community and the #course-data-engineering channel for updates, questions, and peer support

- (Optional) Get a head start by exploring the GitHub repository and watching lectures before the cohort officially begins

- When the cohort starts, you’ll receive an email with the full schedule and submission deadlines

- Follow the weekly rhythm: watch lectures, complete exercises, submit homework

- During the final three weeks, build and submit your capstone project, then peer-review three other projects

- Receive your certificate once your project passes peer review

The entire journey takes 9 weeks from start to certificate, and you’ll be part of a global cohort tackling the same challenges at the same time.

Self-Paced vs. Live Cohort

We summarized the key differences between the two joining options in this table:

| Feature | Self-Paced | Live Cohort |
|---|---|---|
| Timing | Learn at your own pace, start anytime | Fixed 9-week schedule (January–March each year) |
| Course Materials | Full access to GitHub repository and YouTube lectures | Full access to GitHub repository and YouTube lectures |
| Community | Access to Slack community (#course-data-engineering) | Access to Slack community (#course-data-engineering) |
| Homework | Available but not scored | Scored automatically, appears on leaderboard |
| Projects | Build on your own, no evaluation | Submit 1 final project (capstone) with peer review |
| Certificate | Not available | Available after completing project and peer reviews |
| Structure | Flexible, no deadlines | Weekly rhythm with deadlines and peer accountability |

Testimonials

We’ve collected some testimonials from our students who have completed the Data Engineering Zoomcamp.

Thank you for what you do! The Data Engineering Zoomcamp gave me skills that helped me land my first tech job.

— Tim Claytor (Source)

Three months might seem like a long time, but the growth and learning during this period are truly remarkable. It was a great experience with a lot of learning, connecting with like-minded people from all around the world, and having fun. I must admit, this was really hard. But the feeling of accomplishment and learning made it all worthwhile. And I would do it again!

— Nevenka Lukic (Source)

One of the significant things I inferred from the Zoomcamp is to prioritize fundamentals and principles over ever-evolving tools and tech stacks. Hugely grateful to Alexey Grigorev for putting together this incredible course and offering it for free.

Such a fun deep dive into data engineering, cloud automation, and orchestration. I learned so much along the way. Big shoutout to Alexey Grigorev and the DataTalksClub team for the opportunity and guidance throughout the 3 months of the free course.

— Assitan NIARE (Source)

If you’re serious about breaking into data engineering, start here. The repo’s structure, community, and hands-on focus make it unparalleled.

— Wady Osama (Source)

Frequently Asked Questions

Is the Data Engineering Zoomcamp free?

Yes. The Data Engineering Zoomcamp is a free, project-based data engineering course that covers pipelines, data warehouses, batch and streaming processing, and orchestration, with no tuition fees.

What’s the difference between a zoomcamp and a bootcamp?

Bootcamps usually charge tuition, offer full-time schedules, and sometimes include dedicated career services. Zoomcamps are part-time, community-run, open-source, and free, with an emphasis on self-directed learning and peer support.

Do I get a certificate?

During an active cohort, you can earn a certificate by completing the final project, reviewing peers’ projects, and meeting all deadlines. Self-paced learners don’t receive certificates but still build the same projects.

What do I need to do to earn a certificate?

To earn a certificate, you need to complete one capstone project by building an end-to-end data pipeline. After submitting your project, you must also review at least three other students’ projects by the deadline and provide constructive feedback.

When does the next cohort start?

The next cohort of DE Zoomcamp starts around January each year. Register before the course starts: https://airtable.com/appzbS8Pkg9PL254a/shr6oVXeQvSI5HuWD

Can I still join the 2025 cohort?

No, the 2025 cohort is closed. You can join the 2026 cohort when it starts in January 2026. Register here before the course starts.

Who runs DE Zoomcamp?

DE Zoomcamp is run by DataTalks.Club, a global online community of data professionals and learners. While the initial idea and most of the content were created by Alexey Grigorev, members of the DataTalks.Club community contribute as instructors and maintainers.

DataTalks.Club is often referred to as “the DataTalks Club”, “data talks club”, or “datatalks club”.

What are the prerequisites?

You should be comfortable with basic coding (Python or similar), the command line, and basic SQL. No prior data engineering experience is required.

How much time do I need each week?

Expect to spend 5–15 hours per week, depending on your background. This includes watching videos, completing homework, and working on the capstone project. More time may be needed during the final project weeks.

Can I take the course after the cohort ends?

Yes! All course materials, videos, and recordings remain available after the cohort ends, and you can learn at your own pace. You’ll have access to the Slack community for support. However, self-paced learning does not include homework submissions, project evaluations, or the ability to earn a certificate. To receive a certificate, you need to join an active cohort.

Where can I get help?

You have multiple support channels available. Join the Slack community, where you can ask questions and get help from instructors and fellow students. We also have an FAQ repository with answers to common questions and the @ZoomcampQABot in Slack for quick help.

Where is the course GitHub repository?

The GitHub repository is https://github.com/DataTalksClub/data-engineering-zoomcamp.

Where can I find the full syllabus?

You can find the full syllabus in the README of the Data Engineering Zoomcamp GitHub repository.

Yes, people use these names interchangeably. Throughout this page we’ll use “Data Engineering Zoomcamp” as the canonical name.

Will the course help me get a data engineering job?

The course focuses on building real-world pipelines and infrastructure with tools like Docker, BigQuery, Spark, and Kafka. Many learners have used the final project and GitHub portfolio as part of their data engineering job search.

How do I join the Slack community?

You can request an invite via the Slack signup form. After confirming your email, you’ll receive an invitation link to join the workspace.

The main community hub is Slack. Data Engineering Zoomcamp also has a Telegram announcement channel, but all core discussion happens in Slack.