Teams turn to large language models (LLMs) because they want applications that can answer questions or search information more intelligently. But once they start building, they discover how unstable these systems can be: answers change from one run to the next, search quality depends heavily on how data is indexed, and a small prompt adjustment can break a feature that worked yesterday. Without a clear way to retrieve the right context, measure output quality, or monitor how the system behaves in the real world, it’s difficult to trust the results.

LLM Zoomcamp course cover

LLM Zoomcamp is designed to close this gap. This free course covers the fundamentals of LLMs and RAG, how vector and hybrid search work in practice, how to evaluate retrieval and model responses, and how to monitor user behavior once a system is live. The focus is on practical, grounded methods that make LLM-powered applications more predictable, stable, and easier to maintain.

Table of Contents

- Why Learning to Build LLM Applications is Important

- Who the Course Is For

- Course Curriculum

- How LLM Zoomcamp Works

- What is DataTalks.Club Community?

- How to Join LLM Zoomcamp

- Testimonials

- Frequently Asked Questions

Why Learning to Build LLM Applications Is Important

Large language models are rapidly expanding across industries, yet transforming experimental prototypes into reliable, production-ready systems remains a significant challenge.

According to Red Hat’s research, AI has become a core strategic priority for 72% of organizations in Europe, the Middle East, and Africa, with plans to increase AI investments by an average of 32% by 2026. However, only 7% of organizations currently report “driving customer value” at scale from their AI investments.

This gap between ambitious AI strategies and real outcomes is why expertise in building, evaluating, and maintaining LLM-powered applications is increasingly sought after in today’s market.

Who the Course Is For

LLM Zoomcamp is designed for anyone who wants to build practical, reliable LLM-powered applications. If you can write Python, use the command line, and work with Docker, you have everything you need to follow the course.

It’s a good fit for:

- Software engineers who want to add LLMs, RAG, and modern search capabilities to real products.

- Data engineers interested in how vector search, hybrid search, and retrieval pipelines fit into production systems.

- ML practitioners who want a structured way to evaluate and monitor LLM-based applications.

- Developers new to LLMs who already know Python and want a clear, practical introduction to building end-to-end AI applications.

- Technical product managers or tech leads who need a working understanding of how LLM systems behave in real usage.

- Anyone maintaining an existing LLM feature and struggling with drift, inconsistent answers, or unreliable retrieval.

You don’t need prior experience with AI or ML. The course focuses on the engineering side of modern LLM applications and guides you through the concepts step by step.

Course Curriculum

The course follows a practical, production-focused approach to building LLM applications. Each module adds a new layer—from RAG foundations to vector search, evaluation, monitoring, and a complete end-to-end project.

| Module | Topic | Focus | Tools You'll Use |
|---|---|---|---|
| 1 | Introduction to LLMs and RAG | Build your first RAG pipeline with LLM fundamentals and text search | OpenAI API, Elasticsearch |
| 2 | Vector Search | Create embeddings, index documents, and retrieve with semantic search | Qdrant, dlt |
| 2A | Agents (Bonus) | Add agentic behavior and function calling to RAG pipelines | OpenAI Function Calling |
| 3 | Evaluation | Measure retrieval quality and answer accuracy with offline and online evaluation | LLM-as-a-Judge, evaluation frameworks |
| 4 | Monitoring | Track user feedback, chat logs, and system performance in production | Grafana, monitoring dashboards |
| 5 | Best Practices | Optimize retrieval with hybrid search, reranking, and orchestration patterns | LangChain, hybrid search tools |
| 6 | End-to-End Project | Build a complete RAG application combining all components | All tools from previous modules |
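
To make the semantic search idea from Module 2 concrete, here is a minimal sketch of vector retrieval with cosine similarity. It is an illustration under stated assumptions, not the course's implementation: the three-dimensional vectors are made up, the names `cosine_similarity`, `vector_search`, and `toy_index` are hypothetical, and a real system would get embeddings from a model and store them in a vector database such as Qdrant.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as matching nothing
    return dot / (norm_a * norm_b)


def vector_search(query_vector, index, top_k=2):
    """Return the top_k (doc_id, score) pairs, best match first."""
    scored = [
        (doc_id, cosine_similarity(query_vector, vector))
        for doc_id, vector in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy "embeddings", invented for illustration only.
toy_index = {
    "squat": [0.9, 0.1, 0.0],
    "lunge": [0.8, 0.2, 0.1],
    "plank": [0.1, 0.9, 0.2],
}

results = vector_search([1.0, 0.0, 0.0], toy_index)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

The brute-force loop compares the query against every stored vector, which is fine for a few documents; dedicated vector databases exist because this does not scale to large collections.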

What You’ll Build: Course Project

For the final project, you’ll create a complete end-to-end RAG application. The goal is to show that you can move from raw data to a working, searchable AI system that users can interact with, and that you can evaluate and monitor.

Example project: Fitness Assistant, a conversational AI that helps users choose exercises and find alternatives, making fitness more accessible for beginners who find gyms intimidating or lack access to personal trainers.

You’ll build:

- A searchable knowledge base: Choose a dataset and ingest it, clean it, and store it in a format suitable for retrieval.

- A retrieval pipeline that works in practice: Implement the core RAG flow—retrieve relevant context from your knowledge base, assemble prompts, send them to an LLM, and return grounded answers.

- An evaluation process: Measure how well your system retrieves and answers information. This can include search metrics, LLM-as-a-judge, or your own evaluation framework.

- A user-facing interface: Build a simple UI or API so others can try your application. This can be Streamlit, FastAPI, a small web page, or anything that makes interaction straightforward.

- Monitoring and feedback loops: Once your app works, you’ll add a basic monitoring layer—tracking queries, feedback, or performance so you can see how it behaves over time.
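
The core RAG flow from the list above (retrieve context, assemble a prompt, send it to an LLM, return the answer) can be sketched in a few functions. This is a simplified illustration, not the course's code: the documents are invented, retrieval is a toy word-overlap ranking standing in for a real search engine, and `echo_llm` is a hypothetical placeholder where a real application would call an LLM API.

```python
import re

# Invented sample knowledge base.
DOCUMENTS = [
    {"id": "d1", "text": "Push-ups train the chest, shoulders, and triceps."},
    {"id": "d2", "text": "Squats train the quadriceps and glutes."},
    {"id": "d3", "text": "Planks strengthen the core."},
]


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by shared words with the question (toy stand-in for search)."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(doc["text"])), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question, context_docs):
    """Assemble a prompt that asks the model to stay within the retrieved context."""
    context = "\n".join(f"- {doc['text']}" for doc in context_docs)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def rag(question, llm, documents=DOCUMENTS):
    """Retrieve context, build the prompt, and pass it to the given LLM callable."""
    context_docs = retrieve(question, documents)
    prompt = build_prompt(question, context_docs)
    return llm(prompt)


def echo_llm(prompt):
    """Hypothetical placeholder: a real app would call an LLM API here."""
    return f"[LLM would answer based on a {len(prompt)}-character prompt]"


answer = rag("Which exercise trains the chest?", echo_llm)
print(answer)
```

Passing the LLM in as a plain callable keeps the pipeline testable without network access and makes it easy to swap providers later.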

By the end, you’ll have a working RAG system that demonstrates your ability to design, build, evaluate, and deploy an LLM-powered application.
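
For the evaluation step, two widely used retrieval metrics are hit rate and mean reciprocal rank (MRR). The sketch below assumes each test query has exactly one known relevant document; the function names and the sample data are hypothetical and only show how the numbers are computed.

```python
def hit_rate(ranked_results, relevant_ids):
    """Fraction of queries whose relevant document appears anywhere in the results."""
    hits = sum(
        1
        for results, relevant in zip(ranked_results, relevant_ids)
        if relevant in results
    )
    return hits / len(relevant_ids)


def mean_reciprocal_rank(ranked_results, relevant_ids):
    """Average of 1/rank of the relevant document (0 when it is missing)."""
    total = 0.0
    for results, relevant in zip(ranked_results, relevant_ids):
        if relevant in results:
            total += 1.0 / (results.index(relevant) + 1)
    return total / len(relevant_ids)


# Invented example: search results for three queries and the expected document for each.
ranked_results = [
    ["d1", "d2", "d3"],  # relevant document at rank 1
    ["d4", "d2", "d5"],  # relevant document at rank 2
    ["d7", "d8", "d9"],  # relevant document not retrieved
]
relevant_ids = ["d1", "d2", "d6"]

print(f"hit rate: {hit_rate(ranked_results, relevant_ids):.3f}")
print(f"MRR: {mean_reciprocal_rank(ranked_results, relevant_ids):.3f}")
```

Hit rate only checks whether the right document was found, while MRR also rewards ranking it near the top, so the two are usually reported together.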

How LLM Zoomcamp Works

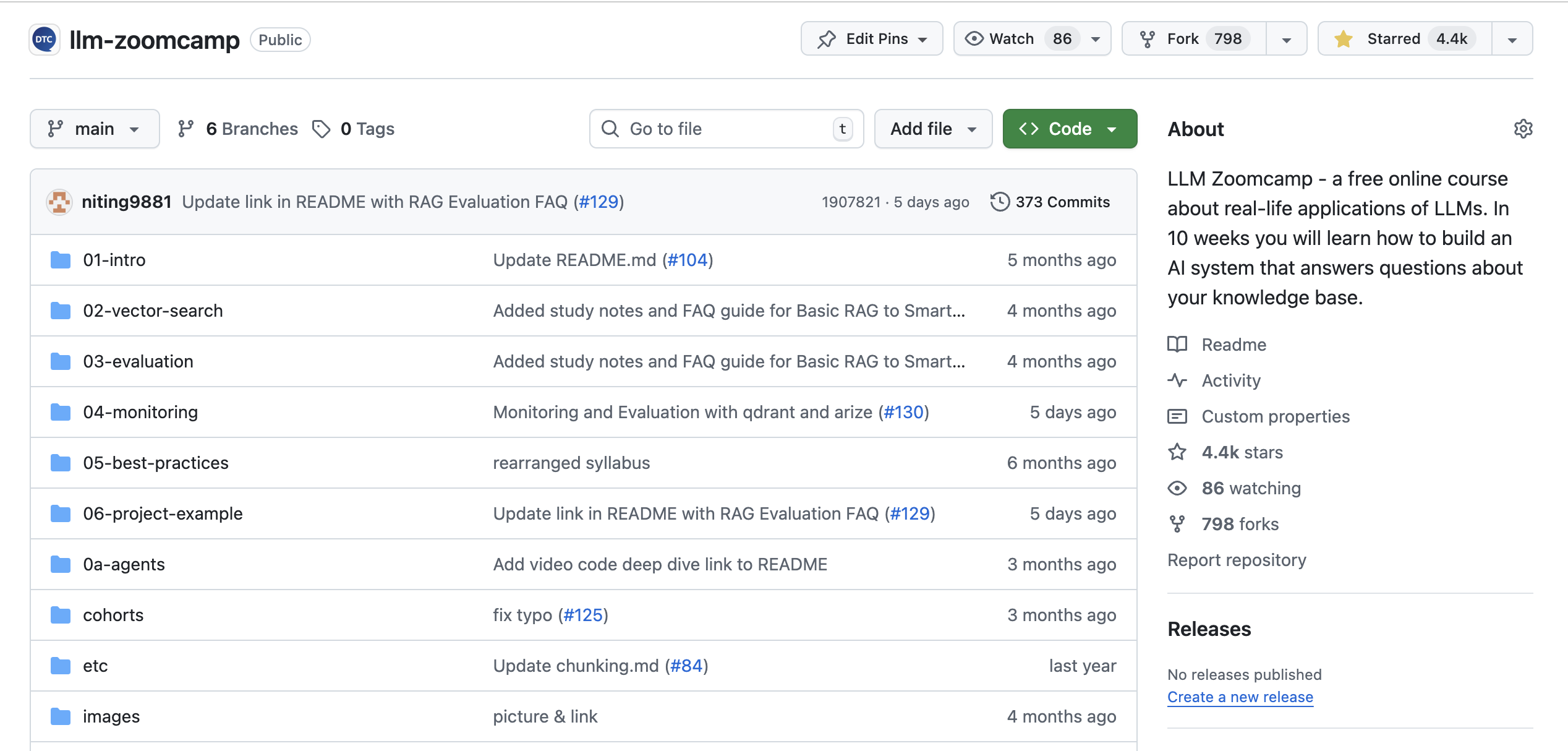

GitHub Repository: Your Source of Truth

All lessons, homework, and cohort updates live in the LLM Zoomcamp GitHub repository. The structure mirrors our other Zoomcamps, so you can quickly find weekly folders, homework forms, and project guidelines.

LLM Zoomcamp GitHub repository showing course materials and structure

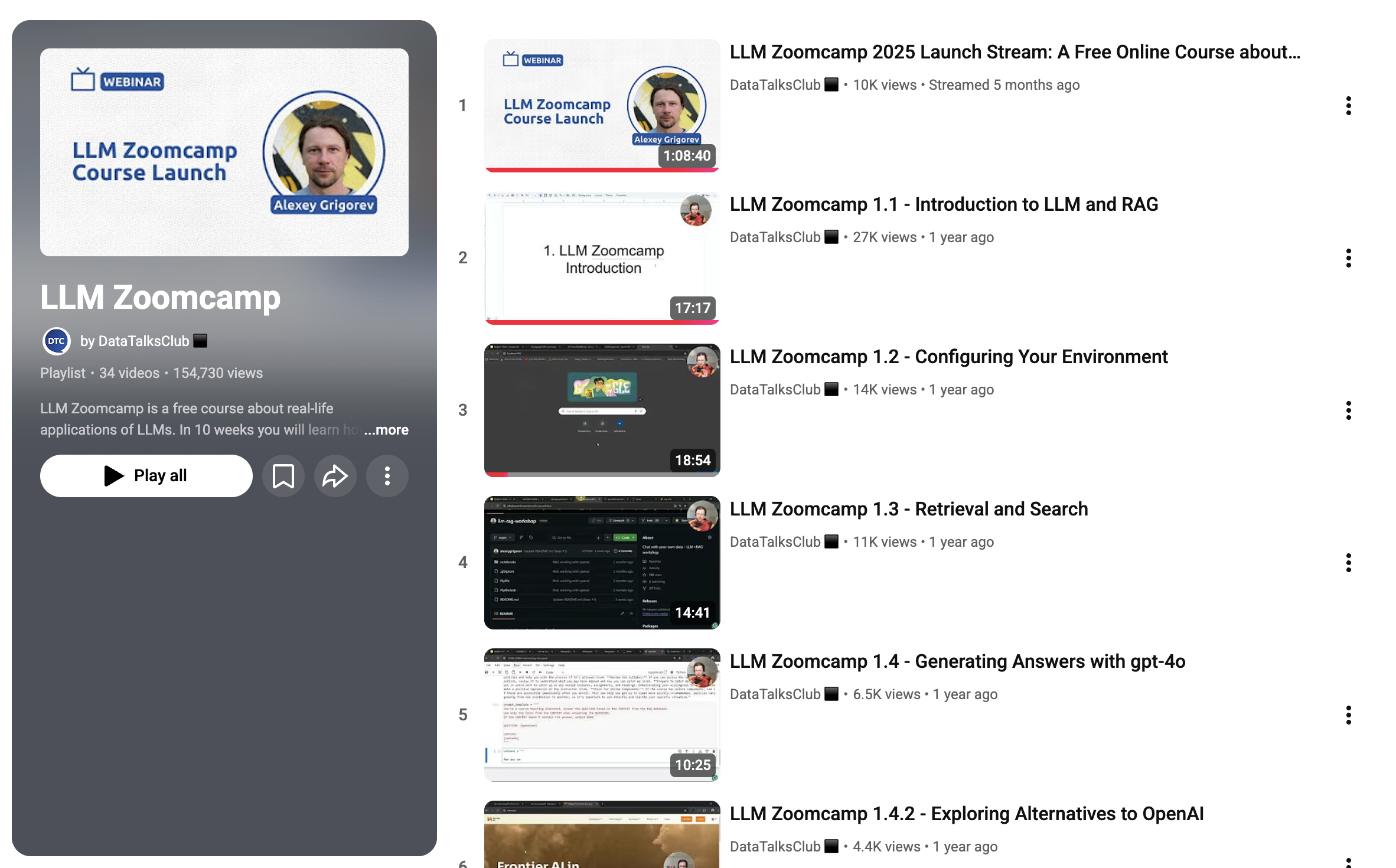

Video Lectures

Lectures are pre-recorded and available in this YouTube playlist, so you can follow the live cadence or binge-watch at your own pace.

LLM Zoomcamp YouTube playlist with pre-recorded lectures

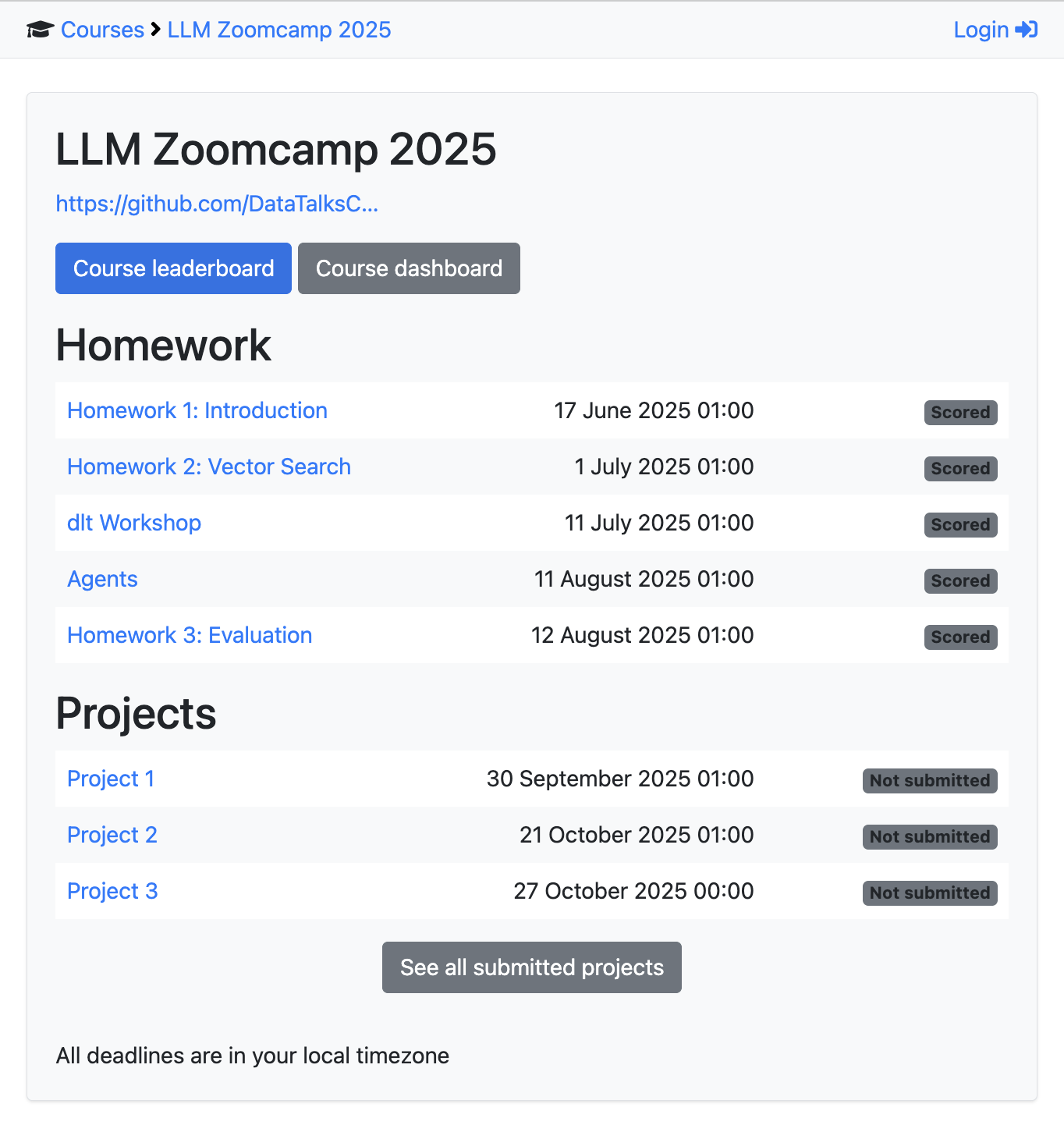

Homework Assignments

Examples of homework assignments from the 2024 cohort of LLM Zoomcamp covering RAG, vector search, and LLM engineering

We release homework assignments for each week of the course. Your scores are added to an anonymous leaderboard, creating friendly competition among course members and motivating you to do your best.

Course leaderboard displaying student progress and homework scores anonymously

Learning in Public

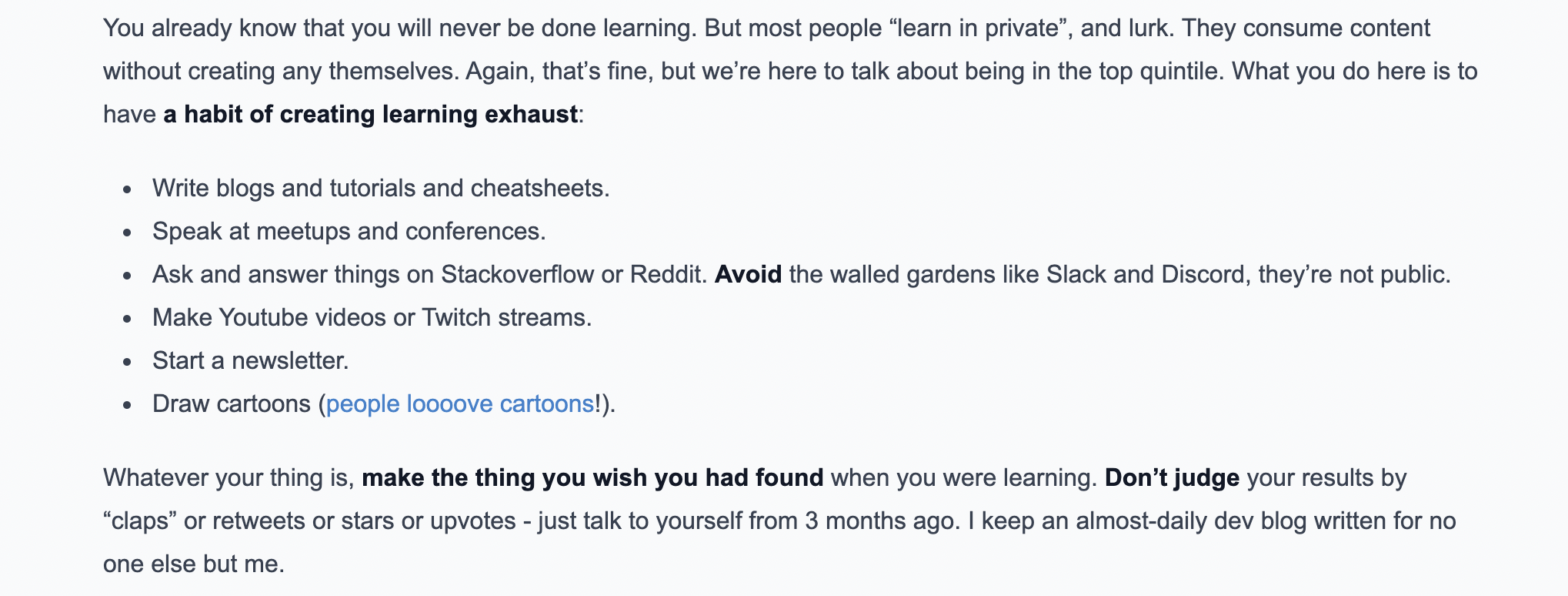

A unique feature is our “learning in public” approach, inspired by Shawn @swyx Wang’s article. We believe everyone has something valuable to contribute, regardless of their expertise level.

Extract from Shawn @swyx Wang's article explaining the benefits of learning in public

Throughout the course, we actively encourage and incentivize learning in public. By sharing your progress, insights, and projects online, you earn additional points for your homework and projects.

Sharing your work online also makes it more visible, helping you reach a broader audience and connect with people and organizations you may not have encountered otherwise.

How to Get a Certificate

LLM Zoomcamp certificate for completing the course

To receive a certificate, you’ll need to complete the final project and peer review 3 other students’ projects:

- Complete the final project: Build a real-world LLM application (RAG project) that demonstrates your mastery of all course concepts.

- Peer review: Evaluate and provide feedback on 3 fellow students’ projects during the peer review process.

- Submit on time: Meet the project submission deadlines to qualify for certification.

What is DataTalks.Club Community?

DataTalks.Club has a supportive community of like-minded individuals in our Slack. It is the perfect place to enhance your skills, deepen your knowledge, and connect with peers who share your passion. These connections can lead to lasting friendships, potential collaborations in future projects, and exciting career prospects.

Active discussions and support in the LLM Zoomcamp Slack community channel

How to Join LLM Zoomcamp

You can join LLM Zoomcamp either by following a live cohort or learning at your own pace.

All materials are freely available in the LLM Zoomcamp GitHub repository. Each module has its own folder, and cohort-specific homework and deadlines are in the cohorts directory. Lectures are pre-recorded and available in this YouTube playlist.

Option 1: Self-Paced Learning

You can start anytime and move at your own speed. You get full access to materials and community support on Slack.

You can complete homework assignments on the course platform and build a project for your portfolio, even outside a live cohort.

Under self-paced learning, homework isn’t scored, your project isn’t peer-reviewed, and you can’t earn a certificate.

Option 2: Live Cohort

LLM Zoomcamp runs once per year and typically starts in June.

When you join a live cohort, you get:

- Updated homework

- Automatic homework scoring and a leaderboard

- Project peer review

- Eligibility for a certificate after meeting all requirements

Even if you join after the official start date, you can still follow along. Note that some homework forms may already be closed. All active deadlines are listed on the course platform.

Testimonials

This course gave me hands-on experience in building LLM-powered applications, including prompt engineering, retrieval-augmented generation (RAG), pipeline orchestration, and vector search optimization.

— Alexander Daniel Rios, LLM Zoomcamp course graduate (Source)

Not gonna lie – this course took longer than planned. By the end, I was running on fumes, forcing myself to push through the final modules. But I made it.

What I loved about this course: hands-on experience building real AI systems (not just theory!), deep dives into RAG, vector databases, evaluation, and monitoring, the wealth of knowledge beyond the workshops – production-ready practices that matter in enterprise environments.

— Vasiliy Chernykh, LLM Zoomcamp course graduate (Source)

Frequently Asked Questions

The LLM Zoomcamp is a free, community-driven program by DataTalks.Club that teaches you how to build practical applications with large language models through hands-on project work.

This 10-week course covers a comprehensive curriculum, with all materials open and available anytime on GitHub. You’ll work with an industry-standard stack including OpenAI API, Hugging Face, Elasticsearch, Ollama, Streamlit, Grafana, LangChain, and vector databases, and you can earn a certificate by completing the course with a live cohort.

“Zoomcamp” is a term that originated from Alexey Grigorev, the founder of DataTalks.Club. It started with his book “ML Bookcamp.” When Alexey decided to create a video course based on the book, he called it “Machine Learning Zoomcamp” - a free, cohort-based course in video format. The name “zoomcamp” is a play on “bookcamp,” referring to the video format of the course. The Zoomcamp series has since expanded to include other free courses like the Data Engineering Zoomcamp, MLOps Zoomcamp, and LLM Zoomcamp, all following the same community-driven, open-source philosophy.

Yes, the LLM Zoomcamp is completely free. There are no hidden costs, no tuition fees, and no paid tiers. All course materials, videos, homework assignments, and access to the community are provided at no cost. Unlike traditional bootcamps that charge $10,000-$20,000+, this course is entirely community-driven and open source.

The LLM Zoomcamp differs from traditional LLM bootcamps in several key ways:

- Cost: Completely free vs. $10,000-$20,000+ for bootcamps

- Community: Community-driven and open source with all materials available forever on GitHub vs. content locked behind paywalls

- Flexibility: Can continue at your own pace after the cohort ends vs. rigid schedules and limited access periods

To earn a certificate, you need to complete one capstone project that demonstrates your mastery of building an end-to-end RAG application. After submitting your project, you must also review at least 3 other students’ projects by the deadline and provide constructive feedback.

The LLM Zoomcamp runs once per year, and the next cohort typically starts in June. Register here: https://airtable.com/appPPxkgYLH06Mvbw/shr7WtxHEPXxaui0Q before the course starts.

The LLM Zoomcamp is run by DataTalks.Club, a global online community of data professionals and learners. While the initial idea and most of the content were created by Alexey Grigorev, members of the DataTalks.Club community contribute as instructors and maintainers.

DataTalks.Club is often referred to as “the DataTalks Club”, “data talks club”, or “datatalks club”.

To get the most out of this course, you should be comfortable with programming in Python, the command line, and Docker. No previous exposure to AI or ML is required. The course is designed to teach you everything you need to know about LLMs and RAG from the ground up.

Expect to spend 5-15 hours per week, depending on your background. This includes watching videos, completing homework, and working on the capstone project. More time may be needed during the final project weeks.

Yes! All course materials, videos, and recordings remain available after the cohort ends, and you can learn at your own pace. You’ll have access to the Slack community for support. However, self-paced learning does not include homework submissions, project evaluations, or the ability to earn a certificate. To receive a certificate, you need to join an active cohort.

No, certificates are only available when completing the course with a live cohort. Self-paced mode does not include homework submissions, project evaluations, or certificates. This is because the certification process requires you to peer-review three capstone projects, and these peer reviews only happen during the active course period. Additionally, the submission form closes after the peer-review list is compiled. Self-paced learners can access all course materials and the Slack community, but must join a live cohort to earn a certificate.

Yes, but if you want to receive a certificate, you need to submit your project while we’re still accepting submissions.

You don’t need a confirmation email. You are automatically accepted into the course. You can even start learning and submitting homework (while the form is open) without registering, as submissions are not checked against any registered list. Registration is primarily used to gauge interest before the start date.

There are no office hours—all lectures are pre-recorded and available in the YouTube playlist, so you can watch them whenever it suits you.

All course materials are in the GitHub repository. Each module has its own folder (for example, 01-intro or 03-evaluation), while cohort-specific homework and deadlines are located in cohorts/2025.

Occasionally, additional workshops or updated implementation videos are released—there will be additional announcements if this happens.

Yes! You can earn a certificate without submitting homework. What counts for the certificate is completing the capstone project and peer-reviewing 3 other students’ projects. Homework assignments are not mandatory, though they are recommended for reinforcing concepts and understanding the material better. Your homework points will count toward your position on the course leaderboard, but they don’t affect certificate eligibility.

You have multiple support channels available. Join the DataTalks.Club Slack community where you can ask questions and get help from instructors and fellow students. We also have an FAQ repository with answers to common questions and a @ZoomcampQABot in Slack for quick help.

The GitHub repository is https://github.com/DataTalksClub/llm-zoomcamp.

Course videos are available in the YouTube playlist. For easier navigation, refer to the GitHub repository. We also maintain year-specific playlists for updates.

You need to complete one capstone project to earn a certificate. The capstone project is a comprehensive end-to-end RAG application that demonstrates your mastery of all course concepts including retrieval, vector databases, evaluation, monitoring, and LLM orchestration. After submitting your project, you’ll also need to review at least 3 other students’ projects. Learn more about the capstone project and certificate requirements.

Large Language Models (LLMs) are AI systems trained on vast amounts of text data that can understand and generate human-like text. They power applications like ChatGPT, code assistants, and intelligent search systems. Learning LLMs is essential for anyone looking to build AI-powered applications, implement RAG (Retrieval-Augmented Generation) systems, or work with modern NLP technologies. The demand for LLM skills is rapidly growing across industries.

RAG (Retrieval-Augmented Generation) is a technique that combines information retrieval with LLM generation to create AI systems that can answer questions about specific knowledge bases. RAG is crucial because it allows LLMs to access up-to-date information and domain-specific knowledge that wasn’t in their training data. This course teaches you to build production-ready RAG systems from scratch.

The course covers essential LLM tools and platforms including OpenAI API for LLM integration, Hugging Face for open-source models, Elasticsearch for vector search and text search, Ollama for running LLMs locally, Streamlit for building UIs, Grafana for monitoring, LangChain for LLM orchestration, and vector databases for efficient retrieval. You’ll also learn evaluation techniques, monitoring best practices, and data ingestion pipelines.

The DataTalks.Club LLM community is a supportive network of 80,000+ data professionals and learners. As part of the LLM Zoomcamp, you’ll have access to a dedicated course channel in Slack where you can ask questions, get help from instructors and peers, share your progress, and connect with like-minded individuals. The community provides technical support, peer learning opportunities, and networking that can lead to collaborations and career opportunities. This active community is one of the key differentiators of the LLM Zoomcamp experience.

This comprehensive training covers the complete large language model application lifecycle. You’ll receive hands-on training in building RAG systems, working with open-source and commercial LLMs, implementing vector databases and search, evaluating and monitoring LLM applications, orchestrating LLM pipelines, and deploying production-ready LLM systems. The course includes 7 core technical modules, weekly homework assignments, and a capstone project. This practical training prepares you for real-world AI applications and is taught by expert instructors from the DataTalks.Club community.

Yes! This is a completely free LLM course, with a certificate available when you complete the course with a live cohort. There are no hidden costs or tuition fees. To earn your certificate, you’ll need to complete the technical modules, build one capstone RAG project, participate in peer reviews, and follow LLM best practices. This free course provides the same quality training as paid bootcamps but at no cost. Certificates, homework submissions, and project evaluations are only available when participating in a live cohort, not in self-paced mode.

Yes, certificates are available when completing the course with a live cohort. Requirements include completing the technical modules, building one capstone RAG project, participating in peer reviews (reviewing at least 3 other students’ projects), and following LLM best practices. Certificates, homework submissions, and project evaluations are not available in self-paced mode.