Finding the right data engineering course can be overwhelming when there are hundreds of options across different platforms, price points, and skill levels.

This blog post compares 15 free or free‑to‑audit data engineering courses and 5 paid options, detailing platforms, certificates, tech stacks, prerequisites, and durations so you can choose the course that fits your goals and budget.

Data Engineering Courses Summary Table

Free & Free-to-Audit Courses

| # | Course | Platform | Level | Format/Certificate | Duration |
|---|---|---|---|---|---|
| 1 | Data Engineering Zoomcamp | DataTalks.Club | Intermediate | Free + certificate | 9 weeks |
| 2 | IBM Data Engineering Professional Certificate | Coursera | Beginner | Free audit / paid cert | ~6 months |
| 3 | DeepLearning.AI Data Engineering Professional Certificate | Coursera | Intermediate | Free audit / paid cert | ~3 months |
| 4 | Snowflake Data Engineering Professional Certificate | Coursera | Beginner | Free audit / paid cert | ~4 weeks |
| 5 | Data Engineering Foundations Specialization | Coursera | Beginner | Free audit / paid cert | ~2 months |
| 6 | Introduction to Data Engineering | Coursera | Beginner | Free audit / paid cert | 1 week |
| 7 | Python, Bash and SQL Essentials for Data Engineering | Coursera | Beginner | Free audit / paid cert | ~4 months |
| 8 | Applied Python Data Engineering Specialization | Coursera | Intermediate | Free audit / paid cert | ~5 months |
| 9 | Generative AI for Data Engineers Specialization | Coursera | Intermediate | Free audit / paid cert | 8 weeks |
| 10 | Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate | Coursera | Intermediate | Free audit / paid cert | ~4 weeks |
| 11 | Meta Database Engineer Professional Certificate | Coursera | Beginner | Free audit / paid cert | ~6 months |
| 12 | AI: Advanced Data Engineering | edX | Advanced | Free audit / paid cert | 4 weeks |
| 13 | DelftX: AI Skills for Engineers - Data Engineering and Data Pipelines | edX | Introductory | Free audit / paid cert | 6 weeks |
| 14 | AI: Spark, Hadoop, and Snowflake for Data Engineering | edX | Introductory | Free audit / paid cert | 4 weeks |
| 15 | Understanding Data Engineering | DataCamp | Beginner | Free start / paid subscription | 2 hours |

Paid Courses & Certifications

| # | Course | Platform | Level | Format/Certificate | Duration |
|---|---|---|---|---|---|
| P1 | Data Engineer in Python Career Track | DataCamp | Intermediate | Paid subscription | ~40 hours |
| P2 | Data Engineering with AWS Nanodegree | Udacity | Intermediate | Paid nanodegree | 39 hours |
| P3 | Data Engineering with Microsoft Azure Nanodegree | Udacity | Advanced | Paid nanodegree | 56 hours |
| P4 | Data Engineer Career Path | Dataquest | Beginner | Paid subscription | ~4 months |
| P5 | AWS Certified Data Engineer - Associate (DEA-C01) Exam | AWS Certification | Associate | Paid exam | 130 minutes (self-paced prep) |

How We Collected This List

Fully free data‑engineering courses that grant both complete access to materials and a free certificate are rare. Many courses below allow auditing of lecture videos and readings for free, but graded assignments, peer feedback and certificates usually require payment. We have therefore included both truly free courses and free‑to‑audit courses in this list so you can choose the option that best fits your budget and learning goals.

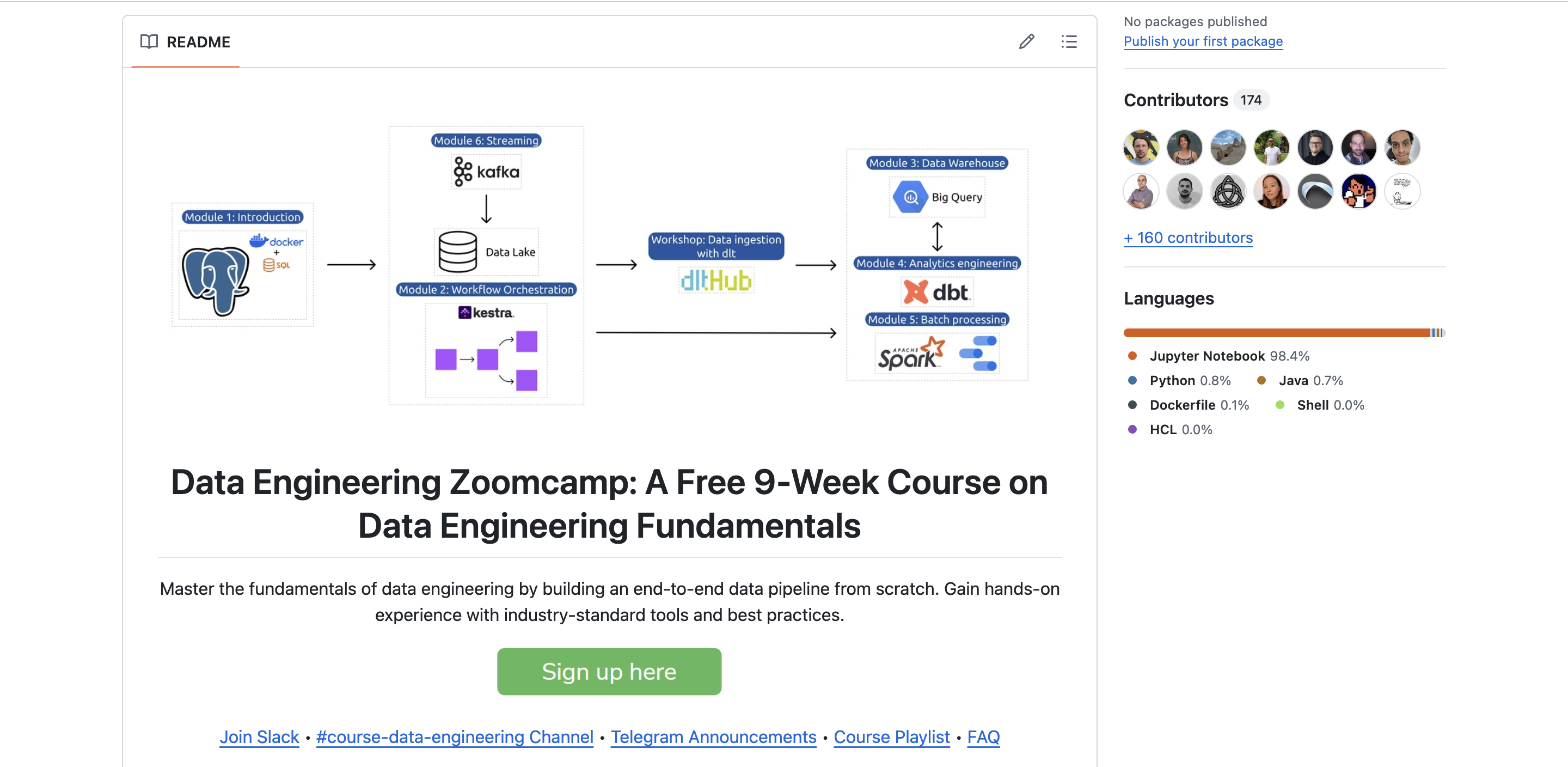

1. Data Engineering Zoomcamp

Data Engineering Zoomcamp: A community‑driven, hands‑on bootcamp for building production‑grade data pipelines

- Platform: DataTalks.Club (GitHub/Slack)

- Provider: DataTalks.Club community

- Level: Intermediate (beginner‑friendly)

- Prerequisites: Comfort with the command line and basic SQL; Python experience helpful but not mandatory.

- Key topics covered: Infrastructure & prerequisites; workflow orchestration; data warehousing; analytics engineering; batch & stream processing; capstone project.

- Tools/tech stack: Docker, PostgreSQL, GCP & Terraform, Mage.AI, Google Cloud Storage, BigQuery, dbt, BI tools, Apache Spark & Spark SQL, Kafka, KSQL, Faust.

- Format: Free (open materials & certificate)

- Duration: 9‑week structured program

- Certificate: Free certificate after completion

Data Engineering Zoomcamp is a free, community‑driven, hands‑on course that teaches how to build production‑grade data pipelines. Learners work through weekly modules, collaborate via Slack and complete a capstone project; the course emphasizes practical skills and portfolio building.

The next cohort of the Data Engineering Zoomcamp begins on January 12, 2026. Over 9 weeks, participants learn the skills needed to become a data engineer and build production‑grade data pipelines. Registration is open now.

2. IBM Data Engineering Professional Certificate

IBM Data Engineering Professional Certificate: A comprehensive program equipping beginners with job‑ready data‑engineering skills

- Platform: Coursera

- Provider: IBM

- Level: Beginner

- Prerequisites: Basic computer literacy; no programming experience required.

- Key topics covered: Relational databases (MySQL, PostgreSQL, Db2); NoSQL databases (MongoDB, Cassandra); big‑data tools (Hadoop, Spark); ETL and data pipelines with Apache Airflow and Kafka; generative‑AI basics.

- Tools/tech stack: SQL, MongoDB, Cassandra, Hadoop, Spark, Apache Airflow, Kafka, Python

- Format: Free to audit; paid certificate

- Duration: About 6 months at 10 hrs/week (16 courses)

- Certificate: IBM Professional Certificate

IBM Data Engineering Professional Certificate is a comprehensive program that equips beginners with job‑ready data‑engineering skills. Participants learn relational and NoSQL database management, big‑data processing and building ETL pipelines using Airflow and Kafka; the series culminates in applied projects.

3. DeepLearning.AI and AWS Data Engineering Professional Certificate

DeepLearning.AI and AWS Data Engineering Professional Certificate: A practitioner‑oriented program teaching data engineering mental models and practical pipeline techniques

- Platform: Coursera

- Provider: DeepLearning.AI

- Level: Intermediate

- Prerequisites: Intermediate Python programming and familiarity with data structures

- Key topics covered: Data‑engineering lifecycle; designing data models; building scalable pipelines; using AWS, Hadoop, Spark and Kinesis

- Tools/tech stack: AWS, Hadoop, Apache Spark, Kinesis, SQL, Python

- Format: Free to audit; paid certificate

- Duration: ~3 months, 3‑course series

- Certificate: Professional certificate from DeepLearning.AI

DeepLearning.AI Data Engineering Professional Certificate is a practitioner‑oriented program that teaches the mental model of data engineering and practical techniques for building pipelines on modern big‑data platforms.

4. Snowflake Data Engineering Professional Certificate

Snowflake Data Engineering Professional Certificate: Learn Snowflake's architecture and build scalable pipelines with SQL and Python

- Platform: Coursera

- Provider: Snowflake

- Level: Beginner

- Prerequisites: Basic SQL and Python recommended

- Key topics covered: Ingesting data at scale; performing transformations with SQL/Python; orchestration; DevOps and observability for data pipelines

- Tools/tech stack: Snowflake platform, SQL, Python, DevOps tooling

- Format: Free to audit; paid certificate

- Duration: About 4 weeks at ~10 hrs/week

- Certificate: Snowflake professional certificate

Snowflake Data Engineering Professional Certificate is a series that introduces learners to Snowflake’s architecture and teaches how to build and monitor scalable pipelines using SQL and Python, plus best practices in DevOps and observability.

5. IBM Data Engineering Foundations Specialization

IBM Data Engineering Foundations Specialization: A five‑course series introducing key concepts and tools for data engineers

- Platform: Coursera

- Provider: IBM

- Level: Beginner

- Prerequisites: Basic computer literacy; no prior data‑engineering experience

- Key topics covered: Python fundamentals; relational databases & SQL; database design; data‑engineering lifecycle; data architecture, pipelines and ETL

- Tools/tech stack: Python, SQL, IBM Db2, ETL tools

- Format: Free to audit; paid certificate

- Duration: 2 months (5 courses)

- Certificate: IBM specialization certificate

IBM Data Engineering Foundations Specialization is a five‑course series that introduces key concepts and tools used by data engineers, including programming in Python and designing databases and pipelines.

6. IBM Introduction to Data Engineering

IBM Introduction to Data Engineering: A short course providing a high‑level overview of data engineering and technologies

- Platform: Coursera

- Provider: IBM

- Level: Beginner

- Prerequisites: None

- Key topics covered: Data‑engineering roles; data‑platform architectures; relational vs NoSQL databases; big‑data engines (Hadoop, Spark); security & governance

- Tools/tech stack: SQL, NoSQL, Hadoop, Spark

- Format: Free to audit; paid certificate

- Duration: 1‑week course

- Certificate: Course certificate

IBM Introduction to Data Engineering is a short course that provides a high‑level overview of data engineering, the technologies involved and where data engineers fit within a data ecosystem.

7. Python, Bash and SQL Essentials for Data Engineering Specialization by Duke University

Python, Bash and SQL Essentials for Data Engineering Specialization: Build foundational skills in scripting and database interaction

- Platform: Coursera

- Provider: Duke University

- Level: Beginner

- Prerequisites: Beginner‑level Linux skills; no Python experience required

- Key topics covered: Python, Bash & Linux for data‑engineering tasks; connecting and querying SQL databases; web scraping; data manipulation and version control

- Tools/tech stack: Python, Bash, Linux CLI, pandas, Jupyter, Git, MySQL, Amazon SageMaker

- Format: Free to audit; paid certificate

- Duration: 4 months at 5 hrs/week (4 courses)

- Certificate: Duke specialization certificate

Python, Bash and SQL Essentials for Data Engineering Specialization builds foundational skills in scripting and database interaction, using hands‑on labs in Jupyter notebooks to practice Python, Bash and SQL.

8. Applied Python Data Engineering Specialization by Duke University

Applied Python Data Engineering Specialization: Learn to leverage big‑data platforms and DevOps practices for robust pipelines and ML workflows

- Platform: Coursera

- Provider: Duke University

- Level: Intermediate

- Prerequisites: Experience with Python, Git, Docker and Kubernetes; background in linear algebra and statistics

- Key topics covered: Building scalable big‑data pipelines (Hadoop, Spark, Snowflake, Databricks); ML workflows with PySpark & MLflow; DataOps/DevOps; data visualization and storytelling

- Tools/tech stack: Hadoop, Spark, Snowflake, Databricks, PySpark, MLflow, Python visualization libraries, Kubernetes, Docker

- Format: Free to audit; paid certificate

- Duration: 5 months at 10 hrs/week (3 courses)

- Certificate: Duke specialization certificate

Applied Python Data Engineering Specialization is designed for data‑focused software engineers and researchers. It teaches how to leverage big‑data platforms and DevOps practices to build robust pipelines and ML workflows.

9. Generative AI for Data Engineers Specialization by IBM

Generative AI for Data Engineers Specialization: Explore how generative AI enhances data‑engineering tasks and ETL pipelines

- Platform: Coursera

- Provider: IBM

- Level: Intermediate

- Prerequisites: No experience required, though prior data‑engineering knowledge is helpful

- Key topics covered: Generative‑AI models and tools (text/code/image/audio/video); prompt‑engineering techniques; using generative AI for data warehouse schema design, data generation, augmentation and anonymization; case studies in ETL and data repositories

- Tools/tech stack: Generative‑AI models, prompt‑engineering tools (ChatGPT, IBM Watsonx, Prompt Lab, Spellbook, Dust)

- Format: Free to audit; paid certificate

- Duration: 8 weeks at 2 hrs/week (3 courses)

- Certificate: IBM specialization certificate

Generative AI for Data Engineers Specialization explores how generative AI enhances data‑engineering tasks. Students learn prompt engineering and apply generative‑AI tools to ETL pipelines and data management.

10. Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate by Google Cloud

Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate: Prepare for Google Cloud's Professional Data Engineer certification

- Platform: Coursera

- Provider: Google Cloud

- Level: Intermediate

- Prerequisites: Proficiency with SQL and experience with programming languages such as Python

- Key topics covered: Identifying and using Google Cloud Big Data and ML products; interactive analysis with BigQuery; migrating MySQL and Hadoop workloads using Cloud SQL and Dataproc; choosing appropriate data‑processing services

- Tools/tech stack: BigQuery, Cloud SQL, Dataproc, Dataflow, Data Lakes, TensorFlow, Apache Hadoop & Spark

- Format: Free to audit; paid certificate; includes Qwiklabs hands‑on labs

- Duration: 4 weeks at about 10 hrs/week (5‑course series)

- Certificate: Google Cloud professional certificate

Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate prepares learners for Google Cloud’s Professional Data Engineer certification by teaching how to use Google’s data‑processing services, with hands‑on labs via Qwiklabs.

11. Meta Database Engineer Professional Certificate

Meta Database Engineer Professional Certificate: A beginner‑friendly program teaching database engineering from the ground up

- Platform: Coursera

- Provider: Meta

- Level: Beginner

- Prerequisites: None

- Key topics covered: SQL proficiency; database creation, management and optimization; building database‑driven Python applications; advanced data‑modeling concepts; interview preparation

- Tools/tech stack: MySQL, SQL, Python, Django, Linux CLI, Git

- Format: Free to audit; paid certificate

- Duration: 6 months at 6 hrs/week (9 courses)

- Certificate: Meta professional certificate

Meta Database Engineer Professional Certificate is a beginner‑friendly program that teaches database engineering from the ground up, covering relational databases, Python applications and data modeling, with five applied projects to build real‑world skills.

12. AI: Advanced Data Engineering by Pragmatic AI Labs

AI: Advanced Data Engineering: Learn to design and optimize data pipelines at enterprise scale using modern technologies

- Platform: edX

- Provider: Pragmatic AI Labs

- Level: Advanced

- Prerequisites: Previous data‑engineering experience

- Key topics covered: Scaling data systems; working with Celery and RabbitMQ for message queues; optimizing workflows with Apache Airflow; using vector and graph databases for scalable data management

- Tools/tech stack: Celery, RabbitMQ, Apache Airflow, vector databases, graph databases

- Format: Self‑paced; free to audit; paid certificate

- Duration: 4 weeks (3-6 hrs/week)

- Certificate: Verified certificate available

AI: Advanced Data Engineering by Pragmatic AI Labs is an advanced course that teaches how to design and optimize data pipelines at enterprise scale using modern message‑queuing and database technologies, with hands‑on labs for practicing each concept.

13. DelftX: AI Skills for Engineers - Data Engineering and Data Pipelines by Delft University of Technology (TU Delft)

DelftX: AI Skills for Engineers - Data Engineering and Data Pipelines: A foundational course introducing data‑engineering principles for AI applications

- Platform: edX

- Provider: Delft University of Technology (TU Delft)

- Level: Introductory

- Prerequisites: None

- Key topics covered: Importance of data management for AI; data requirements; obtaining data; extracting and querying data from databases with SQL; setting up Python notebooks; using pandas for tabular data; visualizing data with seaborn

- Tools/tech stack: Python, pandas, Jupyter notebooks, seaborn, SQL

- Format: Self‑paced; free to audit; paid certificate

- Duration: 6 weeks, 5-7 hrs/week

- Certificate: Verified certificate available

DelftX: AI Skills for Engineers - Data Engineering and Data Pipelines by Delft University of Technology (TU Delft) is a foundational course that introduces engineers to data‑engineering principles for AI applications, including SQL data extraction and Python‑based data handling and visualization.

14. AI: Spark, Hadoop, and Snowflake for Data Engineering by Pragmatic AI Labs

AI: Spark, Hadoop, and Snowflake for Data Engineering: Explore essential data‑engineering platforms and optimize them using PySpark and Python

- Platform: edX

- Provider: Pragmatic AI Labs

- Level: Introductory

- Prerequisites: No prior experience required

- Key topics covered: Managing & optimizing Hadoop, Spark and Snowflake; performing analytics and ML tasks in Databricks; using PySpark for Python data science; managing ML lifecycle with MLflow; applying Kaizen, DevOps & DataOps methodologies

- Tools/tech stack: Hadoop, Spark, Snowflake, Databricks, PySpark, MLflow

- Format: Self‑paced; free to audit; paid certificate

- Duration: 4 weeks (3-6 hrs/week)

- Certificate: Verified certificate available

AI: Spark, Hadoop, and Snowflake for Data Engineering by Pragmatic AI Labs is an introductory course that explores essential data‑engineering platforms and teaches how to optimize them using PySpark and Python while integrating DevOps/DataOps practices.

15. Understanding Data Engineering by DataCamp

Understanding Data Engineering: A short, no‑code course explaining data engineering concepts and workflows

- Platform: DataCamp

- Provider: DataCamp

- Level: Beginner

- Prerequisites: None

- Key topics covered: Definition of data engineering; data workflows (ingestion, storage, transformation, serving); differences between batch and streaming processing; responsibilities of data engineers versus other roles

- Tools/tech stack: Conceptual; introduces SQL and cloud concepts

- Format: Free to start; full access requires a paid subscription

- Duration: 2‑hour course with 11 videos & 32 exercises

- Certificate: DataCamp certificate

Understanding Data Engineering by DataCamp is a short, no‑code course that explains what data engineering is, outlines typical workflows and clarifies how the role differs from data science and analytics.

Paid Courses

1. Data Engineer in Python Career Track by DataCamp

- Platform: DataCamp

- Provider: DataCamp

- Level: Intermediate

- Prerequisites: Prior Python knowledge and familiarity with cloud concepts

- Key topics covered: Data manipulation and automation; building ETL/ELT pipelines; using Apache Airflow for workflow orchestration; efficient coding practices; version control with Git

- Tools/tech stack: Python, pandas, SQL, Apache Airflow, dbt, Git, command line, cloud services

- Format: Subscription‑based (paid)

- Duration: ~40 hours across multiple courses and projects

- Certificate: DataCamp track certificate

Data Engineer in Python Career Track by DataCamp is a professional track that deepens Python‑centric data‑engineering skills, teaching learners to design and automate data pipelines and orchestrate workflows using Airflow and industry best practices.

2. Data Engineering with AWS Nanodegree

- Platform: Udacity

- Provider: Udacity

- Level: Intermediate

- Prerequisites: Knowledge of relational data models, command‑line basics, intermediate Python and basic Git

- Key topics covered: Data modeling (relational & NoSQL); building ETL pipelines with PostgreSQL and Apache Cassandra; designing and implementing cloud data warehouses on AWS; working with Spark and data lakes; automating pipelines with Airflow

- Tools/tech stack: PostgreSQL, Apache Cassandra, Amazon S3, Redshift, IAM, VPC, EC2, RDS, Apache Spark, Airflow

- Format: Paid nanodegree program

- Duration: 39 hours; 4 courses & 4 projects

- Certificate: Udacity Nanodegree

Data Engineering with AWS Nanodegree by Udacity is a project‑based program that trains learners to design data models, build data warehouses and lakes and automate pipelines on AWS using Airflow and Spark.

3. Data Engineering with Microsoft Azure Nanodegree

- Platform: Udacity

- Provider: Udacity

- Level: Advanced

- Prerequisites: Command‑line basics, relational data models, intermediate Python and basic Git

- Key topics covered: Creating relational & NoSQL data models; designing and implementing cloud data warehouses with Azure Synapse; building data lakes and lakehouse architectures using Spark & Azure Databricks; orchestrating pipelines with Azure Data Factory & Synapse Analytics

- Tools/tech stack: PostgreSQL, Apache Cassandra, Azure Synapse Analytics, Azure Databricks, Azure Data Factory, Apache Spark

- Format: Paid nanodegree program

- Duration: 56 hours; 6 courses & 4 projects

- Certificate: Udacity Nanodegree

Data Engineering with Microsoft Azure Nanodegree by Udacity is a program that prepares learners to become cloud data engineers on Azure by teaching scalable data architectures, data warehouses/lakes and orchestrated pipelines.

4. Data Engineer Career Path by Dataquest

- Platform: Dataquest

- Provider: Dataquest

- Level: Beginner‑friendly

- Prerequisites: None (learning by doing)

- Key topics covered: Python programming; data architecture & pipelines; data wrangling & cleaning; SQL & multi‑table databases; algorithms; command‑line & Git; workflow automation

- Tools/tech stack: Python, pandas, NumPy, SQLite, PostgreSQL, MapReduce, Jupyter notebooks, command line, Git

- Format: Subscription‑based (paid); self‑paced

- Duration: 4 months at 5 hrs/week (22 courses & 12 projects)

- Certificate: Dataquest career‑path certificate

Data Engineer Career Path by Dataquest is a structured, project‑based path that takes learners from Python basics through building data pipelines and processing large datasets, with guided projects to build a portfolio.

5. AWS Certified Data Engineer - Associate

- Platform: AWS Certification

- Provider: Amazon Web Services

- Level: Associate-level certification

- Prerequisites: Recommended 2-3 years of data‑engineering experience and 1-2 years of hands‑on AWS experience

- Key topics covered: Implementing data pipelines; ingesting and transforming data; selecting optimal data stores; designing data models and managing data lifecycles; operationalizing and monitoring pipelines; ensuring authentication, encryption & governance

- Tools/tech stack: AWS services such as Amazon Kinesis, Amazon MSK, DynamoDB Streams, AWS DMS, AWS Glue, Amazon Redshift and other AWS data services

- Format: Certification exam; 65 multiple‑choice and multiple‑response questions

- Duration: Self‑study; exam is 130 minutes

- Certificate: AWS Certified Data Engineer - Associate credential

AWS Certified Data Engineer - Associate by Amazon Web Services is a certification that validates competence in designing, building and maintaining data pipelines on AWS, including data ingestion, storage, transformation and governance. AWS recommends that candidates have 2-3 years of data‑engineering experience before taking the exam.